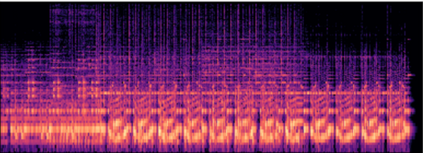

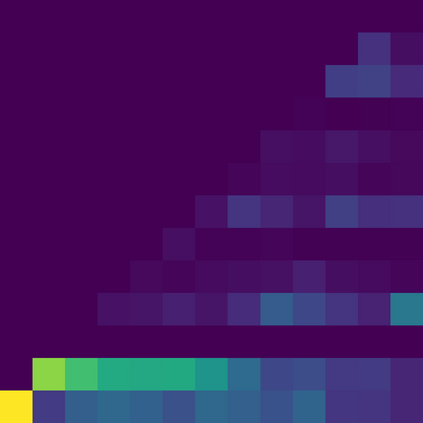

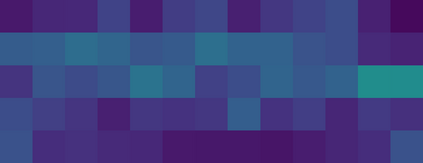

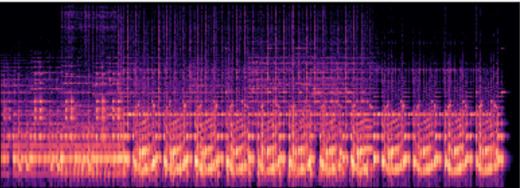

Automated audio captioning (AAC), which combines acoustic signal processing and natural language processing to generate human-readable sentences for audio clips, has developed rapidly in recent years. Current models are generally based on a neural encoder-decoder architecture, and their decoders mainly use acoustic information extracted by a CNN-based encoder. However, they ignore semantic information that could help the AAC model generate meaningful descriptions. This paper proposes a novel approach to automated audio captioning that incorporates both semantic and acoustic information. Specifically, our audio captioning model consists of two sub-modules. (1) The pre-trained keyword encoder initializes its parameters from a pre-trained ResNet38 and is then trained with extracted keywords as labels. (2) The multi-modal attention decoder is an LSTM-based decoder that contains semantic and acoustic attention modules. Experiments demonstrate that our proposed model achieves state-of-the-art performance on the Clotho dataset. Our code can be found at https://github.com/WangHelin1997/DCASE2021_Task6_PKU
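
To make the idea of a decoder with separate semantic and acoustic attention modules more concrete, the following is a minimal sketch of a single decoding step in plain Python. It is not the authors' implementation: the dot-product scoring, the fusion by concatenation, and all names (`attend`, `fuse_contexts`, `acoustic_feats`, `keyword_embs`) are illustrative assumptions, and the actual model in the linked repository may differ.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, memory):
    """Dot-product attention (an assumed scoring function).

    Returns the weighted sum of the memory rows and the attention weights.
    """
    scores = [sum(q * m for q, m in zip(query, row)) for row in memory]
    weights = softmax(scores)
    dim = len(memory[0])
    context = [sum(w * row[i] for w, row in zip(weights, memory))
               for i in range(dim)]
    return context, weights


def fuse_contexts(hidden, acoustic_feats, keyword_embs):
    """Attend over acoustic frames and keyword embeddings separately,
    then concatenate the two context vectors (an assumed fusion rule)."""
    acoustic_ctx, acoustic_w = attend(hidden, acoustic_feats)
    semantic_ctx, semantic_w = attend(hidden, keyword_embs)
    return acoustic_ctx + semantic_ctx, acoustic_w, semantic_w


# Toy inputs: a 2-dimensional decoder state, three acoustic frames,
# and two keyword embeddings (all values are made up for illustration).
hidden = [1.0, 0.0]
acoustic_feats = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
keyword_embs = [[0.0, 1.0], [1.0, 1.0]]

fused, acoustic_w, semantic_w = fuse_contexts(hidden, acoustic_feats, keyword_embs)
```

In a full decoder, the fused vector would typically be fed, together with the previous word embedding, into the LSTM cell that predicts the next caption token. Keeping the two attention modules separate lets the decoder weight audio frames and predicted keywords independently at each step.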
