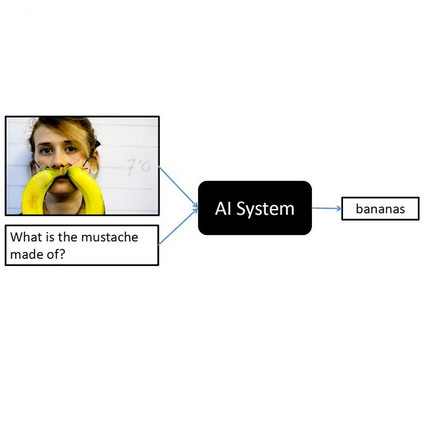

In the domain of Visual Question Answering (VQA), studies have shown that users' mental models of a VQA system improve when they are exposed to examples of how the system answers certain Image-Question (IQ) pairs. In this work, we show that controlled counterfactual image-question examples are more effective at improving users' mental models than randomly chosen examples. We compare a generative approach and a retrieval-based approach for producing counterfactual examples. In the generative approach, we use recent advances in generative adversarial networks (GANs) to generate counterfactual images by deleting and inpainting certain regions of interest in the image. We then expose users to the changes in the VQA system's answer on those altered images. To select the region of interest for inpainting, we experiment with both human-annotated attention maps and a fully automatic method that uses the VQA system's attention values. Finally, we test the user's mental model by asking them to predict the model's performance on a test counterfactual image. We observe an overall improvement in users' accuracy at predicting answer changes when they are shown counterfactual explanations. While realistic retrieved counterfactuals are, as expected, the most effective at improving the mental model, we show that a generative approach can be equally effective.
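The automatic region-selection step can be illustrated with a minimal sketch. It assumes the VQA system exposes its attention as a coarse grid of non-negative weights over the image; the function names, the `keep_fraction` parameter, and the top-fraction thresholding rule are all hypothetical choices made for illustration, not the procedure used in this work. The GAN-based inpainting itself is not shown; the sketch only produces the binary mask that such an inpainting model would receive.

```python
from typing import List

Grid = List[List[float]]
Mask = List[List[int]]


def attention_to_mask(attention: Grid, keep_fraction: float = 0.25) -> Mask:
    """Mark the most-attended grid cells (1) as the region to delete and inpaint.

    Assumption: the region of interest is the top `keep_fraction` of cells
    by attention weight. Cells tied with the threshold are all included,
    so slightly more than `keep_fraction` of the grid may be selected.
    """
    if not 0.0 < keep_fraction <= 1.0:
        raise ValueError("keep_fraction must be in (0, 1]")
    flat = sorted((v for row in attention for v in row), reverse=True)
    k = max(1, round(keep_fraction * len(flat)))
    threshold = flat[k - 1]
    return [[1 if v >= threshold else 0 for v in row] for row in attention]


def upsample_mask(mask: Mask, height: int, width: int) -> Mask:
    """Nearest-neighbour upsampling of the coarse mask to image resolution."""
    rows, cols = len(mask), len(mask[0])
    return [
        [mask[y * rows // height][x * cols // width] for x in range(width)]
        for y in range(height)
    ]


def answer_change_accuracy(predicted: List[bool], actual: List[bool]) -> float:
    """Fraction of test items where the user correctly predicted whether
    the VQA answer changes on the counterfactual image."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)


if __name__ == "__main__":
    attention = [[0.1, 0.2], [0.6, 0.1]]        # toy 2x2 attention grid
    coarse = attention_to_mask(attention, 0.25)  # selects the single top cell
    for row in upsample_mask(coarse, 4, 4):
        print(row)
    print(answer_change_accuracy([True, False, True], [True, True, True]))
```

The same mask format would also accommodate human-annotated attention maps, provided they are first discretized to a grid; that preprocessing is likewise an assumption of this sketch.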
