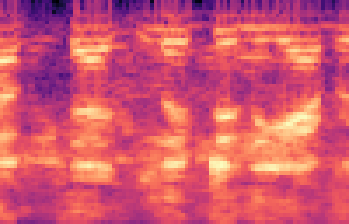

This paper proposes a unified deep speaker embedding framework for modeling speech data with different sampling rates. Treating the narrowband spectrogram as a sub-image of the wideband spectrogram, we cast the joint modeling of mixed-bandwidth data as an image classification problem. From this perspective, we develop several mixed-bandwidth joint training strategies for different training and test data scenarios. The proposed systems handle mixed-bandwidth speech data flexibly in a single speaker embedding model, without any additional downsampling, upsampling, bandwidth extension, or padding operations. We conduct extensive experimental studies on the VoxCeleb1 dataset, and further validate the effectiveness of the proposed approach on the SITW and NIST SRE 2016 datasets.
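The "sub-image" view rests on a property of the short-time Fourier transform. If the analysis window and hop are fixed in milliseconds and the FFT size scales with the sampling rate, both rates share the same frequency spacing. The 8 kHz spectrogram's bins (0 to 4 kHz) then line up with the lower bins of the 16 kHz spectrogram. The following is a minimal NumPy sketch of that alignment. It assumes a 25 ms Hann window, a 10 ms hop, and 31.25 Hz bin spacing. These are illustrative choices, not settings taken from the paper, and the helper `magnitude_spectrogram` is hypothetical.

```python
import numpy as np

def magnitude_spectrogram(x, sr, win_ms=25.0, hop_ms=10.0, hz_per_bin=31.25):
    """Hypothetical helper: Hann-windowed |STFT| with shape (freq_bins, frames).

    Window and hop are fixed in milliseconds; the FFT size is chosen so that
    every sampling rate gets the same frequency spacing (hz_per_bin).
    """
    win = int(round(sr * win_ms / 1000))   # 400 samples at 16 kHz, 200 at 8 kHz
    hop = int(round(sr * hop_ms / 1000))   # 160 samples at 16 kHz, 80 at 8 kHz
    n_fft = int(round(sr / hz_per_bin))    # 512 at 16 kHz, 256 at 8 kHz
    window = np.hanning(win)
    n_frames = 1 + (len(x) - win) // hop
    frames = np.stack([x[i * hop:i * hop + win] * window for i in range(n_frames)])
    # rfft zero-pads each frame to n_fft and keeps n_fft // 2 + 1 bins
    return np.abs(np.fft.rfft(frames, n=n_fft, axis=1)).T

# The same 1 kHz tone, sampled directly at 16 kHz (wideband) and 8 kHz (narrowband)
f0 = 1000.0
x16 = np.sin(2 * np.pi * f0 * np.arange(16000) / 16000)
x8 = np.sin(2 * np.pi * f0 * np.arange(8000) / 8000)

wb = magnitude_spectrogram(x16, 16000)  # shape (257, 98): 0 to 8 kHz
nb = magnitude_spectrogram(x8, 8000)    # shape (129, 98): 0 to 4 kHz

# The lowest 129 wideband bins cover the same frequencies as the narrowband bins,
# so the narrowband spectrogram has the shape of a sub-image of the wideband one.
freqs_wb = np.fft.rfftfreq(512, 1 / 16000)
freqs_nb = np.fft.rfftfreq(256, 1 / 8000)
print(np.allclose(freqs_wb[:nb.shape[0]], freqs_nb))
print(wb.argmax(axis=0)[0], nb.argmax(axis=0)[0])  # both peak in bin 32 (= 1000 Hz)
```

The two spectrograms agree in bin layout, not in absolute magnitude: the narrowband window has half as many samples, so its values are roughly half as large, and real narrowband (e.g. telephone) speech also carries band-limiting and channel effects. A network that pools over the frequency axis can accept either height, which is one way to read the claim that no padding or resampling is needed.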