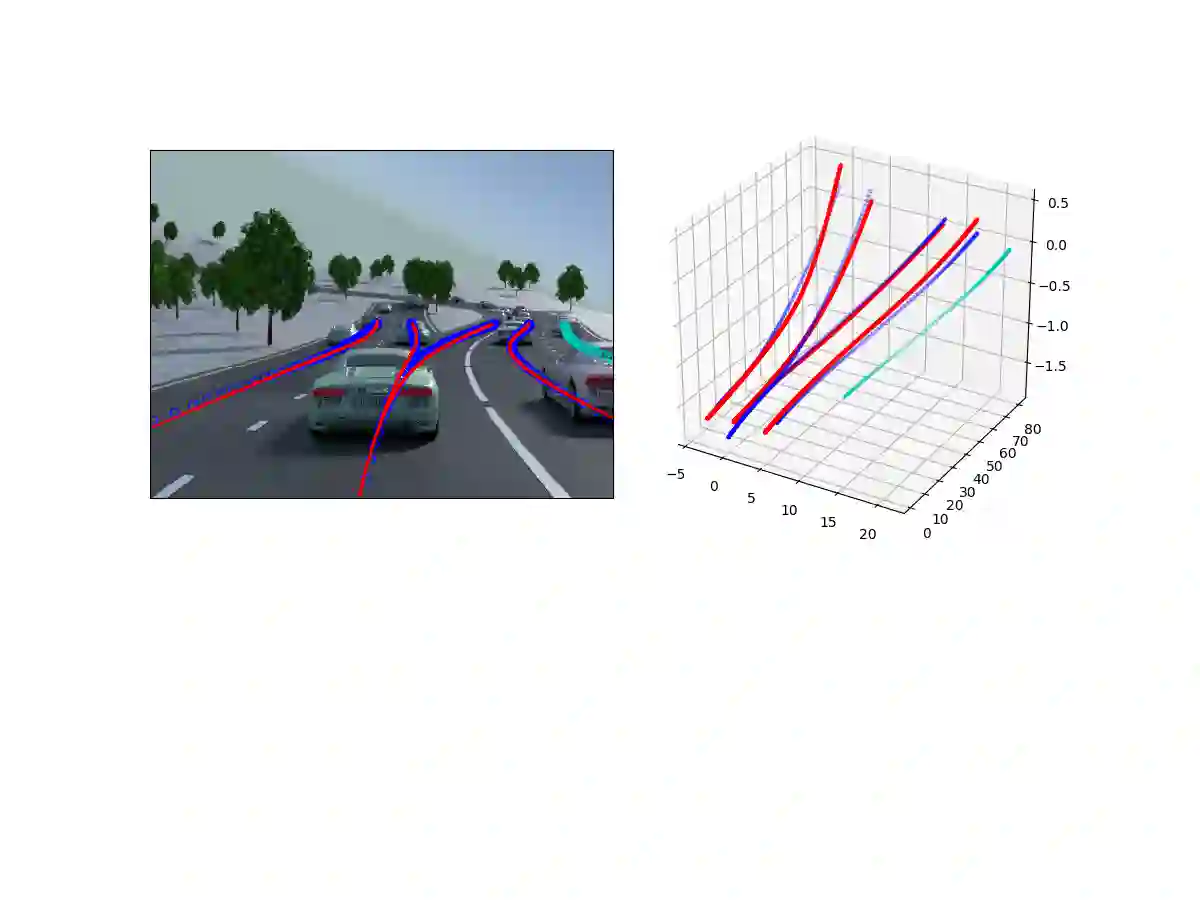

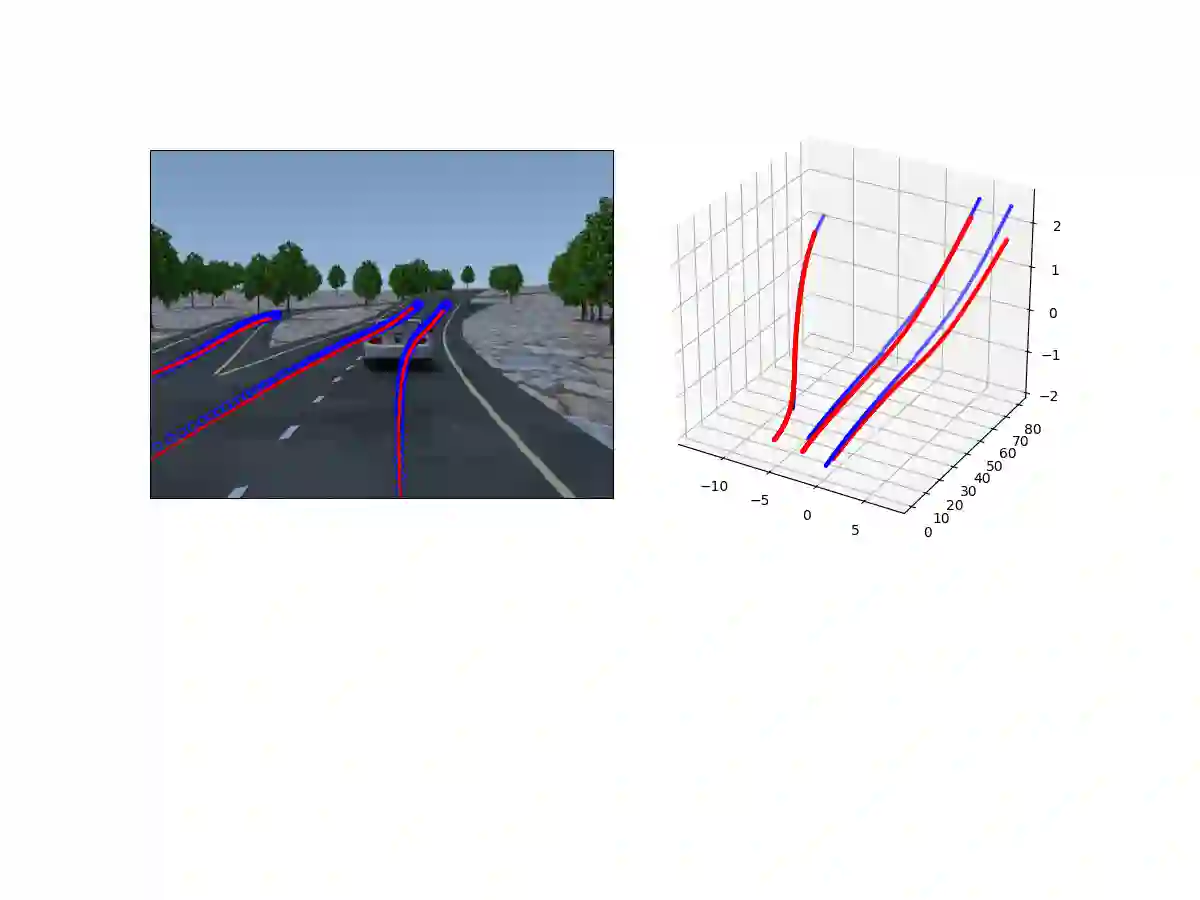

We introduce a network that directly predicts the 3D layout of lanes in a road scene from a single image. This work marks a first attempt to address this task with on-board sensing instead of relying on pre-mapped environments. Our network architecture, 3D-LaneNet, applies two new concepts: intra-network inverse-perspective mapping (IPM) and an anchor-based lane representation. The intra-network IPM projection facilitates a dual-representation information flow in both the regular image view and the top view. An anchor-per-column output representation enables our end-to-end approach, replacing common heuristics such as clustering and outlier rejection. In addition, our approach explicitly handles complex situations such as lane merges and splits. We show promising results on a new synthetic 3D lane dataset. For comparison with existing methods, we also evaluate our approach on the image-only tuSimple lane detection benchmark, where it achieves competitive performance.
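The abstract does not spell out the output format, so the following is only a minimal sketch of how an anchor-per-column output could be decoded, under stated assumptions: each top-view column (anchor) is assumed to predict a lane-existence confidence plus a lateral offset and a height at a few fixed longitudinal distances. The names `AnchorOutput`, `assign_anchor`, `decode_lanes`, `Y_REF`, and the 0.5 threshold are hypothetical illustrations, not the 3D-LaneNet implementation. The point it illustrates is why such an output needs no clustering: each anchor yields at most one lane, so decoding reduces to a confidence threshold.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

# Hypothetical longitudinal sampling distances (metres ahead of the camera)
# at which every anchor reports a lateral offset and a height.
Y_REF: Tuple[float, ...] = (5.0, 10.0, 20.0, 40.0, 60.0)

Point3D = Tuple[float, float, float]


@dataclass
class AnchorOutput:
    """Hypothetical network output for one top-view anchor column."""

    anchor_x: float          # lateral position of the anchor column (m)
    confidence: float        # predicted probability that a lane belongs to this anchor
    dx: Sequence[float]      # lateral offsets from anchor_x, one per Y_REF entry
    z: Sequence[float]       # lane heights, one per Y_REF entry


def assign_anchor(lane_x_at_first_y: float, anchor_xs: Sequence[float]) -> int:
    """Assumed training-time encoding: a lane goes to the anchor nearest to it at Y_REF[0]."""
    return min(range(len(anchor_xs)), key=lambda i: abs(anchor_xs[i] - lane_x_at_first_y))


def decode_lanes(outputs: Sequence[AnchorOutput], threshold: float = 0.5) -> List[List[Point3D]]:
    """Turn per-anchor predictions into 3D lane polylines.

    Each anchor describes at most one lane, so decoding is a per-anchor
    confidence threshold; no clustering or outlier-rejection pass is needed.
    """
    lanes: List[List[Point3D]] = []
    for out in outputs:
        if out.confidence < threshold:
            continue  # below threshold: no lane assigned to this anchor
        if len(out.dx) != len(Y_REF) or len(out.z) != len(Y_REF):
            raise ValueError("dx and z need one value per Y_REF entry")
        # Each 3D point is (anchor_x + offset, fixed longitudinal distance, height).
        lanes.append([(out.anchor_x + dx, y, z) for dx, y, z in zip(out.dx, Y_REF, out.z)])
    return lanes


if __name__ == "__main__":
    anchors = [-3.6, -1.8, 0.0, 1.8, 3.6]
    print("lane starting at x=-1.7 goes to anchor", assign_anchor(-1.7, anchors))
    preds = [
        AnchorOutput(-1.8, 0.9, [0.0, 0.1, 0.2, 0.4, 0.6], [0.0, 0.0, 0.1, 0.2, 0.3]),
        AnchorOutput(0.0, 0.2, [0.0] * 5, [0.0] * 5),
        AnchorOutput(1.8, 0.8, [0.0, -0.1, -0.2, -0.4, -0.6], [0.0] * 5),
    ]
    for lane in decode_lanes(preds):
        print(lane)
```

In this sketch, two lanes that converge (a merge) or diverge (a split) can both be represented as long as they are assigned to different anchors, since no step tries to group nearby points into a single lane.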
