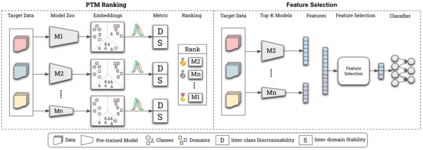

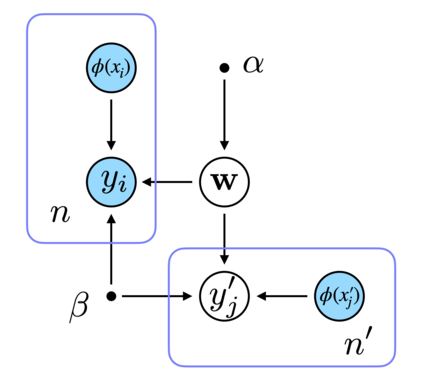

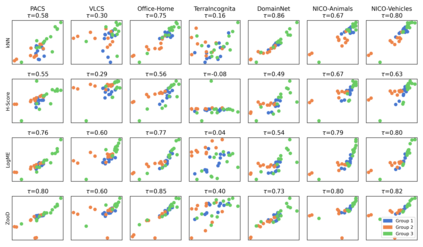

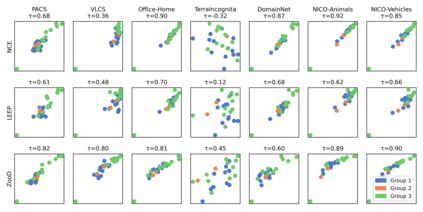

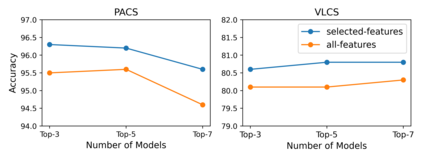

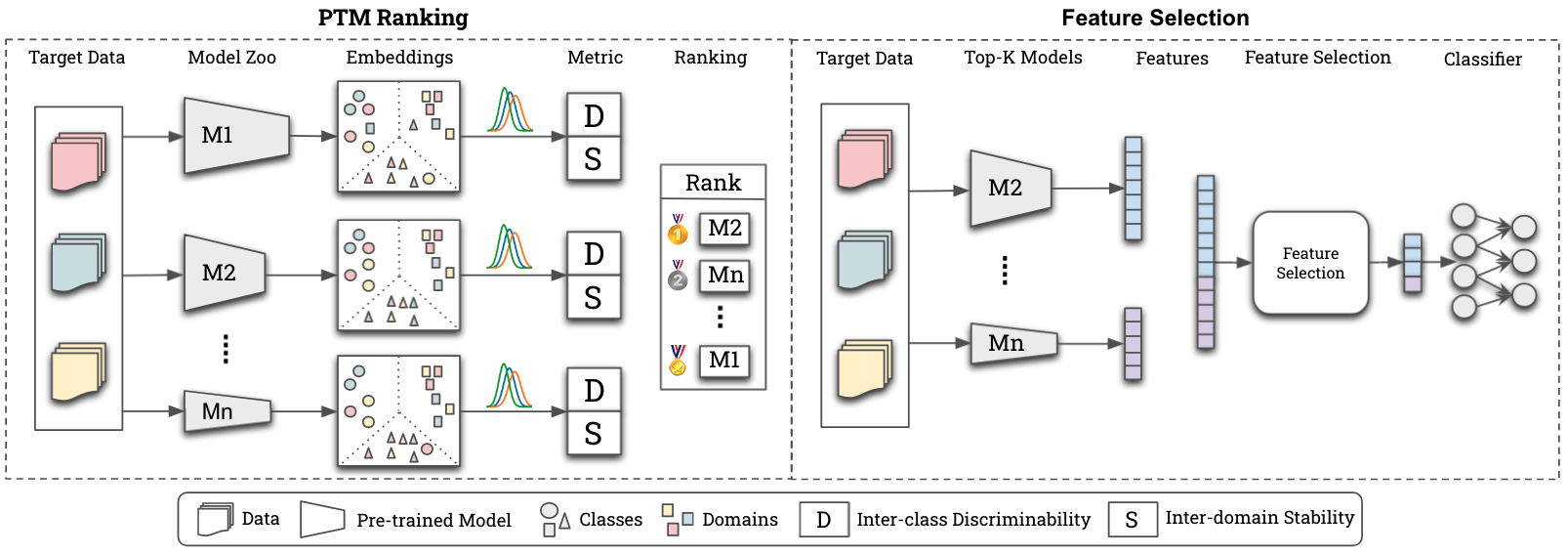

Recent advances in large-scale pre-training have shown the great potential of leveraging a large set of Pre-Trained Models (PTMs) to improve Out-of-Distribution (OoD) generalization, where the goal is to perform well on possibly unseen domains after fine-tuning on multiple training domains. However, maximally exploiting a zoo of PTMs is challenging: fine-tuning all possible combinations of PTMs is computationally prohibitive, while accurate selection of PTMs requires handling the possible data distribution shift in OoD tasks. In this work, we propose ZooD, a paradigm for PTM ranking and ensembling with feature selection. Our proposed metric ranks PTMs by quantifying the inter-class discriminability and inter-domain stability of the features extracted by each PTM in a leave-one-domain-out cross-validation manner. The top-K ranked models are then aggregated for the target OoD task. To avoid accumulating noise induced by model ensembling, we propose an efficient variational EM algorithm to select informative features. We evaluate our paradigm on a diverse model zoo consisting of 35 models for various OoD tasks and demonstrate that: (i) our model ranking is better correlated with fine-tuning ranking than previous methods and is up to 9859x faster than brute-force fine-tuning; (ii) OoD generalization after model ensembling with feature selection outperforms state-of-the-art methods, and accuracy on the most challenging task, DomainNet, improves from 46.5% to 50.6%. Furthermore, we provide the fine-tuning results of 35 PTMs on 7 OoD datasets, hoping to help research on model zoos and OoD generalization. Code will be available at https://gitee.com/mindspore/models/tree/master/research/cv/zood.
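To make the leave-one-domain-out ranking idea concrete, here is a minimal sketch in Python. It is not the ZooD metric: as a stand-in for the discriminability and stability terms, it scores each model's frozen features by the accuracy of a nearest-class-mean classifier on each held-out domain, then keeps the top-K models. The function names (`nearest_mean_accuracy`, `lodo_score`, `rank_models`) and the input layout (one feature matrix per model, with shared label and domain arrays) are assumptions made for illustration only.

```python
import numpy as np


def nearest_mean_accuracy(train_x, train_y, test_x, test_y):
    """Accuracy of a nearest-class-mean classifier (illustrative proxy only)."""
    classes = np.unique(train_y)
    # One mean feature vector per class, computed on the training domains.
    means = np.stack([train_x[train_y == c].mean(axis=0) for c in classes])
    # Squared Euclidean distance from every test sample to every class mean.
    dists = ((test_x[:, None, :] - means[None, :, :]) ** 2).sum(axis=-1)
    pred = classes[dists.argmin(axis=1)]
    return float((pred == test_y).mean())


def lodo_score(features, labels, domains):
    """Average held-out accuracy over leave-one-domain-out splits."""
    scores = []
    for d in np.unique(domains):
        held_out = domains == d
        scores.append(
            nearest_mean_accuracy(
                features[~held_out], labels[~held_out],
                features[held_out], labels[held_out],
            )
        )
    return float(np.mean(scores))


def rank_models(feature_sets, labels, domains, k):
    """Return the names of the k best-scoring models and all scores.

    feature_sets maps a (hypothetical) model name to an array of shape
    (n_samples, n_features) extracted by that model without fine-tuning.
    """
    scores = {
        name: lodo_score(feats, labels, domains)
        for name, feats in feature_sets.items()
    }
    top_k = sorted(scores, key=scores.get, reverse=True)[:k]
    return top_k, scores
```

Calling `rank_models({"model_a": feats_a, "model_b": feats_b}, labels, domains, k=1)` returns the best-scoring model name together with the per-model scores. Because each model needs only one forward pass to extract features, a feature-based score of this kind avoids fine-tuning every candidate, which is the source of the speed-up the abstract reports for the actual metric.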
