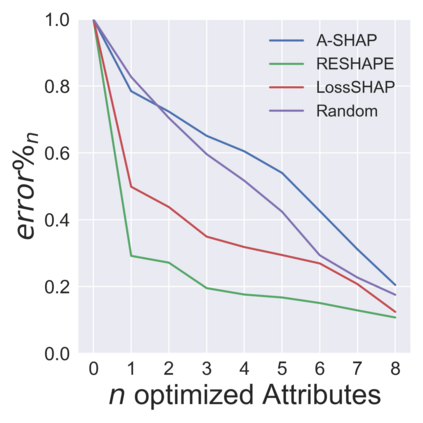

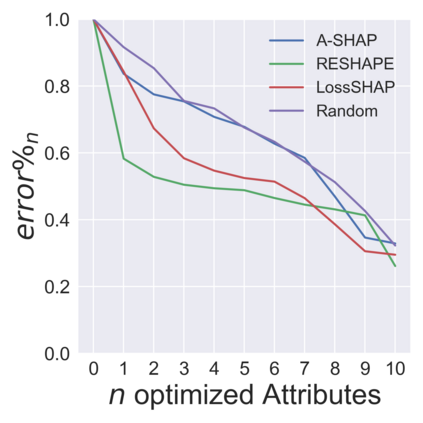

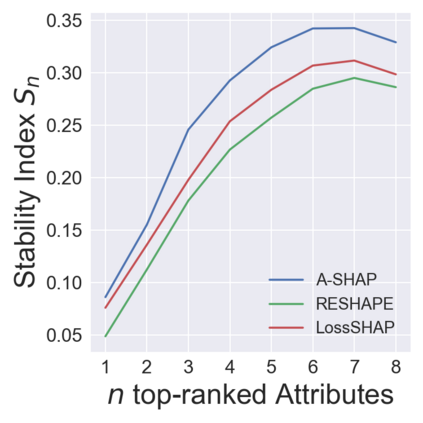

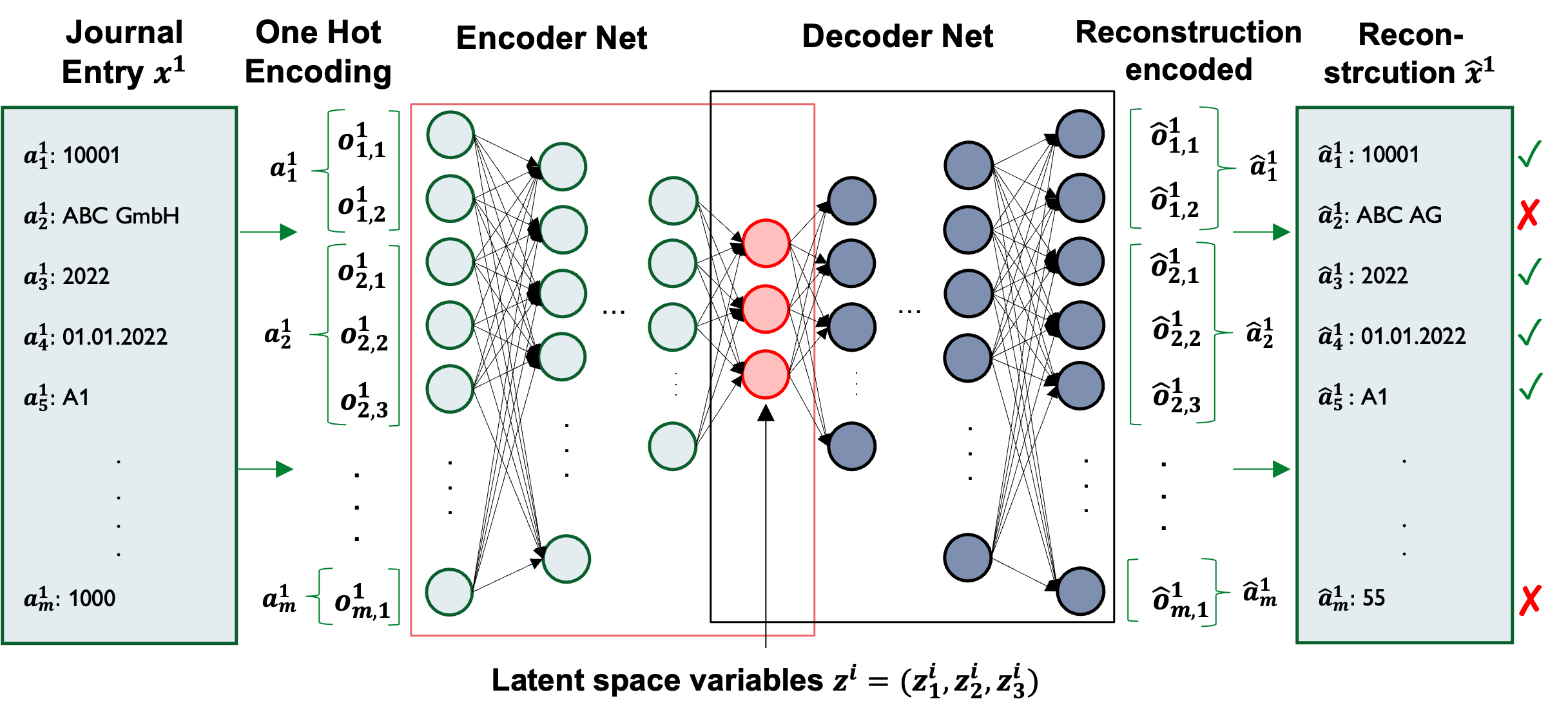

Detecting accounting anomalies is a recurring challenge in financial statement audits. Recently, novel methods derived from Deep Learning (DL) have been proposed to audit the large volumes of accounting records that underlie a financial statement. However, because of their vast number of parameters, such models are inherently opaque, and concealing a model's inner workings often hinders its real-world application. This holds particularly true in financial audits, since auditors must reasonably explain and justify their audit decisions. Various Explainable AI (XAI) techniques have been proposed to address this challenge, e.g., SHapley Additive exPlanations (SHAP). However, in unsupervised DL, as often applied in financial audits, these methods explain the model output at the level of encoded variables. As a result, the explanations of Autoencoder Neural Networks (AENNs) are often hard for human auditors to comprehend. To mitigate this drawback, we propose RESHAPE, which explains the model output on an aggregated attribute level. In addition, we introduce an evaluation framework to compare the versatility of XAI methods in auditing. Our experimental results provide empirical evidence that RESHAPE yields more versatile explanations than state-of-the-art baselines. We envision such attribute-level explanations as a necessary next step in the adoption of unsupervised DL techniques in financial auditing.
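To illustrate the difference between explanations at the level of encoded variables and explanations at the attribute level, the following is a minimal sketch and not the RESHAPE algorithm itself. It assumes that each categorical attribute of a journal entry has been one-hot encoded into several columns, that some XAI method has already produced one attribution score per encoded column, and that a simple sum is an acceptable way to aggregate them. The attribute names, the column layout, and the scores are all hypothetical.

```python
import numpy as np


def aggregate_attributions(encoded_attr, groups):
    """Sum encoded-variable attributions within each original attribute.

    encoded_attr: 1-D array with one attribution score per encoded column.
    groups: dict mapping an attribute name to the indices of its columns.
    """
    return {name: float(np.sum(encoded_attr[idx])) for name, idx in groups.items()}


# Hypothetical one-hot layout: three attributes spread over eight encoded columns.
groups = {
    "account": [0, 1, 2],
    "currency": [3, 4],
    "posting_key": [5, 6, 7],
}

# Hypothetical per-column attribution scores for a single journal entry.
encoded_attr = np.array([0.5, 0.25, 0.0, 0.125, 0.125, 1.0, 0.5, 0.25])

# One score per attribute instead of one score per encoded column.
attribute_level = aggregate_attributions(encoded_attr, groups)

# Rank attributes by their aggregated contribution, largest first.
ranked = sorted(attribute_level.items(), key=lambda kv: kv[1], reverse=True)
```

An auditor reading `ranked` sees three attribute names rather than eight encoded columns, which is the kind of readability gain the abstract argues for. How the attributions are actually computed and aggregated in RESHAPE is not specified in this text.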
