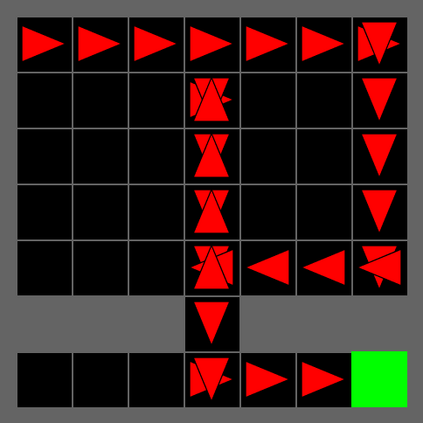

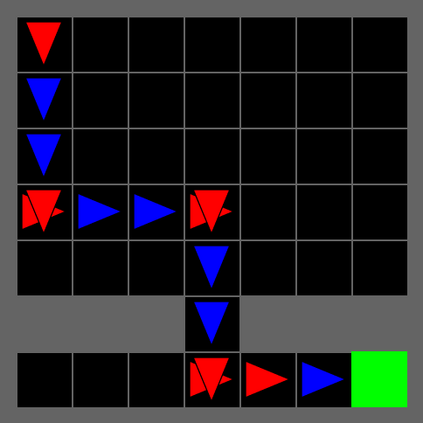

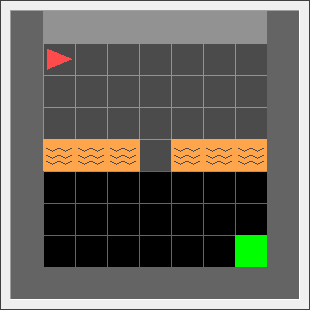

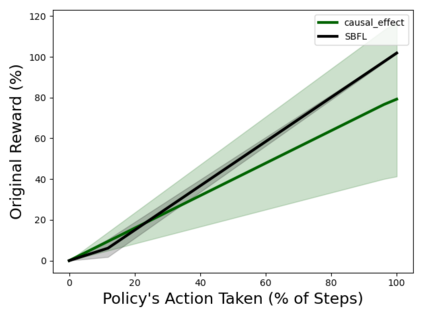

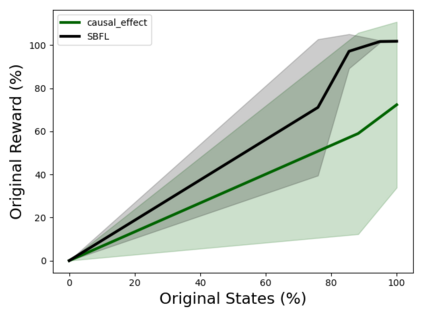

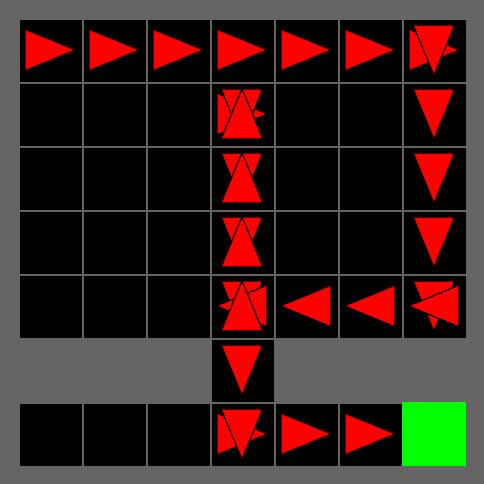

Policies trained via reinforcement learning (RL) are often very complex, even for simple tasks. In an episode with $n$ time steps, a policy makes $n$ decisions about which action to take, many of which may appear non-intuitive to an observer. Moreover, it is unclear which of these decisions contribute directly to achieving the reward, or how significant each contribution is. Given a trained policy, we propose a black-box method based on counterfactual reasoning that estimates the causal effect of each decision on reward attainment and ranks the decisions by this estimate. In this preliminary work, we compare our measure against an alternative, non-causal ranking procedure, highlight the benefits of causality-based policy ranking, and discuss potential future work on integrating causal algorithms into the interpretation of RL agent policies.
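
To make the idea of counterfactual ranking concrete, the following is a minimal sketch and not the authors' implementation. The abstract does not say how counterfactuals are constructed, so everything here is an assumption made for illustration: the toy deterministic MDP in `TRANSITIONS`, the choice to intervene per state by substituting a fixed `default_action`, the integer return, and the helper names `rollout` and `rank_decisions`. The policy is only ever queried for actions, which keeps the procedure black-box.

```python
from typing import Dict, List, Tuple

State = str
Action = str

# Hypothetical toy MDP (an assumption, not taken from the paper).
# Deterministic transitions: (state, action) -> next state.
TRANSITIONS: Dict[Tuple[State, Action], State] = {
    ("A", "go"): "B", ("A", "alt"): "C",
    ("B", "go"): "G", ("B", "alt"): "B",
    ("C", "go"): "D", ("C", "alt"): "C",
    ("D", "go"): "G", ("D", "alt"): "D",
}
START, GOAL, HORIZON = "A", "G", 6


def rollout(policy: Dict[State, Action]) -> int:
    """Run one episode; return 10 minus the steps taken if the goal is reached, else 0."""
    state = START
    for step in range(1, HORIZON + 1):
        state = TRANSITIONS[(state, policy[state])]
        if state == GOAL:
            return 10 - step
    return 0


def rank_decisions(
    policy: Dict[State, Action], default_action: Action = "alt"
) -> List[Tuple[State, int]]:
    """Rank each state's decision by the return lost when it is replaced.

    Assumed counterfactual: override the policy's action in one state with
    `default_action`, leave every other decision unchanged, and re-run.
    """
    baseline = rollout(policy)
    effects: Dict[State, int] = {}
    for state in policy:
        counterfactual = dict(policy)
        counterfactual[state] = default_action
        effects[state] = baseline - rollout(counterfactual)
    # Largest drop in return first; ties keep the policy's state order.
    return sorted(effects.items(), key=lambda kv: kv[1], reverse=True)


policy = {"A": "go", "B": "go", "C": "go", "D": "go"}
ranking = rank_decisions(policy)
print(ranking)
```

In this toy setting the decision in state `B` ranks highest because overriding it prevents the goal from being reached at all, the decision in `A` ranks lower because the override only forces a longer route, and the decisions in `C` and `D` have no effect because the original policy never visits those states.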
