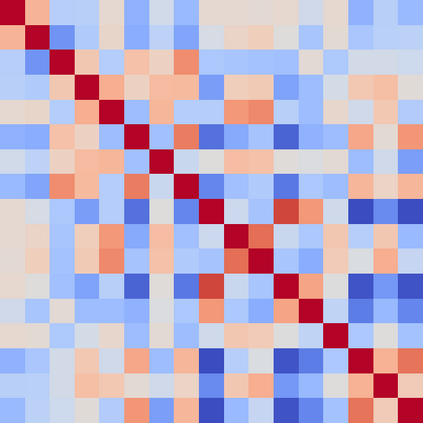

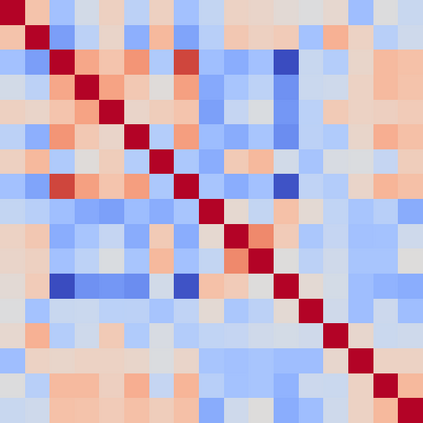

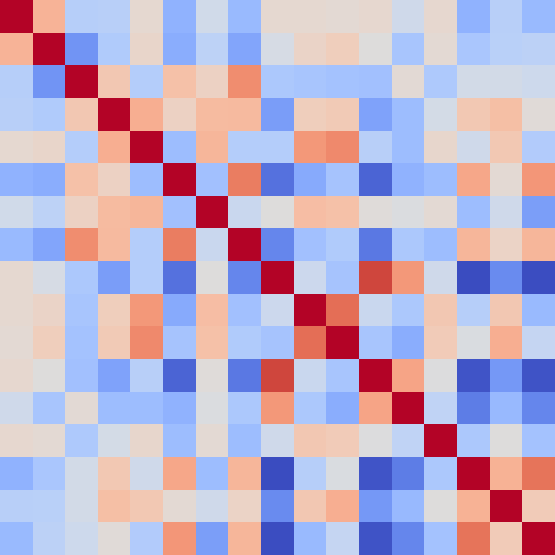

Learning to disentangle and represent the factors of variation in data is an important problem in AI. Although many advances have been made in learning such representations, it is still unclear how to quantify disentanglement. Several metrics exist, but little is known about their implicit assumptions, what they actually measure, and their limitations. Consequently, results are difficult to interpret when different representations are compared. In this work, we survey supervised disentanglement metrics and analyze them thoroughly. We propose a new taxonomy in which every metric falls into one of three families: intervention-based, predictor-based, and information-based. We conduct extensive experiments that isolate individual properties of disentangled representations, allowing a stratified comparison along several axes. From our experimental results and analysis, we derive insights into the relations between properties of disentangled representations. Finally, we share guidelines on how to measure disentanglement.
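
To make the information-based family concrete, the sketch below computes the Mutual Information Gap (MIG) of Chen et al. (2018), one widely used metric of that kind. It is a minimal sketch under stated assumptions, not the survey's own implementation: ground-truth factors are discrete labels, each latent dimension is discretized into equal-width histogram bins (the `bins=10` default is an arbitrary choice), mutual information is estimated from empirical counts, and at least two latent dimensions are required. For each factor, MIG takes the gap between the two latents that share the most information with it, normalizes that gap by the factor's entropy, and averages over factors. A score near 1 means each factor is captured mainly by a single latent.

```python
import math
from collections import Counter


def entropy(xs):
    """Empirical Shannon entropy (in nats) of a sequence of discrete values."""
    n = len(xs)
    return -sum((c / n) * math.log(c / n) for c in Counter(xs).values())


def mutual_information(xs, ys):
    """Empirical I(X;Y) = H(X) + H(Y) - H(X,Y) for paired discrete samples."""
    return entropy(xs) + entropy(ys) - entropy(list(zip(xs, ys)))


def discretize(values, bins):
    """Map continuous values to equal-width histogram bin indices (an assumed estimator)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0] * len(values)
    width = (hi - lo) / bins
    return [min(int((v - lo) / width), bins - 1) for v in values]


def mig(latents, factors, bins=10):
    """Mutual Information Gap.

    latents: list of latent dimensions, each a list of floats (one per sample).
    factors: list of ground-truth factors, each a list of discrete labels.
    """
    if len(latents) < 2:
        raise ValueError("MIG needs at least two latent dimensions")
    codes = [discretize(z, bins) for z in latents]
    gaps = []
    for v in factors:
        h = entropy(v)
        if h == 0:
            continue  # a constant factor carries no information; skip it
        mis = sorted((mutual_information(c, v) for c in codes), reverse=True)
        gaps.append((mis[0] - mis[1]) / h)  # top-1 minus top-2, normalized
    return sum(gaps) / len(gaps) if gaps else 0.0


# Toy check: two binary factors observed over four samples.
f0, f1 = [0, 0, 1, 1], [0, 1, 0, 1]
print(mig([[0.0, 0.0, 1.0, 1.0], [0.0, 1.0, 0.0, 1.0]], [f0, f1]))  # ~1.0
print(mig([[0.0, 0.0, 1.0, 1.0], [0.0, 0.0, 1.0, 1.0]], [f0, f1]))  # 0.0
```

In the toy check, the first representation copies each factor into its own latent, so every factor has one clearly dominant latent. In the second, both latents copy the same factor: no single latent stands out for `f0`, and neither latent carries information about `f1`, so the gap collapses to zero.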

Related content

- Today (iOS and OS X): widgets for the Today view of Notification Center

- Share (iOS and OS X): post content to web services or share content with others

- Actions (iOS and OS X): app extensions that view or manipulate content inside another app

- Photo Editing (iOS): edit a photo or video in Apple's Photos app with extensions from third-party apps

- Finder Sync (OS X): remote file storage in the Finder with support for Finder content annotation

- Storage Provider (iOS): an interface between files inside an app and other apps on a user's device

- Custom Keyboard (iOS): system-wide alternative keyboards

Source: iOS 8 Extensions: Apple’s Plan for a Powerful App Ecosystem