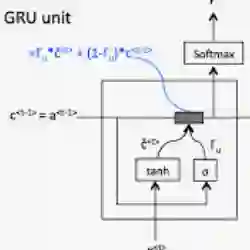

A field that has directly benefited from the recent advances in deep learning is Automatic Speech Recognition (ASR). Despite the great achievements of the past decades, however, natural and robust human-machine speech interaction still appears to be out of reach, especially in challenging environments characterized by significant noise and reverberation. To improve robustness, modern speech recognizers often employ acoustic models based on Recurrent Neural Networks (RNNs), which are naturally able to exploit large time contexts and long-term speech modulations. It is thus of great interest to continue studying techniques that improve the effectiveness of RNNs in processing speech signals. In this paper, we revise one of the most popular RNN models, namely Gated Recurrent Units (GRUs), and propose a simplified architecture that proves very effective for ASR. The contribution of this work is two-fold. First, we analyze the role played by the reset gate, showing that it is significantly redundant with the update gate. As a result, we propose to remove the reset gate from the GRU design, leading to a more efficient and compact single-gate model. Second, we propose to replace the hyperbolic tangent with ReLU activations. This variation couples well with batch normalization and could help the model learn long-term dependencies without numerical issues. Results show that the proposed architecture, called Light GRU (Li-GRU), not only reduces the per-epoch training time by more than 30% over a standard GRU, but also consistently improves recognition accuracy across different tasks, input features, and noise conditions, as well as across different ASR paradigms, ranging from standard DNN-HMM speech recognizers to end-to-end CTC models.
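The two modifications described above (no reset gate, ReLU in place of the hyperbolic tangent, combined with batch normalization) can be illustrated with a minimal NumPy sketch. The abstract does not state the equations, so this assumes the usual GRU update rule with the reset gate simply dropped. It also assumes that batch normalization is applied only to the input projections, with statistics taken over batch and time and no learned scale or shift. The names `li_gru_forward`, `batch_norm`, `Wz`, `Uz`, `Wh`, and `Uh` are hypothetical and chosen for illustration only.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def batch_norm(a, eps=1e-5):
    # Assumption: normalize each feature over the batch and time axes,
    # without the learned scale/shift a full implementation would have.
    mean = a.mean(axis=(0, 1), keepdims=True)
    var = a.var(axis=(0, 1), keepdims=True)
    return (a - mean) / np.sqrt(var + eps)


def li_gru_forward(x, Wz, Uz, Wh, Uh):
    """Run a single-gate, ReLU-based recurrent layer over a batch.

    x:  (batch, time, n_in) input features
    Wz, Wh: (n_in, n_hid) input-to-hidden weights (update gate, candidate)
    Uz, Uh: (n_hid, n_hid) hidden-to-hidden weights
    Returns hidden states of shape (batch, time, n_hid).
    """
    batch, time, _ = x.shape
    n_hid = Uz.shape[0]

    # Input projections do not depend on the recurrence, so they are
    # computed (and normalized) for all time steps at once.
    az = batch_norm(x @ Wz)
    ah = batch_norm(x @ Wh)

    h = np.zeros((batch, n_hid))
    outputs = []
    for t in range(time):
        # Update gate: the only gate left once the reset gate is removed.
        z = sigmoid(az[:, t] + h @ Uz)
        # Candidate state: ReLU instead of tanh, and no reset gate
        # multiplying the previous hidden state.
        cand = np.maximum(0.0, ah[:, t] + h @ Uh)
        # Interpolate between the previous state and the candidate.
        h = z * h + (1.0 - z) * cand
        outputs.append(h)
    return np.stack(outputs, axis=1)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_in, n_hid = 3, 5
    x = rng.standard_normal((4, 6, n_in))
    Wz = 0.1 * rng.standard_normal((n_in, n_hid))
    Wh = 0.1 * rng.standard_normal((n_in, n_hid))
    Uz = 0.1 * rng.standard_normal((n_hid, n_hid))
    Uh = 0.1 * rng.standard_normal((n_hid, n_hid))
    print(li_gru_forward(x, Wz, Uz, Wh, Uh).shape)
```

In this sketch the layer keeps two of the three weight-matrix pairs of a standard GRU (update gate and candidate state), which is where the more compact single-gate design comes from. Because the candidate state is unbounded under ReLU, normalizing the input projections is one plausible way to keep activations in a reasonable range.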
