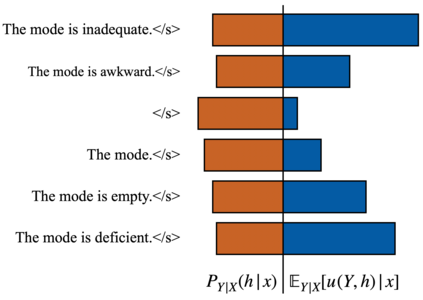

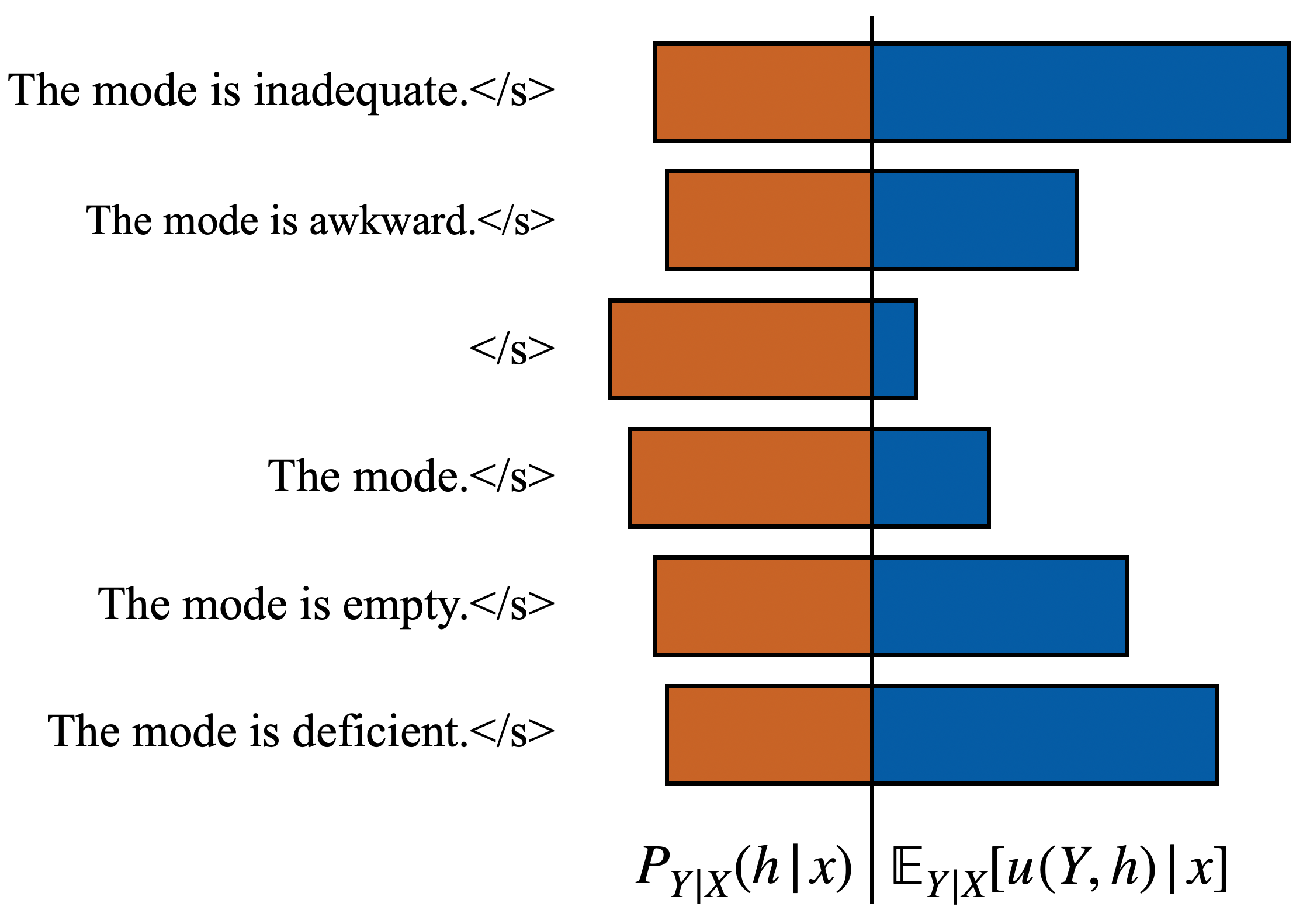

In neural machine translation (NMT), we search for the mode of the model distribution to form predictions. The mode as well as other high probability translations found by beam search have been shown to often be inadequate in a number of ways. This prevents practitioners from improving translation quality through better search, as these idiosyncratic translations end up being selected by the decoding algorithm, a problem known as the beam search curse. Recently, a sampling-based approximation to minimum Bayes risk (MBR) decoding has been proposed as an alternative decision rule for NMT that would likely not suffer from the same problems. We analyse this approximation and establish that it has no equivalent to the beam search curse, i.e. better search always leads to better translations. We also design different approximations aimed at decoupling the cost of exploration from the cost of robust estimation of expected utility. This allows for exploration of much larger hypothesis spaces, which we show to be beneficial. We also show that it can be beneficial to make use of strategies like beam search and nucleus sampling to construct hypothesis spaces efficiently. We show on three language pairs (English into and from German, Romanian, and Nepali) that MBR can improve upon beam search with moderate computation.

翻译:在神经机翻译(NMT)中,我们寻找模型分配模式的模式,以作出预测。通过梁搜索找到的模式和其他高概率翻译往往在很多方面都显示不够。这妨碍了从业人员通过更好的搜索提高翻译质量,因为这些奇特的翻译最终是由解码算法(一个被称为光束搜索诅咒的问题)所选择的。最近,我们提出了一种基于抽样的近似比对最小巴伊斯风险解码(MBR)的替代决定规则,作为NMT可能不会遭受同样问题影响的替代决定规则。我们分析这一近似,确定它不等同于光线搜索诅咒,即更好的搜索总是导致更好的翻译。我们还设计了不同的近似方法,旨在将勘探成本与严格估计预期效用的成本脱钩。这样可以探索大得多的假设空间,我们可以证明这样做是有益的。我们还表明,利用像浅搜索和核心取样这样的战略来高效地构建假说空间是有好处的。我们展示了三种语言配对(英语和从德国、罗马尼亚和尼泊尔的搜索可以改进M)。