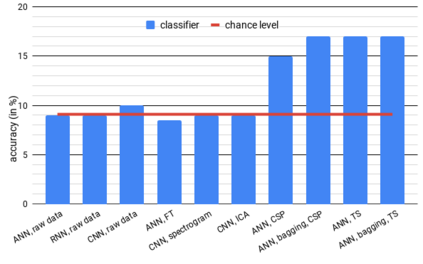

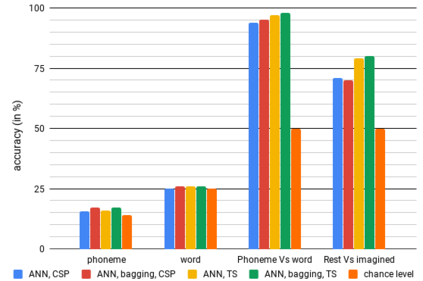

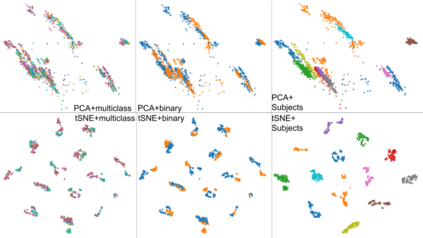

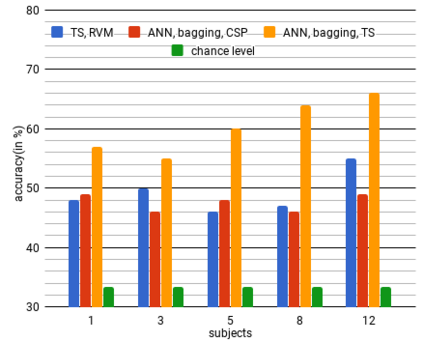

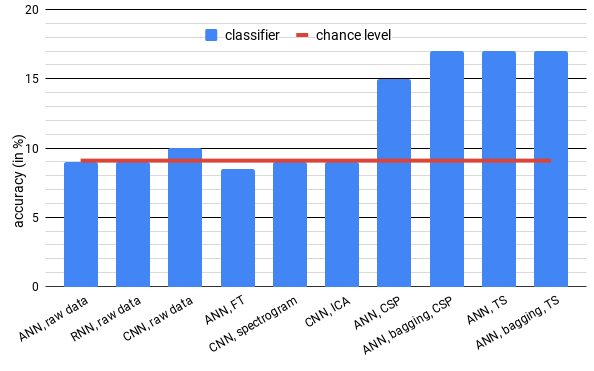

This work explores the possibility of decoding Imagined Speech (IS) signals, which could be used to design a new kind of Human-Computer Interface (HCI). Since the underlying process that generates electroencephalography (EEG) signals is unknown, various feature extraction methods, along with different neural network (NN) models, are used to approximate the data distribution and classify IS signals. In our experiments, a feed-forward NN model with ensemble and covariance-matrix-transformed features achieved the highest performance compared with other existing methods. Three publicly available datasets were used for the comparison. We report a mean classification accuracy of 80% for distinguishing rest from the imagined state, and 96% and 80% for decoding long and short words on two datasets. These results show that brain signals generated during the rest state can be differentiated from IS brain signals. Based on the experimental results, we suggest that word length and complexity can be used to decode IS signals with high accuracy, and that a brain-computer interface (BCI) system can be designed with IS signals for computer interaction. These ideas and results give direction for the development of a commercial-grade IS-based BCI system that can be used for human-computer interaction in daily life.
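As a rough illustration of what a covariance matrix feature transform might look like, the sketch below maps each EEG trial to the upper triangle of its channel-by-channel sample covariance matrix. The abstract does not say how the transform is actually computed, so the function name `covariance_features`, the array layout `(n_trials, n_channels, n_samples)`, and the synthetic input are all assumptions made for this example; the resulting vectors are the kind of input a feed-forward classifier could consume.

```python
import numpy as np


def covariance_features(trials):
    """Map trials of shape (n_trials, n_channels, n_samples) to flattened
    upper-triangular covariance features (hypothetical helper)."""
    trials = np.asarray(trials, dtype=float)
    n_trials, n_channels, n_samples = trials.shape
    # Remove each channel's mean over time before estimating covariance.
    centered = trials - trials.mean(axis=2, keepdims=True)
    # One (n_channels x n_channels) sample covariance matrix per trial.
    cov = centered @ centered.transpose(0, 2, 1) / (n_samples - 1)
    # The matrix is symmetric, so keep only the upper triangle and diagonal.
    rows, cols = np.triu_indices(n_channels)
    return cov[:, rows, cols]


# Synthetic stand-in data (assumption): 4 trials, 3 channels, 128 samples.
rng = np.random.default_rng(0)
demo_trials = rng.standard_normal((4, 3, 128))
features = covariance_features(demo_trials)
print(features.shape)  # (4, 6)
```

With 3 channels, each trial yields 3 · 4 / 2 = 6 features; real EEG montages with more channels would produce correspondingly longer vectors.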
