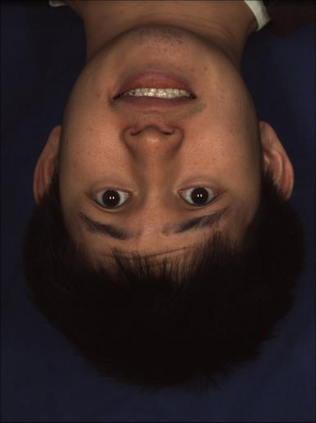

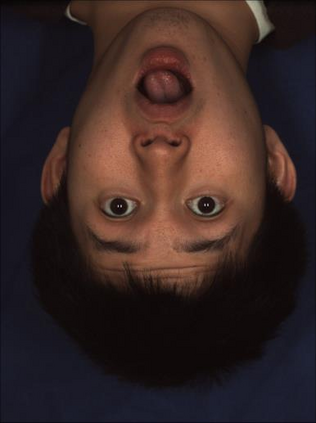

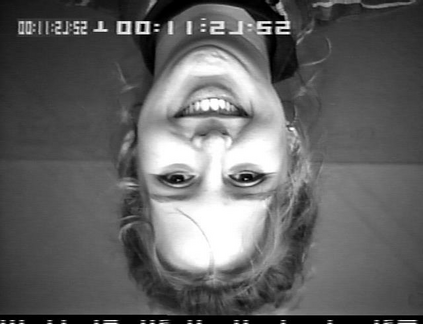

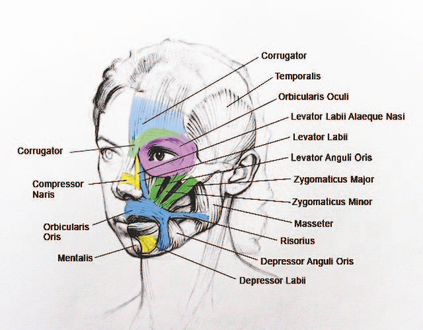

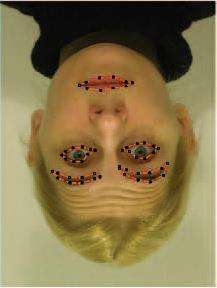

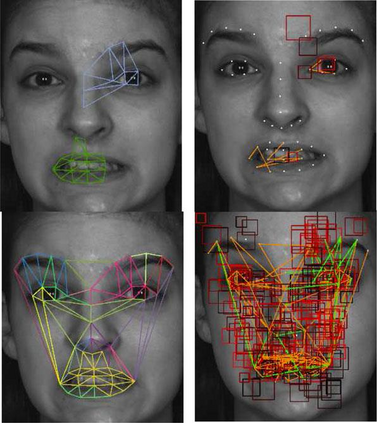

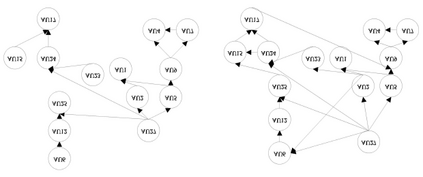

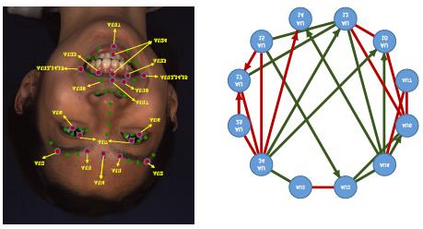

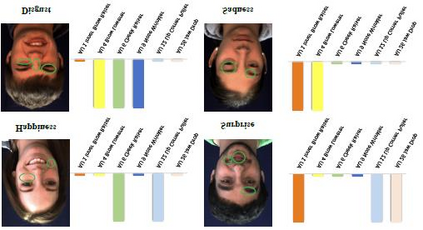

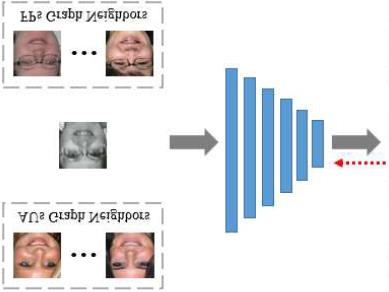

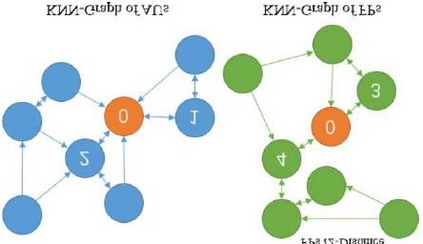

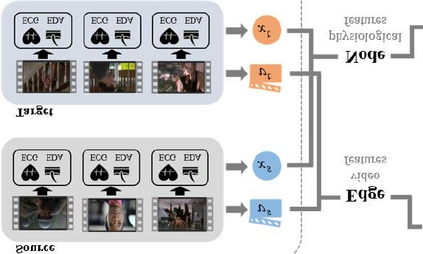

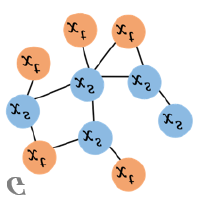

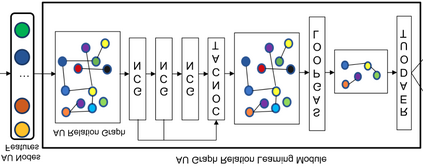

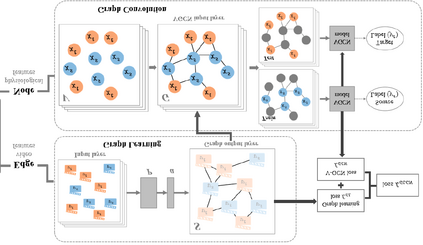

Facial affect analysis (FAA) using visual signals is important in human-computer interaction. Early methods focus on extracting appearance and geometry features associated with human affect. They ignore the latent semantic relationships among individual facial changes, which limits their performance and generalization. Recent work attempts to build graph-based representations that model these semantic relationships, and develops frameworks that leverage them for various FAA tasks. In this paper, we provide a comprehensive review of graph-based FAA, covering the evolution of algorithms and their applications. First, we introduce background knowledge on FAA, with particular attention to the role of graphs. We then discuss approaches widely used in the literature for graph-based affective representation and show a trend in graph construction. For relational reasoning in graph-based FAA, we categorize existing studies by whether they use traditional methods or deep models, with special emphasis on the latest graph neural networks. We also summarize performance comparisons of state-of-the-art graph-based FAA methods. Finally, we discuss the challenges and potential directions. To the best of our knowledge, this is the first survey of graph-based FAA methods. Our findings can serve as a reference for future research in this field.
