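Data Mining in Materials Science: Crystal Graph Neural Networks Explained, with a Code Walkthrough

©PaperWeekly Original · Author | Zhang Weiwei (张玮玮)

School | Northeastern University (master's student)

Research area | Emotion recognition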

Paper title:

Crystal Graph Neural Networks for Data Mining in Materials Science

Paper link:

https://storage.googleapis.com/rimcs_cgnn/cgnn_matsci_May_27_2019.pdf

Code link:

OQMD database (a fairly large database that provides a Python API and crystal-structure visualization, and can be loaded into MySQL): OQMD [2]

Preliminaries

1.2 Atomic Coordinates

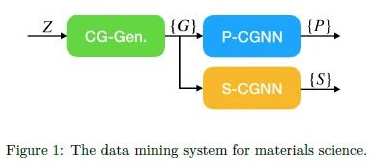
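Atomic positions in a crystal can be written either in Cartesian coordinates or in fractional coordinates, that is, as multiples of the three lattice vectors. The minimal NumPy sketch below converts between the two. It assumes the row convention of pymatgen's `Lattice.matrix` (each row is one lattice vector, so `cart = frac @ lattice`), and the lattice and positions are made-up illustrative values, not data from the article. The `get_nbrs` function later in this article performs the same Cartesian-to-fractional step: `crystal_xyz @ inv(crystal_lat.T).T` is equal to `crystal_xyz @ inv(crystal_lat)`.

```python
import numpy as np

# Illustrative (made-up) lattice: each ROW is one lattice vector a, b, c in
# angstroms, the same row convention as pymatgen's Lattice.matrix.
lattice = np.array([[4.0, 0.0, 0.0],
                    [0.0, 4.0, 0.0],
                    [2.0, 0.0, 5.0]])


def cart_to_frac(cart, lattice):
    """Cartesian (N, 3) -> fractional (N, 3), i.e. solve cart = frac @ lattice."""
    return cart @ np.linalg.inv(lattice)


def frac_to_cart(frac, lattice):
    """Fractional (N, 3) -> Cartesian (N, 3)."""
    return frac @ lattice


def wrap_into_cell(frac):
    """Map fractional coordinates into [0, 1) using periodicity."""
    return np.mod(frac, 1.0)


cart = np.array([[0.0, 0.0, 0.0],
                 [3.0, 2.0, 2.5],
                 [6.0, -1.0, 2.5]])   # the last site lies outside the cell

frac = cart_to_frac(cart, lattice)
wrapped = wrap_into_cell(frac)
print(frac)      # approximately [[0, 0, 0], [0.5, 0.5, 0.5], [1.25, -0.25, 0.5]]
print(wrapped)   # approximately [[0, 0, 0], [0.5, 0.5, 0.5], [0.25, 0.75, 0.5]]
```

Because fractional coordinates are periodic, wrapping them into [0, 1) maps every periodic image of a site back to the same position in the reference cell.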

Crystal Graph Neural Networks

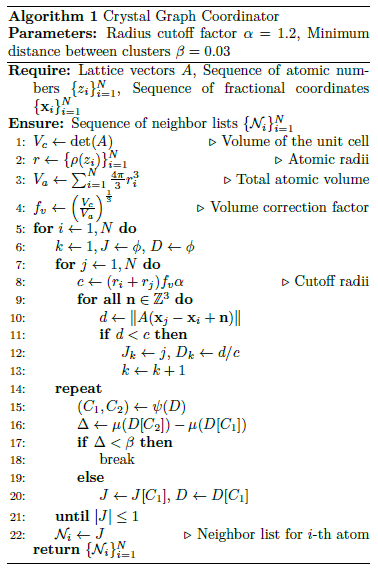

2.1 The Crystal Graph Coordinator

The source code is as follows:

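import numpy as np
from pymatgen.core.periodic_table import Element

# get_radius, get_nnc_loop, RADIUS_FACTOR and the pbc module are defined
# elsewhere in the author's code and are not reproduced in this article.

def get_neighbors(geom):
    # Look up each element from its atomic number. The OQMD database covers
    # 89 chemical elements: atomic numbers 1 to 83 and 89 to 94.
    elems = [Element.from_Z(z) for z in geom.atomic_numbers]
    # Atomic radii are taken from the pymatgen library
    radii = np.array([get_radius(e) for e in elems])
    # Pairwise sums of atomic radii: for a cell with 4 atoms this is a 4x4 matrix
    cutoff = radii[:, np.newaxis] + radii[np.newaxis, :]
    # Total volume of the atoms in the unit cell, treated as spheres
    vol_atom = (4 * np.pi / 3) * np.array([r**3 for r in radii]).sum()
    # Volume correction factor: cube root of (cell volume / total atomic volume)
    factor_vol = (geom.volume / vol_atom)**(1.0/3.0)
    factor = factor_vol * RADIUS_FACTOR
    # Scale the radius sums to obtain the cutoff radii
    cutoff *= factor
    # get_nbrs (given below) converts Cartesian coordinates to fractional
    # coordinates and collects, for each atom, the candidate neighbor atoms
    # that lie within the cutoff radius
    candidates = get_nbrs(geom.cart_coords, geom.lattice.matrix, cutoff)
    neighbors = []
    for j in range(len(candidates)):
        dists = []
        for nbr in candidates[j]:
            i = nbr[0]  # first element: index of the neighbor atom
            d = nbr[1]  # second element: distance to the neighbor
            r = nbr[2]  # third element: not used below
            # Normalize the distance by the pairwise cutoff so that it lies
            # in [0, 1] for the subsequent clustering
            dists.append(d / cutoff[j, i])
        X = np.array(dists).reshape((-1, 1))
        # Positions (within candidates[j]) of the nearest neighbors
        nnc_nbrs = get_nnc_loop(X)
        neighbors.append([candidates[j][i][0] for i in nnc_nbrs])
    return neighbors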

def get_nbrs(crystal_xyz, crystal_lat, R_max):

A = np.transpose(crystal_lat)

B = np.linalg.inv(A)

crystal_red = np.matmul(crystal_xyz, np.transpose(B))#笛卡尔坐标系转换为分数坐标系

crystal_nbrs = pbc.get_shortest_distances(crystal_red, A, R_max,

crdn_only=True)#得到当前原子小于截止半径范围内的候选邻居原子

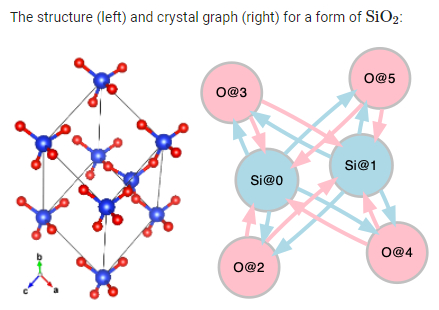

return crystal_nbrs得到的晶体图如下例所示:

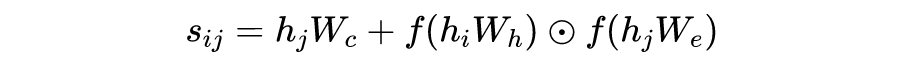

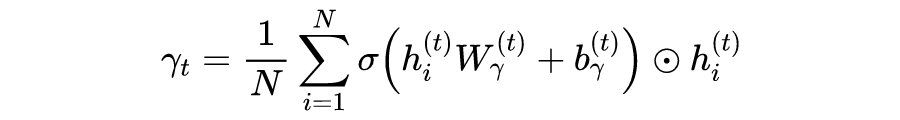

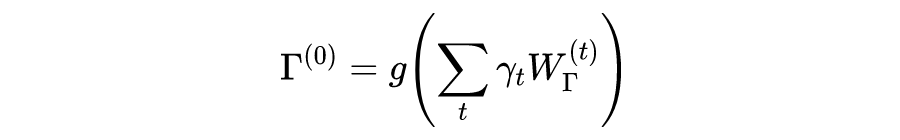

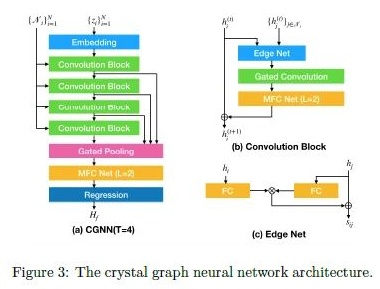
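The adaptive cutoff in `get_neighbors` can be reproduced in isolation: the cutoff for an atom pair is (r_i + r_j) × (V_cell / V_atoms)^(1/3) × RADIUS_FACTOR, where V_atoms is the summed volume of the atoms treated as spheres. The sketch below is a simplified stand-in, not the author's implementation: radii are passed in directly instead of coming from `get_radius`, the value of `RADIUS_FACTOR` (not given in the article) is a parameter, `pbc.get_shortest_distances` is replaced by a brute-force scan over nearby periodic images, and the clustering step `get_nnc_loop` is not reproduced. For each atom it returns the candidate neighbors together with their distances normalized by the pairwise cutoff, the quantity `get_neighbors` passes to its clustering step.

```python
import itertools

import numpy as np


def adaptive_cutoff(radii, cell_volume, radius_factor):
    """Pairwise cutoffs (r_i + r_j) * (V_cell / V_atoms)^(1/3) * radius_factor."""
    radii = np.asarray(radii, dtype=float)
    cutoff = radii[:, np.newaxis] + radii[np.newaxis, :]   # (N, N) radius sums
    vol_atoms = (4.0 * np.pi / 3.0) * np.sum(radii ** 3)    # atoms as spheres
    factor_vol = (cell_volume / vol_atoms) ** (1.0 / 3.0)   # volume correction
    return cutoff * factor_vol * radius_factor


def candidate_neighbors(cart, lattice, cutoff, reach=2):
    """Brute-force periodic neighbor search (sites assumed inside the cell).

    Scans all images within `reach` cells along each lattice vector; this is
    complete as long as every cutoff is at most `reach` times the smallest
    perpendicular width of the cell. For each atom j, returns a list of
    (i, d, d / cutoff[j, i]) for every image of atom i closer than
    cutoff[j, i], excluding atom j itself.
    """
    steps = range(-reach, reach + 1)
    shifts = np.array(list(itertools.product(steps, repeat=3)), dtype=float) @ lattice
    neighbors = []
    for j in range(len(cart)):
        found = []
        for i in range(len(cart)):
            # distances from atom j to every scanned periodic image of atom i
            dists = np.linalg.norm(cart[i] + shifts - cart[j], axis=1)
            for d in dists:
                if 1e-8 < d < cutoff[j, i]:   # d ~ 0 only for atom j itself
                    found.append((i, float(d), float(d / cutoff[j, i])))
        neighbors.append(found)
    return neighbors


# One atom of radius 1.0 in a simple cubic cell with a = 3.0 (angstroms)
lattice = 3.0 * np.eye(3)
cart = np.zeros((1, 3))
cutoff = adaptive_cutoff([1.0], np.linalg.det(lattice), radius_factor=1.0)
nbrs = candidate_neighbors(cart, lattice, cutoff)
print(cutoff[0, 0])   # about 3.72
print(len(nbrs[0]))   # 6 face-sharing images at distance 3.0
```

With `radius_factor=1.0` only the six face-sharing images fall inside the cutoff; a factor above roughly 1.14 would also admit the twelve edge-sharing images at about 4.24 Å.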

2.2 Crystal Graph Neural Networks

The author describes the model architectures in Architectures-CGNN (tony-y.github.io) [3].
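The neighbor lists produced by `get_neighbors` still have to be turned into the batched index arrays that `forward` reads (`input.edge_sources`, `input.edge_targets`, `input.graph_indices`, `input.node_counts`). The sketch below shows one plausible way to build them with NumPy. The function name `batch_graphs` is hypothetical, and the convention that the source is the central atom and the target is its neighbor is an assumption, so the author's data loader may differ. When several crystals are batched, each crystal's atom indices are shifted by the number of atoms that precede it.

```python
import numpy as np


def batch_graphs(neighbor_lists):
    """Flatten and batch several crystal graphs into index arrays (hypothetical).

    neighbor_lists: one entry per crystal; item j of an entry holds the
    neighbor atom indices of atom j, as returned by get_neighbors.
    Assumed convention: edge_sources[k] is the central atom and
    edge_targets[k] is one of its neighbors, both indexed within the batch.
    """
    edge_sources, edge_targets, graph_indices, node_counts = [], [], [], []
    offset = 0
    for g, neighbors in enumerate(neighbor_lists):
        n_nodes = len(neighbors)
        for j, nbrs in enumerate(neighbors):
            for i in nbrs:
                edge_sources.append(offset + j)
                edge_targets.append(offset + i)
        graph_indices.extend([g] * n_nodes)   # crystal that each node belongs to
        node_counts.append(n_nodes)
        offset += n_nodes                      # shift indices of the next crystal
    return (np.array(edge_sources, dtype=np.int64),
            np.array(edge_targets, dtype=np.int64),
            np.array(graph_indices, dtype=np.int64),
            np.array(node_counts, dtype=np.int64))


# Two toy crystals: a 2-atom cell in which each atom neighbors two periodic
# images of the other atom, and a 1-atom cell whose neighbor is its own image.
src, dst, gidx, counts = batch_graphs([[[1, 1], [0, 0]], [[0]]])
print(src)     # [0 0 1 1 2]
print(dst)     # [1 1 0 0 2]
print(gidx)    # [0 0 1]
print(counts)  # [2 1]
```

Repeated indices are kept on purpose: `get_neighbors` can return the same atom index several times when different periodic images of that atom are all nearest neighbors.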

import torch.nn as nn

# get_activation and the layer classes used below (NodeEmbedding,
# GatedGraphConvolution, EdgeNetwork, FastEdgeNetwork, AggregatedEdgeNetwork,
# PostconvolutionNetwork, GatedPooling, LinearPooling, GraphPooling,
# FullConnection, LinearRegression, Extension) are defined elsewhere in the
# author's code.


class GGNN(nn.Module):
    """
    Gated Graph Neural Networks

    Nodes -> Embedding -> Gated Convolutions -> Graph Pooling -> Full Connections -> Linear Regression
    """

    def __init__(self, n_node_feat, n_hidden_feat, n_graph_feat, n_conv, n_fc,
                 activation, use_batch_norm, node_activation, use_node_batch_norm,
                 edge_activation, use_edge_batch_norm, n_edge_net_feat, n_edge_net_layers,
                 edge_net_activation, use_edge_net_batch_norm, use_fast_edge_network,
                 fast_edge_network_type, use_aggregated_edge_network, edge_net_cardinality,
                 edge_net_width, use_edge_net_shortcut, n_postconv_net_layers,
                 postconv_net_activation, use_postconv_net_batch_norm, conv_type,
                 conv_bias=False, edge_net_bias=False, postconv_net_bias=False,
                 full_pooling=False, gated_pooling=False,
                 use_extension=False):
        super(GGNN, self).__init__()

        # Activation functions; six are supported: softplus, ssp (shifted
        # softplus), elu, relu, selu and celu
        act_fn = get_activation(activation)
        if node_activation is not None:
            node_act_fn = get_activation(node_activation)
        else:
            node_act_fn = None
        if edge_activation is not None:
            edge_act_fn = get_activation(edge_activation)
        else:
            edge_act_fn = None
        postconv_net_act_fn = get_activation(postconv_net_activation)

        # Edge networks: none, or one of three EdgeNet variants per convolution
        if n_edge_net_layers < 1:
            edge_nets = [None for i in range(n_conv)]
        else:
            edge_net_act_fn = get_activation(edge_net_activation)
            if use_aggregated_edge_network:
                # AggregatedEdgeNetwork
                edge_nets = [AggregatedEdgeNetwork(n_hidden_feat, n_edge_net_feat,
                                                   n_edge_net_layers, cardinality=edge_net_cardinality,
                                                   width=edge_net_width, activation=edge_net_act_fn,
                                                   use_batch_norm=use_edge_net_batch_norm,
                                                   bias=edge_net_bias,
                                                   use_shortcut=use_edge_net_shortcut)
                             for i in range(n_conv)]
            elif use_fast_edge_network:
                # FastEdgeNetwork
                edge_nets = [FastEdgeNetwork(n_hidden_feat, n_edge_net_feat,
                                             n_edge_net_layers, activation=edge_net_act_fn,
                                             net_type=fast_edge_network_type,
                                             use_batch_norm=use_edge_net_batch_norm,
                                             bias=edge_net_bias,
                                             use_shortcut=use_edge_net_shortcut)
                             for i in range(n_conv)]
            else:
                # Original EdgeNet layer
                edge_nets = [EdgeNetwork(n_hidden_feat, n_edge_net_feat,
                                         n_edge_net_layers, activation=edge_net_act_fn,
                                         use_batch_norm=use_edge_net_batch_norm,
                                         bias=edge_net_bias,
                                         use_shortcut=use_edge_net_shortcut)
                             for i in range(n_conv)]

        # Optional post-convolution networks, one per convolution
        if n_postconv_net_layers < 1:
            postconv_nets = [None for i in range(n_conv)]
        else:
            postconv_nets = [PostconvolutionNetwork(n_hidden_feat, n_hidden_feat,
                                                    n_postconv_net_layers,
                                                    activation=postconv_net_act_fn,
                                                    use_batch_norm=use_postconv_net_batch_norm,
                                                    bias=postconv_net_bias)
                             for i in range(n_conv)]

        # Node embedding layer (a linear layer)
        self.embedding = NodeEmbedding(n_node_feat, n_hidden_feat)

        # Gated convolution layers
        self.convs = [GatedGraphConvolution(n_hidden_feat, n_hidden_feat,
                                            node_activation=node_act_fn,
                                            edge_activation=edge_act_fn,
                                            use_node_batch_norm=use_node_batch_norm,
                                            use_edge_batch_norm=use_edge_batch_norm,
                                            bias=conv_bias,
                                            conv_type=conv_type,
                                            edge_network=edge_nets[i],
                                            postconv_network=postconv_nets[i])
                      for i in range(n_conv)]
        self.convs = nn.ModuleList(self.convs)

        # Pre-pooling (gated or linear): after every convolution when
        # full_pooling is set, otherwise only after the last one
        if full_pooling:
            n_steps = n_conv
            if gated_pooling:
                self.pre_poolings = [GatedPooling(n_hidden_feat)
                                     for _ in range(n_conv)]
            else:
                self.pre_poolings = [LinearPooling(n_hidden_feat)
                                     for _ in range(n_conv)]
        else:
            n_steps = 1
            self.pre_poolings = [None for _ in range(n_conv-1)]
            if gated_pooling:
                self.pre_poolings.append(GatedPooling(n_hidden_feat))
            else:
                self.pre_poolings.append(LinearPooling(n_hidden_feat))
        self.pre_poolings = nn.ModuleList(self.pre_poolings)

        # Graph pooling layer: combines the n_steps pre-pooled graph vectors
        self.pooling = GraphPooling(n_hidden_feat, n_steps, activation=act_fn,
                                    use_batch_norm=use_batch_norm)

        # Multi-layer fully connected network
        self.fcs = [FullConnection(n_hidden_feat, n_graph_feat,
                                   activation=act_fn, use_batch_norm=use_batch_norm)]
        self.fcs += [FullConnection(n_graph_feat, n_graph_feat,
                                    activation=act_fn, use_batch_norm=use_batch_norm)
                     for i in range(n_fc-1)]
        self.fcs = nn.ModuleList(self.fcs)

        # Linear regression output layer
        self.regression = LinearRegression(n_graph_feat)

        if use_extension:
            self.extension = Extension()
        else:
            self.extension = None

    def forward(self, input):
        # Pipeline: Nodes -> Embedding -> Gated Convolutions -> Graph Pooling
        #           -> Full Connections -> Linear Regression
        x = self.embedding(input.nodes)
        y = []
        for conv, pre_pooling in zip(self.convs, self.pre_poolings):
            x = conv(x, input.edge_sources, input.edge_targets)
            if pre_pooling is not None:
                # Per-graph readout after this convolution step
                y.append(pre_pooling(x, input.graph_indices, input.node_counts))
        x = self.pooling(y)
        for fc in self.fcs:
            x = fc(x)
        x = self.regression(x)
        if self.extension is not None:
            x = self.extension(x, input.node_counts)
        return x
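Inside the loop of `forward`, each convolution receives only the node features `x` and the index arrays `input.edge_sources` and `input.edge_targets`; no bond lengths are passed in. To illustrate what one gated update can look like under that constraint, the NumPy sketch below borrows the gating form of the CGCNN convolution from [1] (a sigmoid gate multiplied by a softplus core, summed over a node's edges on top of a residual connection) and drops the bond features that [1] uses. It is an illustrative stand-in, not the author's `GatedGraphConvolution`, whose `conv_type` variants, edge networks, batch normalization and post-convolution networks are not reproduced; the message direction (from `edge_targets` to `edge_sources`) is also an assumption.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def softplus(x):
    # numerically stable log(1 + exp(x))
    return np.logaddexp(0.0, x)


def gated_conv(h, edge_sources, edge_targets, W_gate, b_gate, W_core, b_core):
    """One CGCNN-style gated graph convolution step (illustrative sketch).

    h: (n_nodes, F) node features; W_*: (2F, F); b_*: (F,).
    Edge k sends a message to node edge_sources[k] built from
    z = [h_source, h_target] (assumed convention).
    """
    z = np.concatenate([h[edge_sources], h[edge_targets]], axis=1)   # (E, 2F)
    messages = sigmoid(z @ W_gate + b_gate) * softplus(z @ W_core + b_core)
    out = h.copy()
    np.add.at(out, edge_sources, messages)   # residual + sum over each node's edges
    return out


rng = np.random.default_rng(0)
F = 4
h = rng.normal(size=(3, F))
src = np.array([0, 0, 1, 1, 2])
dst = np.array([1, 1, 0, 0, 2])
params = [rng.normal(size=(2 * F, F)), np.zeros(F),
          rng.normal(size=(2 * F, F)), np.zeros(F)]
h_new = gated_conv(h, src, dst, *params)
print(h_new.shape)   # (3, 4)
```

`np.add.at` is used instead of `out[edge_sources] += messages` because the latter applies only one update per repeated index, while a node usually has several edges.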

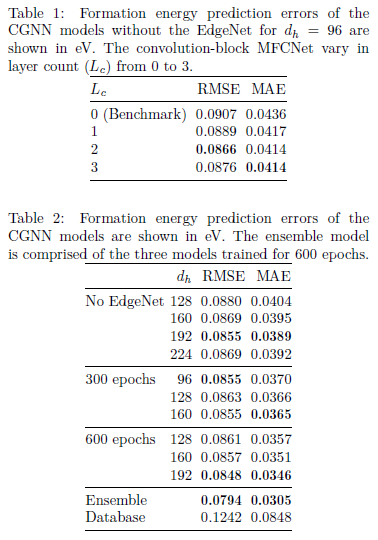

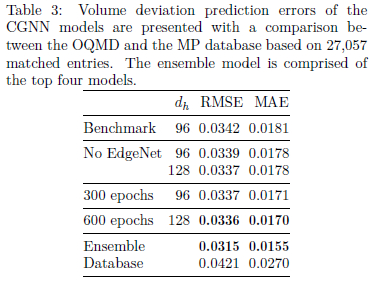

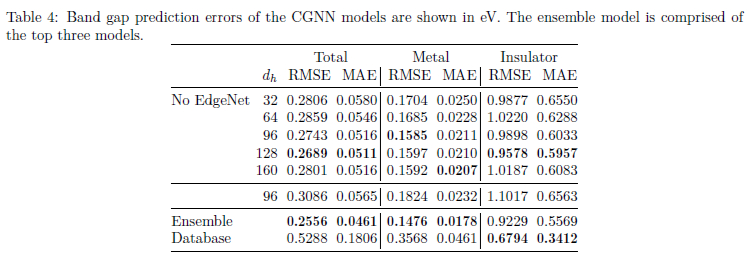

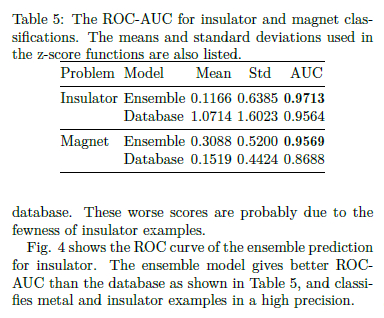

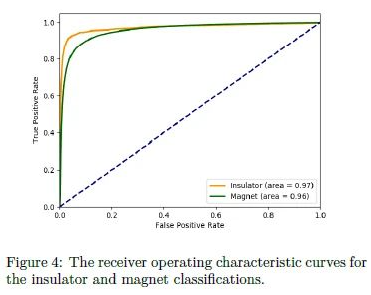
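In `forward`, each `pre_pooling` layer reduces the node features to one vector per crystal using `input.graph_indices` (the crystal each node belongs to) and `input.node_counts`, and `self.pooling` then combines these readouts. The two NumPy functions below are hypothetical stand-ins for such readouts: a gated sum, in which a learned sigmoid gate weights a linear transform of each node before summing per crystal, and a plain per-crystal mean. They are not the author's `GatedPooling`, `LinearPooling` or `GraphPooling` classes, whose exact formulas are not shown in the article.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def gated_pool(h, graph_indices, n_graphs, W_gate, b_gate, W_feat, b_feat):
    """Gated sum readout: for each graph G, sum over i in G of
    sigmoid(h_i W_gate + b_gate) * (h_i W_feat + b_feat)."""
    weighted = sigmoid(h @ W_gate + b_gate) * (h @ W_feat + b_feat)   # (n_nodes, F)
    out = np.zeros((n_graphs, weighted.shape[1]))
    np.add.at(out, graph_indices, weighted)   # segment sum by graph index
    return out


def mean_pool(h, graph_indices, node_counts):
    """Plain average of node features per graph."""
    out = np.zeros((len(node_counts), h.shape[1]))
    np.add.at(out, graph_indices, h)
    return out / np.asarray(node_counts, dtype=float)[:, np.newaxis]


h = np.array([[1.0, 2.0], [3.0, 4.0], [10.0, 0.0]])
graph_indices = np.array([0, 0, 1])   # nodes 0, 1 -> crystal 0; node 2 -> crystal 1
node_counts = np.array([2, 1])
print(mean_pool(h, graph_indices, node_counts))   # per-graph means: [[2, 3], [10, 0]]

# With zero gate weights every gate equals sigmoid(0) = 0.5, so with identity
# feature weights the gated readout is half of each crystal's feature sum.
g = gated_pool(h, graph_indices, 2, np.zeros((2, 2)), np.zeros(2), np.eye(2), np.zeros(2))
print(g)   # per-graph values: [[2, 3], [5, 0]]
```

The gate lets the model learn how much each atom contributes to the crystal-level vector, whereas the mean treats all atoms equally.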

Experimental Results

Results and Discussion

References

[1] Xie T, Grossman J C. Crystal graph convolutional neural networks for an accurate and interpretable prediction of material properties[J]. Physical Review Letters, 2018, 120(14): 145301.


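#Submission Channel#

Let more people see your paper

How can more high-quality content reach its readers by a shorter path, and how can readers spend less effort finding it? The answer is: people you don't know.

There are always people you don't know who know what you want to know. PaperWeekly may serve as a bridge, bringing together scholars and ideas from different backgrounds and fields so that they spark new possibilities.

PaperWeekly encourages university labs and individuals to share all kinds of high-quality content on our platform, whether interpretations of the latest papers, learning notes, or technical deep dives. We have only one goal: to let knowledge truly flow.

📝 Submission guidelines:

• The submission must be the author's original work and must include the author's details (name + school/employer + degree/position + research area)

• If the article has been published before, please tell us when submitting and include links to all previous publications

• PaperWeekly assumes every article is a first publication and marks each one with an "Original" label

📬 Submission email:

• Submission email: hr@paperweekly.site

• Please send all figures for the article as separate attachments

• Please leave an instant contact method (WeChat or phone number) so that we can reach you while editing and publishing

🔍

You can now also find us on Zhihu

Search for "PaperWeekly" on the Zhihu home page

Click "Follow" to subscribe to our column

About PaperWeekly

PaperWeekly is an academic platform that recommends, interprets, discusses, and reports on cutting-edge AI research. If you research or work in AI, tap "交流群" (discussion group) in the official account's menu, and our assistant will add you to a PaperWeekly discussion group.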