Optimizers that, over the years, "claimed" to surpass Adam


1.1 AdaBound, proposed at ICLR 2019 by an undergraduate at Peking University, China

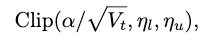

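The two clipping bounds (written $\eta_l$ and $\eta_u$ in the AdaBound paper) are not constants but functions of the time step $t$. The upper bound $\eta_u$ starts at $\infty$ and, as $t$ grows, also gradually converges to the same final step size that the lower bound approaches.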
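A minimal sketch of such time-dependent bounds, in plain Python. The schedule below is one common choice of this shape and should be read as an assumption, not as the definitive AdaBound formula; the names `adabound_bounds`, `clip_step_size`, `final_lr`, and `gamma` are illustrative.

```python
def adabound_bounds(step, final_lr=0.1, gamma=1e-3):
    """Return (lower, upper) bounds on the per-parameter step size.

    Assumed schedule: the lower bound rises from near 0 and the upper bound
    falls from a very large value; both approach final_lr as step grows.
    """
    if step < 1:
        raise ValueError("step counts from 1")
    lower = final_lr * (1.0 - 1.0 / (gamma * step + 1.0))
    upper = final_lr * (1.0 + 1.0 / (gamma * step))
    return lower, upper


def clip_step_size(raw_step_size, step, final_lr=0.1, gamma=1e-3):
    """Clamp an Adam-style step size into the bounds for this step."""
    lower, upper = adabound_bounds(step, final_lr, gamma)
    return min(max(raw_step_size, lower), upper)
```

Early in training the bounds are so loose that the update behaves like Adam; late in training they pinch together, so every parameter moves with nearly the same step size, as in SGD.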

1.2 "Rectified Adam": RAdam

1.3 AdaMod, with automatic warmup

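AdaMod introduces a new hyperparameter, $\beta_3$, which describes how long the learning-rate memory is during training; the exponential moving average then spans roughly $1/(1-\beta_3)$ steps. From $\beta_3$, the smoothed value of the current step's learning rate can be computed, and combining it with this long-term memory gives the learning rate actually used, which avoids extreme learning rates.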
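The smoothing step can be sketched as follows, assuming the Adam-style step sizes have already been computed. The function name `adamod_step_sizes` and the zero initial memory are assumptions made for illustration.

```python
def adamod_step_sizes(raw_step_sizes, beta3=0.999):
    """Bound each raw step size by an EMA of past step sizes.

    raw_step_sizes: Adam-style step sizes for one parameter, one per step.
    beta3: memory length; the EMA spans roughly 1 / (1 - beta3) steps.
    """
    memory = 0.0  # long-term memory of step sizes (assumed to start at 0)
    bounded = []
    for eta in raw_step_sizes:
        memory = beta3 * memory + (1.0 - beta3) * eta
        # Never exceed the smoothed value, so a sudden spike is damped.
        bounded.append(min(eta, memory))
    return bounded
```

Because the memory starts at zero in this sketch, the first steps are small and grow gradually, which is the automatic warmup effect; a later spike in the raw step size is capped by the memory instead of being applied in full.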

1.4 AdaFactor, which saves more GPU memory

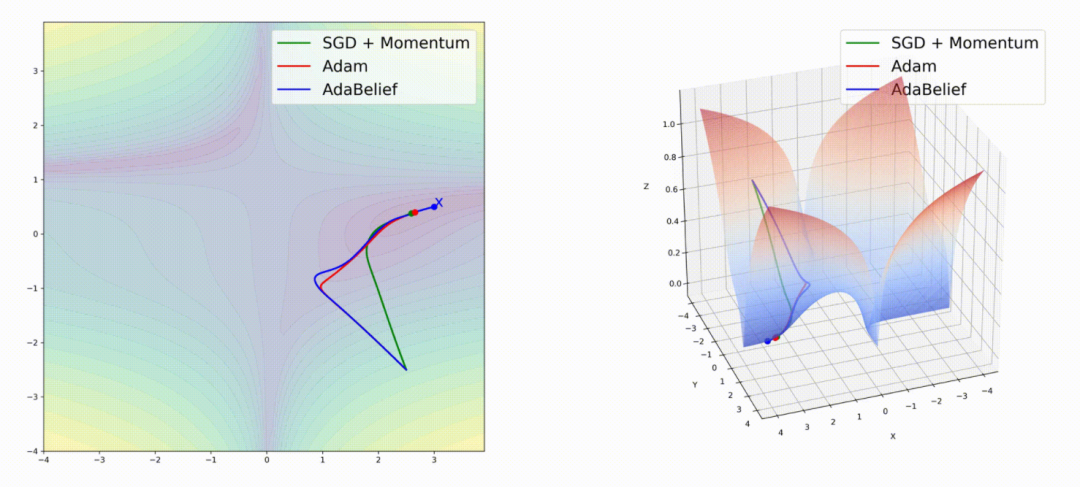

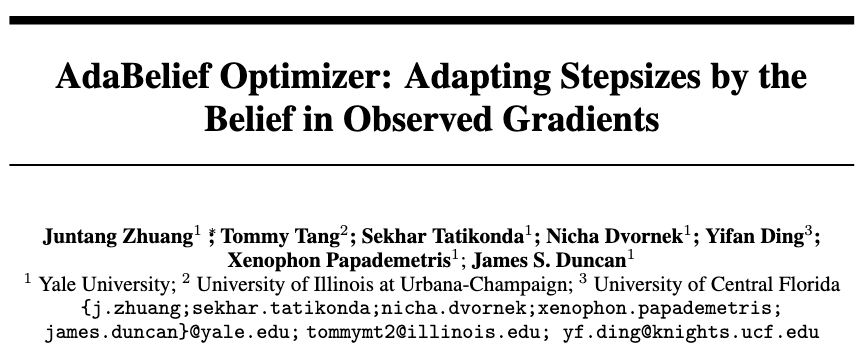

Now let's look at the latest one: AdaBelief

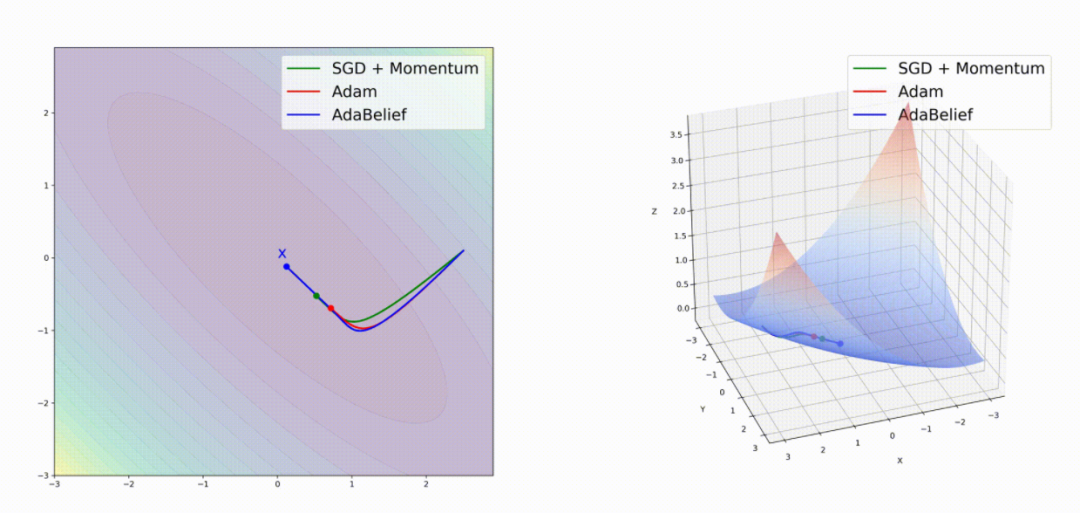

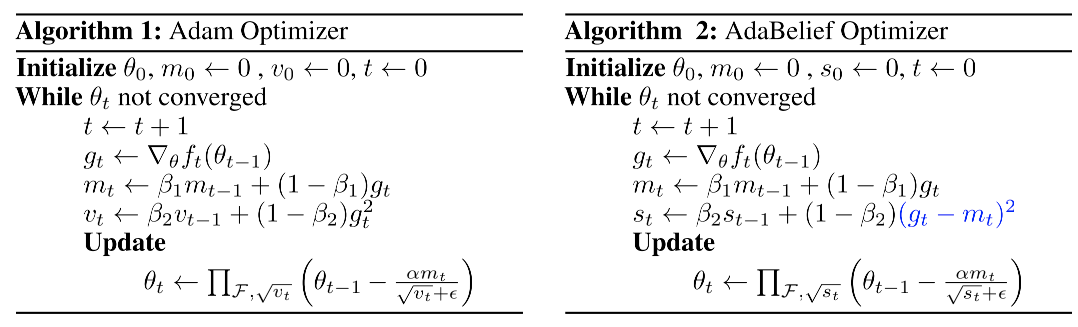

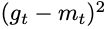

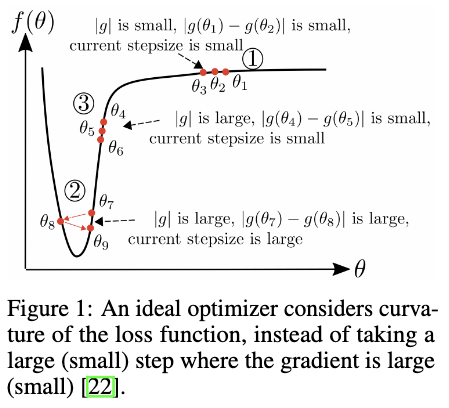

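Adam's update direction is $m_t/\sqrt{v_t}$, whereas AdaBelief's update direction is $m_t/\sqrt{s_t}$. The authors vividly liken $1/\sqrt{s_t}$ to the "belief" in the current gradient direction. $v_t$ and $s_t$ differ in that they are the exponential moving averages (EMA) of $g_t^2$ and $(g_t - m_t)^2$ respectively, where $m_t$ is the predicted gradient at time $t$. At each update the observed gradient is compared with this prediction: if the observed gradient is very close to the prediction, the update takes a larger step; otherwise it takes a smaller step.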

Original Adam:

    # Decay the first and second moment running average coefficient
    exp_avg.mul_(beta1).add_(grad, alpha=1 - beta1)
    exp_avg_sq.mul_(beta2).addcmul_(grad, grad, value=1 - beta2)

AdaBelief code:

    # Update first and second moment running average
    exp_avg.mul_(beta1).add_(grad, alpha=1 - beta1)
    # Deviation of the observed gradient from its prediction (the EMA)
    grad_residual = grad - exp_avg
    exp_avg_var.mul_(beta2).addcmul_(grad_residual, grad_residual, value=1 - beta2)

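When the gradient is large but changes little from step to step, Adam's $v_t$ is large, so the denominator is large and the update step ends up very small. For AdaBelief, $s_t$ is small in this situation, so the denominator is small and the update step is large, which is the desired behaviour.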
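The contrast can be checked numerically with a scalar sketch that runs both second-moment updates on the same gradient sequence. This is a simplified illustration, not the reference implementation: it assumes the usual bias correction, adds `eps` only to the denominator, and the function name `adam_vs_adabelief_step` is hypothetical.

```python
import math


def adam_vs_adabelief_step(grads, beta1=0.9, beta2=0.999, eps=1e-8):
    """Return (adam_step, adabelief_step) after consuming a list of scalar grads.

    Both values are the update direction m / sqrt(second moment),
    i.e. the step before multiplying by the learning rate.
    """
    if not grads:
        raise ValueError("need at least one gradient")
    m = v = s = 0.0
    for g in grads:
        m = beta1 * m + (1 - beta1) * g              # predicted gradient
        v = beta2 * v + (1 - beta2) * g * g          # Adam: EMA of g^2
        s = beta2 * s + (1 - beta2) * (g - m) ** 2   # AdaBelief: EMA of (g - m)^2
    t = len(grads)
    m_hat = m / (1 - beta1 ** t)
    bias2 = 1 - beta2 ** t
    adam_step = m_hat / (math.sqrt(v / bias2) + eps)
    adabelief_step = m_hat / (math.sqrt(s / bias2) + eps)
    return adam_step, adabelief_step


# A large gradient that never changes: large magnitude, low variance.
adam_step, adabelief_step = adam_vs_adabelief_step([10.0] * 200)
```

With a constant gradient, Adam's normalised step stays close to 1 regardless of the gradient's size, while the AdaBelief step is noticeably larger because the observed gradient keeps matching its prediction.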

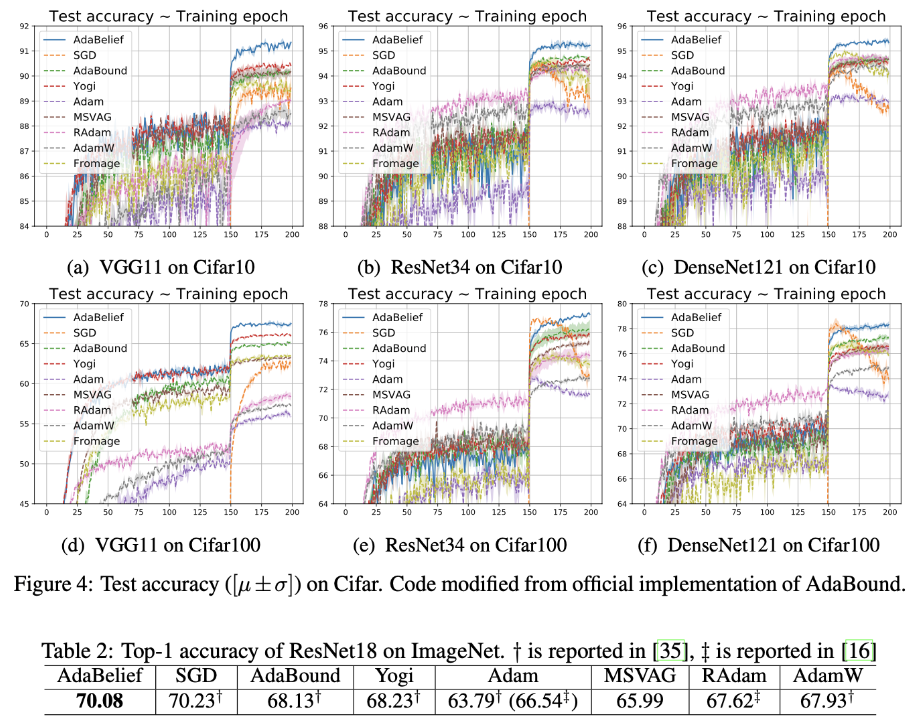

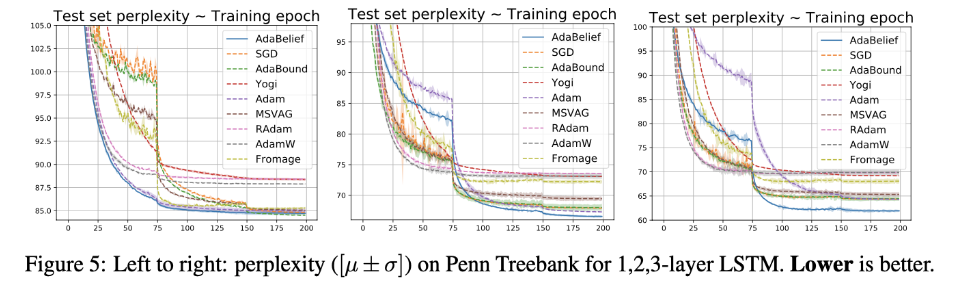

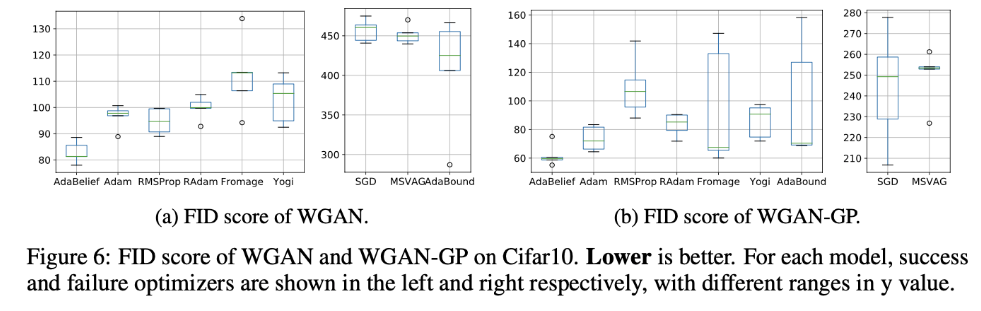

Experiments

Discussion

[...] is more the prevailing attitude; either way, people just keep using SGD/Adam.
