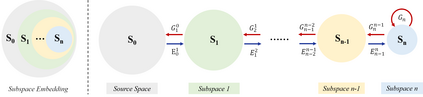

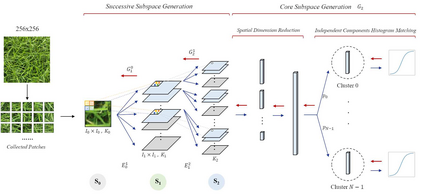

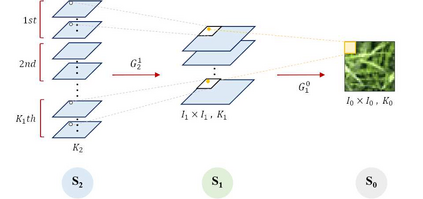

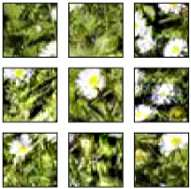

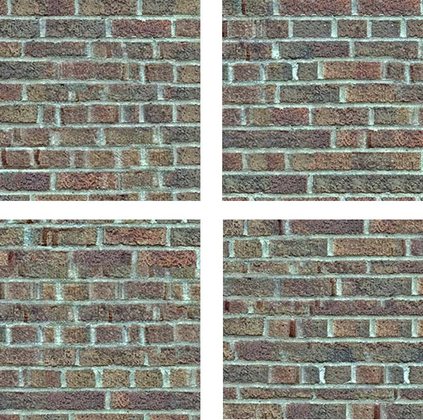

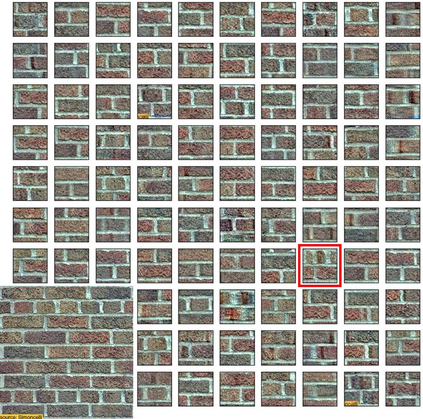

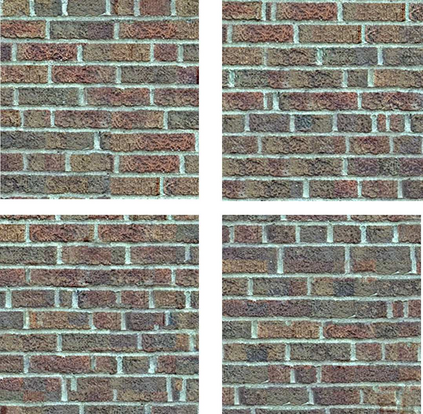

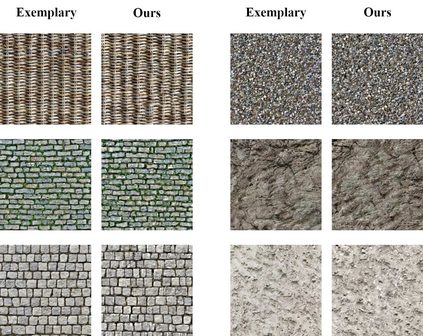

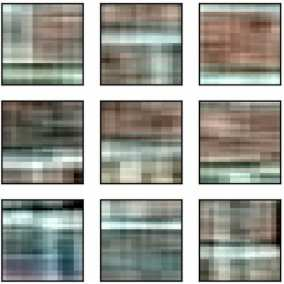

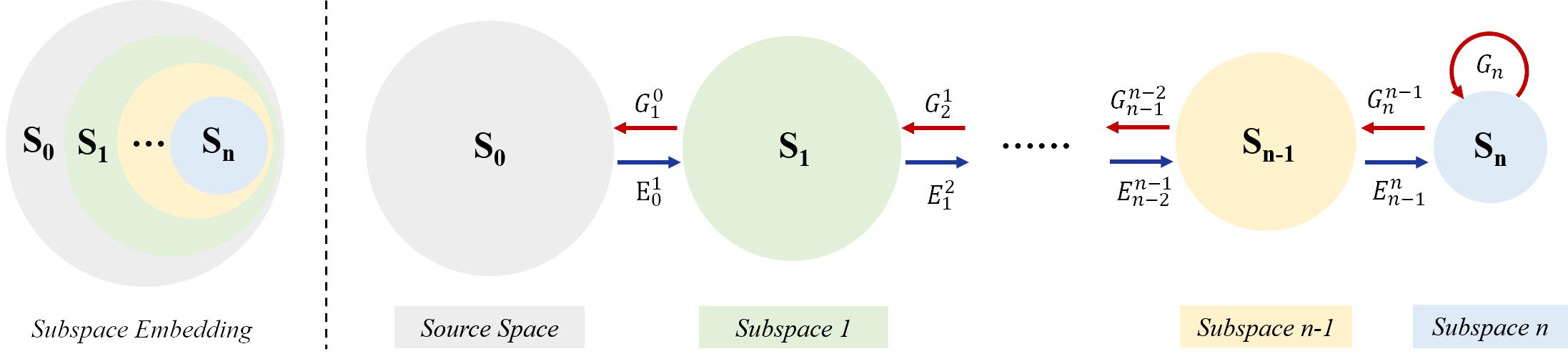

An explainable, efficient, and lightweight method for texture generation, called TGHop (an acronym of Texture Generation PixelHop), is proposed in this work. Although deep neural networks can synthesize visually pleasant texture, the associated models are large, difficult to explain in theory, and computationally expensive to train. In contrast, TGHop has a small model size, is mathematically transparent and efficient in both training and inference, and is able to generate high-quality texture. Given an exemplary texture, TGHop first crops many sample patches out of it to form a collection called the source. Then, it analyzes the pixel statistics of samples from the source and obtains a sequence of fine-to-coarse subspaces for these patches by using the PixelHop++ framework. To generate texture patches with TGHop, we begin with the coarsest subspace, which is called the core, and attempt to generate samples in each subspace by following the distribution of real samples. Finally, texture patches are stitched to form large texture images. Experimental results demonstrate that TGHop can generate texture images of superior quality with a small model size and at a fast speed.
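
The pipeline outlined in the abstract (crop patches, find a coarse subspace, sample in it, stitch) can be illustrated with a deliberately simplified sketch. This is not the TGHop implementation: a single PCA projection is swapped in for the PixelHop++ sequence of fine-to-coarse subspaces, a Gaussian fit is assumed as the way to "follow the distribution of real samples", and stitching is plain tiling with no seam handling. All function names and parameters below are hypothetical.

```python
import numpy as np


def crop_patches(texture, patch_size, num_patches, rng):
    """Crop random square patches from a 2-D grayscale exemplar (the "source")."""
    h, w = texture.shape
    ys = rng.integers(0, h - patch_size + 1, size=num_patches)
    xs = rng.integers(0, w - patch_size + 1, size=num_patches)
    return np.stack(
        [texture[y:y + patch_size, x:x + patch_size] for y, x in zip(ys, xs)]
    )


def fit_core(patches, core_dim):
    """Project flattened patches onto a PCA subspace.

    Assumption: one PCA stage stands in for the coarsest subspace ("core");
    the paper obtains its subspaces with the PixelHop++ framework instead.
    """
    flat = patches.reshape(len(patches), -1).astype(np.float64)
    mean = flat.mean(axis=0)
    # Rows of vt are the principal directions of the centered patches.
    _, _, vt = np.linalg.svd(flat - mean, full_matrices=False)
    basis = vt[:core_dim]
    coeffs = (flat - mean) @ basis.T
    return mean, basis, coeffs


def sample_patches(mean, basis, coeffs, num_samples, patch_size, rng):
    """Sample core coefficients from a Gaussian fitted to the real ones,
    then map them back to pixel space (Gaussian model is an assumption)."""
    mu = coeffs.mean(axis=0)
    cov = np.atleast_2d(np.cov(coeffs, rowvar=False))
    new_coeffs = rng.multivariate_normal(mu, cov, size=num_samples)
    flat = new_coeffs @ basis + mean
    return flat.reshape(num_samples, patch_size, patch_size)


def stitch(patches, grid_rows, grid_cols):
    """Tile patches row by row into one image (no overlap or blending)."""
    p = patches.shape[1]
    grid = patches[: grid_rows * grid_cols].reshape(grid_rows, grid_cols, p, p)
    return grid.transpose(0, 2, 1, 3).reshape(grid_rows * p, grid_cols * p)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    exemplar = rng.random((64, 64))  # placeholder for a real texture image
    source = crop_patches(exemplar, patch_size=8, num_patches=500, rng=rng)
    mean, basis, coeffs = fit_core(source, core_dim=8)
    generated = sample_patches(mean, basis, coeffs, 16, 8, rng)
    image = stitch(generated, grid_rows=4, grid_cols=4)
    print(image.shape)
```

A single linear subspace keeps the sketch short; it only reproduces the coarse statistics of the patches, which is why the method described above refines samples through successively finer subspaces rather than stopping at the core.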
