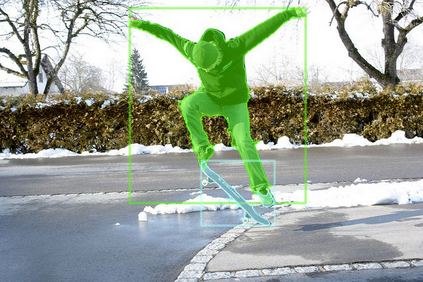

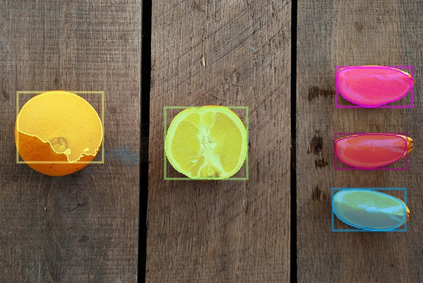

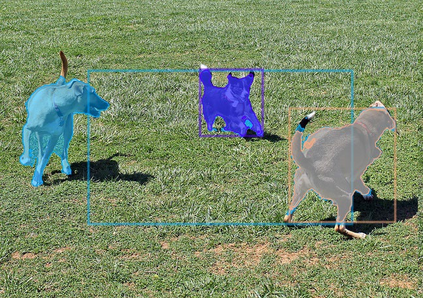

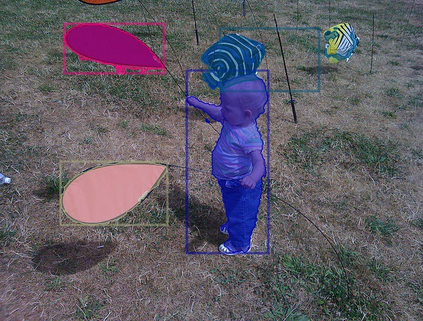

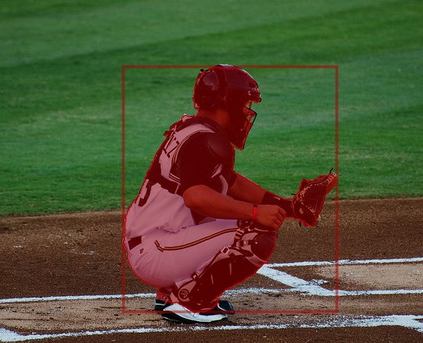

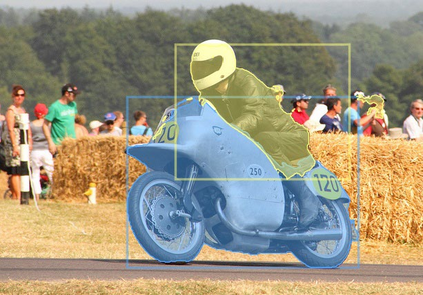

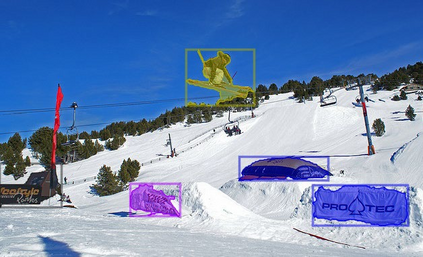

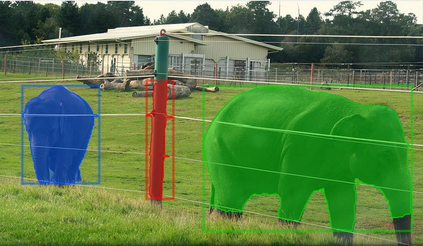

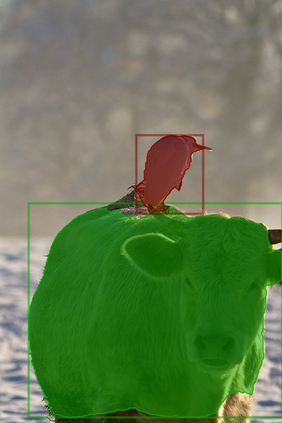

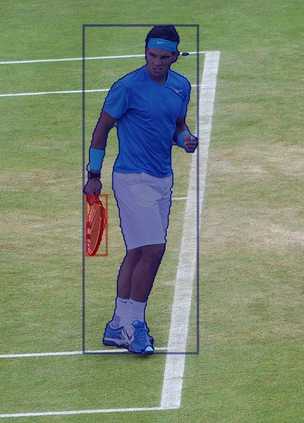

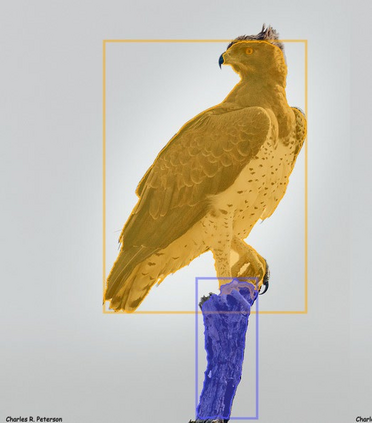

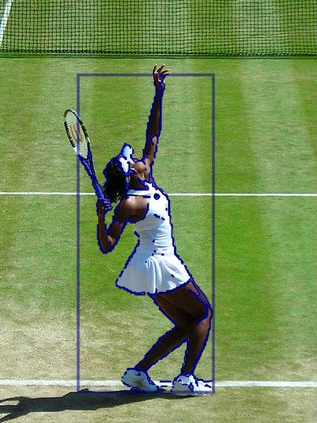

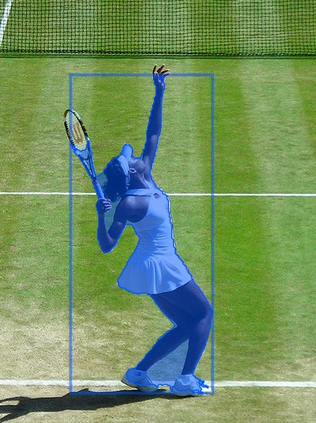

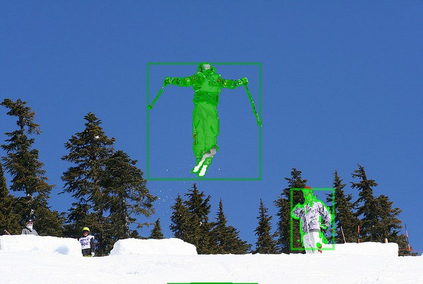

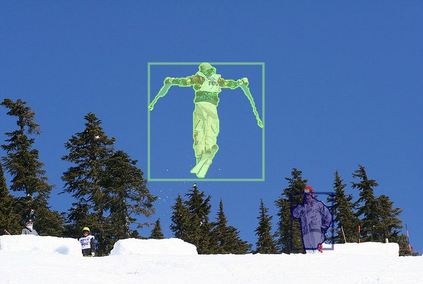

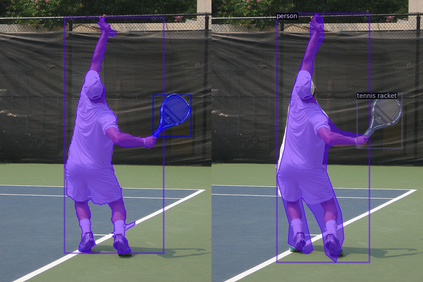

Instance segmentation is a fundamental vision task that aims to recognize and segment each object in an image. However, learning it requires costly annotations such as bounding boxes and segmentation masks. In this work, we propose a fully unsupervised learning method that learns class-agnostic instance segmentation without any annotations. We present FreeSOLO, a self-supervised instance segmentation framework built on top of the simple instance segmentation method SOLO. Our method also presents a novel localization-aware pre-training framework, in which objects can be discovered from complicated scenes in an unsupervised manner. FreeSOLO achieves 9.8% AP$_{50}$ on the challenging COCO dataset, outperforming even several segmentation proposal methods that use manual annotations. For the first time, we successfully demonstrate unsupervised class-agnostic instance segmentation. FreeSOLO's box localization significantly outperforms state-of-the-art unsupervised object detection/discovery methods, with roughly 100% relative improvement in COCO AP. FreeSOLO also proves to be a strong pre-training method, outperforming state-of-the-art self-supervised pre-training methods by +9.8% AP when fine-tuning instance segmentation with only 5% of COCO masks. Code is available at: github.com/NVlabs/FreeSOLO
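
For readers unfamiliar with the AP$_{50}$ metric quoted above: a predicted mask counts as a true positive when its mask IoU with a not-yet-matched ground-truth mask is at least 0.5, and in the class-agnostic setting category labels are ignored. The sketch below illustrates this on a single image. It is a simplified illustration only, not the official COCO evaluation (pycocotools, which also handles crowd regions, area ranges, a limit of 100 detections per image, and pooling across the whole dataset) and not FreeSOLO's evaluation code; the function names `mask_iou` and `class_agnostic_ap50` are hypothetical.

```python
import numpy as np


def mask_iou(a: np.ndarray, b: np.ndarray) -> float:
    """IoU between two boolean masks of identical shape."""
    inter = np.logical_and(a, b).sum()
    union = np.logical_or(a, b).sum()
    return float(inter) / float(union) if union > 0 else 0.0


def class_agnostic_ap50(pred_masks, scores, gt_masks, iou_thr=0.5):
    """Simplified single-image AP at IoU 0.5 that ignores class labels.

    Predictions are matched greedily in descending score order, and each
    ground-truth mask can be matched at most once (COCO-style). Precision
    is interpolated at 101 evenly spaced recall points, as COCO does.
    """
    if len(gt_masks) == 0:
        return 0.0
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    matched = np.zeros(len(gt_masks), dtype=bool)
    tp = np.zeros(len(order))
    for rank, i in enumerate(order):
        # Find the best unmatched ground truth with IoU >= threshold.
        best_iou, best_j = iou_thr, -1
        for j, gt in enumerate(gt_masks):
            if matched[j]:
                continue
            iou = mask_iou(pred_masks[i], gt)
            if iou >= best_iou:
                best_iou, best_j = iou, j
        if best_j >= 0:
            matched[best_j] = True
            tp[rank] = 1.0
    cum_tp = np.cumsum(tp)
    recall = cum_tp / len(gt_masks)
    precision = cum_tp / np.arange(1, len(order) + 1)
    # Make precision monotonically non-increasing (precision envelope).
    for k in range(len(precision) - 2, -1, -1):
        precision[k] = max(precision[k], precision[k + 1])
    # Average the interpolated precision over 101 recall thresholds.
    recall_points = np.linspace(0.0, 1.0, 101)
    ap = 0.0
    for r in recall_points:
        idx = np.searchsorted(recall, r, side="left")
        ap += precision[idx] if idx < len(precision) else 0.0
    return ap / len(recall_points)
```

For example, with two ground-truth objects and a single correct prediction, recall tops out at 0.5, so the sketch returns an AP$_{50}$ of about 0.5; a duplicate prediction of an already-matched object is counted as a false positive rather than a second hit.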

翻译:试样分解是一个基本的视觉任务, 目的是在图像中辨别和分割每个对象。 但是, 它需要昂贵的注释, 如捆绑框和分解面面来学习。 在这项工作中, 我们提出一种完全不受监督的学习方法, 学习类中不附带任何注释的分解。 我们提出FreeSOLO, 这是在简单例分解方法SOLO之上建立的自我监督的分解框架。 我们的方法还提出了一个全新的本地化前训练框架, 可以在复杂场景中以不受监督的方式发现物体。 FreeSOLO在具有挑战性的COCO数据集上实现了9.8% AP ⁇ 50} 的优势, 这甚至超越了使用手动说明的一些分解建议方法。 我们第一次展示了未经监督的分类分解框架。 FreeSOLO的框地方化大大超越了最先进的、 不受监督的物体检测/分解方法, 其中COCO AP. SOLOLO 进一步展示了强的优势性, 在进行前常规化时, AS- OSADR 5 的自我调整方法仅能 。