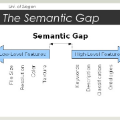

Building shopping product collections has primarily been a human job. Through manual curation, experts gather related but diverse products that share a common shopping intent and are effective when displayed together, e.g., backpacks, laptop bags, and messenger bags as gifts for freshmen. Automatically constructing a collection requires an ML system to learn the complex relationship between a customer's intent and a product's attributes. The problem is difficult because of 1) long and complicated intent sentences, 2) rich and diverse product attributes, and 3) a large semantic gap between the two. In this paper, we use a pretrained language model (PLM) that leverages the textual attributes of web-scale products to build intent-based product collections. Specifically, we train a BERT model with a triplet loss, using an intent sentence as the anchor and its corresponding products as positive examples. We further improve the model's performance with search-based negative sampling and category-wise positive pair augmentation. In offline evaluations, our model significantly outperforms the search-based baseline model for intent-based product matching. Furthermore, online experiments on our e-commerce platform show that the PLM-based method constructs product collections with higher click-through rate (CTR), conversion rate (CVR), and order diversity than expert-crafted collections.
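The triplet-loss training objective described above can be sketched as follows. This is a minimal, hedged illustration rather than the authors' implementation: it assumes intent and product embeddings have already been produced by the encoder (BERT in the paper), and it uses Euclidean distance and a margin of 1.0 purely as illustrative choices. The `search_based_negatives` helper is a hypothetical reading of "search-based negative sampling" in which products a search engine returns for the intent, but which are not labeled positives, serve as hard negatives.

```python
import math
from typing import List, Sequence, Set

Vector = Sequence[float]


def euclidean(u: Vector, v: Vector) -> float:
    """Euclidean distance between two embedding vectors of equal length."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def triplet_loss(anchor: Vector, positive: Vector, negative: Vector,
                 margin: float = 1.0) -> float:
    """Standard hinge-style triplet loss: max(0, d(a, p) - d(a, n) + margin).

    anchor   -- embedding of the intent sentence
    positive -- embedding of a product belonging to the intent's collection
    negative -- embedding of a product that does not belong to it
    The margin value is an illustrative assumption, not taken from the paper.
    """
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)


def search_based_negatives(search_results: Sequence[str], positives: Set[str],
                           k: int) -> List[str]:
    """Hypothetical hard-negative sampler (an assumption, not the paper's code).

    Keeps, in ranked order, up to k product IDs returned by a search for the
    intent that are NOT labeled as positives for that intent.
    """
    return [pid for pid in search_results if pid not in positives][:k]


if __name__ == "__main__":
    intent = [0.0, 0.0]      # anchor: intent sentence embedding
    matching = [0.0, 1.0]    # positive product, distance 1.0 from the anchor
    far_neg = [3.0, 0.0]     # easy negative, distance 3.0 from the anchor
    near_neg = [0.5, 0.0]    # hard negative, distance 0.5 from the anchor
    print(triplet_loss(intent, matching, far_neg))   # 1 - 3 + 1 < 0, so 0.0
    print(triplet_loss(intent, matching, near_neg))  # 1 - 0.5 + 1 = 1.5
    print(search_based_negatives(["p1", "p2", "p3", "p4"], {"p1", "p3"}, k=1))  # ['p2']
```

The loss is zero once the intent embedding is closer to its matching product than to the negative by at least the margin. Negatives taken from search results tend to be lexically close to the intent while still not belonging to the collection, so they keep the loss non-zero longer and push the encoder past surface-level keyword overlap.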
