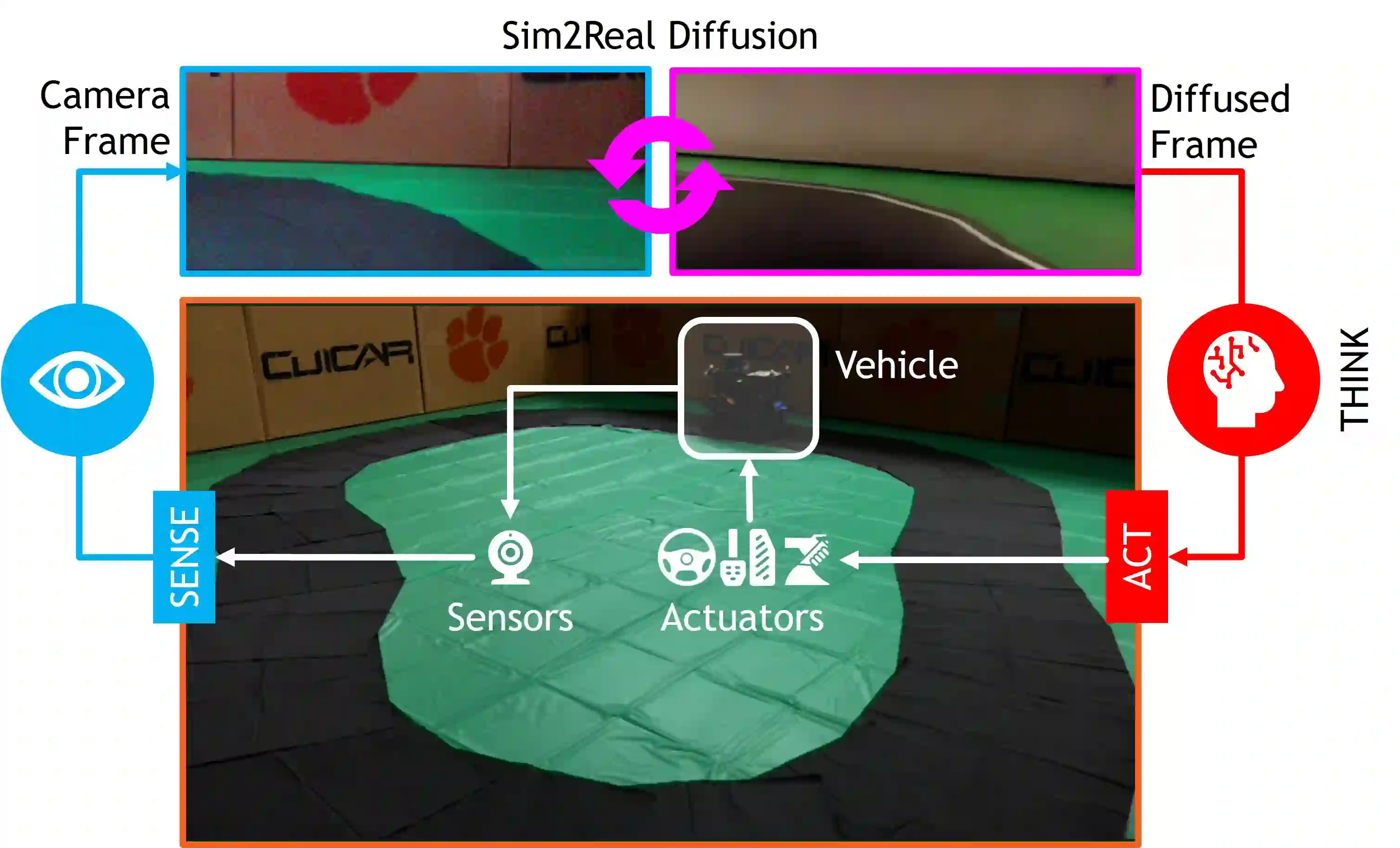

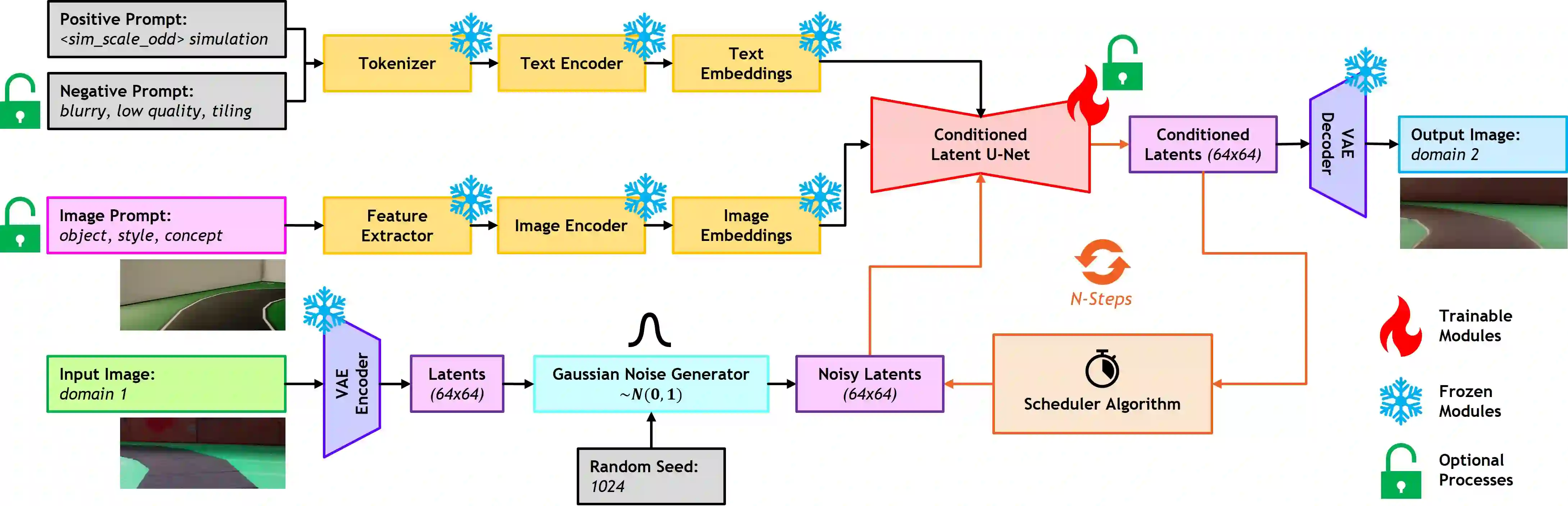

Simulation-based design, optimization, and validation of autonomous vehicles have proven crucial to their improvement over the years. Yet the ultimate measure of effectiveness is their successful transition from simulation to reality (sim2real). Existing sim2real transfer methods, however, struggle to balance four autonomy-oriented requirements: (i) conditioned domain adaptation, (ii) robust performance with limited examples, (iii) modularity in handling multiple domain representations, and (iv) real-time performance. To address these pain points, we present a unified framework for learning cross-domain adaptive representations through conditional latent diffusion for sim2real transferable automated driving. Our framework offers options to leverage (i) alternate foundation models, (ii) a few-shot fine-tuning pipeline, and (iii) textual as well as image prompts for mapping across given source and target domains. It can also generate diverse, high-quality samples when diffusing across parameter spaces such as time of day, weather conditions, seasons, and operational design domains. We systematically analyze the presented framework and report our findings through performance benchmarks and ablation studies. Additionally, we demonstrate its serviceability for autonomous driving through behavioral cloning case studies. Our experiments indicate that the proposed framework can bridge the perceptual sim2real gap by over 40%.

翻译:基于仿真的自动驾驶车辆设计、优化与验证已被证明对其长期改进至关重要。然而,最终衡量其有效性的标准在于能否成功实现从仿真到现实的迁移(sim2real)。现有sim2real迁移方法难以平衡以下面向自主性的需求:(i) 条件化领域自适应,(ii) 有限样本下的鲁棒性能,(iii) 多领域表征处理的模块化,以及(iv) 实时性能。为缓解这些痛点,我们提出一个通过条件潜在扩散学习跨领域自适应表征的统一框架,用于实现sim2real可迁移的自动驾驶。该框架提供以下应用选项:(i) 可替换的基础模型,(ii) 少样本微调流程,以及(iii) 用于跨给定源域与目标域映射的文本与图像提示。该框架还能在扩散过程中跨越时间(如昼夜)、天气条件、季节及运行设计域等参数空间生成多样化高质量样本。我们对所提框架进行系统分析,并通过性能基准测试与消融实验报告研究结果。此外,我们通过行为克隆案例研究论证了其在自动驾驶中的实用性。实验表明,所提框架能够将感知层面的sim2real差距缩小超过40%。
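The abstract names conditional latent diffusion as the core mechanism but does not give its formulation. As a hedged illustration only, the sketch below shows the generic ingredients of such a model: the standard DDPM forward noising of a latent, the conditional noise-prediction loss, and an SDEdit-style DDIM loop that maps a source-domain latent toward a target-domain condition. The linear beta schedule, the hypothetical `eps_model(z_t, t, cond)` signature, the `strength` parameter, and the translation procedure itself are assumptions, not the authors' method. A real system would use a trained denoising network and a VAE encoder/decoder, both omitted here.

```python
import numpy as np


def linear_alpha_bar(num_steps: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> np.ndarray:
    """Cumulative product of (1 - beta_t) for an assumed linear beta schedule (DDPM-style)."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)


def q_sample(z0: np.ndarray, t: int, alpha_bar: np.ndarray, noise: np.ndarray) -> np.ndarray:
    """Forward process: z_t = sqrt(alpha_bar_t) * z_0 + sqrt(1 - alpha_bar_t) * eps."""
    return np.sqrt(alpha_bar[t]) * z0 + np.sqrt(1.0 - alpha_bar[t]) * noise


def predict_z0(zt: np.ndarray, eps_hat: np.ndarray, t: int, alpha_bar: np.ndarray) -> np.ndarray:
    """Invert the forward process given a noise estimate eps_hat."""
    return (zt - np.sqrt(1.0 - alpha_bar[t]) * eps_hat) / np.sqrt(alpha_bar[t])


def diffusion_loss(eps_model, z0, cond, t, alpha_bar, rng) -> float:
    """Conditional noise-prediction loss: mean of (eps - eps_model(z_t, t, cond))^2."""
    noise = rng.standard_normal(z0.shape)
    zt = q_sample(z0, t, alpha_bar, noise)
    pred = eps_model(zt, t, cond)  # hypothetical denoiser conditioned on a domain embedding
    return float(np.mean((noise - pred) ** 2))


def ddim_translate(eps_model, z_src, cond_tgt, alpha_bar, strength=0.6, num_steps=10, rng=None):
    """SDEdit-style translation (assumed, not from the source): partially noise the
    source latent, then denoise deterministically (DDIM, eta = 0) under the target condition."""
    if rng is None:
        rng = np.random.default_rng(0)
    t_start = int(strength * (len(alpha_bar) - 1))
    zt = q_sample(z_src, t_start, alpha_bar, rng.standard_normal(z_src.shape))
    timesteps = np.linspace(t_start, 0, num_steps + 1).astype(int)
    for t, t_prev in zip(timesteps[:-1], timesteps[1:]):
        eps_hat = eps_model(zt, t, cond_tgt)
        z0_hat = predict_z0(zt, eps_hat, t, alpha_bar)
        # Deterministic DDIM update from step t to step t_prev
        zt = np.sqrt(alpha_bar[t_prev]) * z0_hat + np.sqrt(1.0 - alpha_bar[t_prev]) * eps_hat
    return zt
```

Here `strength` trades off fidelity to the source (simulated) latent against how far the sample can move toward the target-domain condition. This is the usual SDEdit trade-off, not a setting reported in the abstract.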