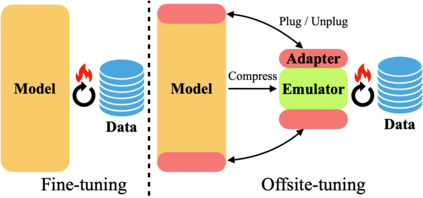

Transfer learning is important for foundation models to adapt to downstream tasks. However, many foundation models are proprietary, so users must share their data with model owners to fine-tune the models, which is costly and raises privacy concerns. Moreover, fine-tuning large foundation models is computation-intensive and impractical for most downstream users. In this paper, we propose Offsite-Tuning, a privacy-preserving and efficient transfer learning framework that can adapt billion-parameter foundation models to downstream data without access to the full model. In offsite-tuning, the model owner sends a lightweight adapter and a lossy compressed emulator to the data owner, who then fine-tunes the adapter on the downstream data with the emulator's assistance. The fine-tuned adapter is then returned to the model owner, who plugs it into the full model to create an adapted foundation model. Offsite-tuning preserves both parties' privacy and is computationally more efficient than existing fine-tuning methods that require access to the full model weights. We demonstrate the effectiveness of offsite-tuning on various large language and vision foundation models. Offsite-tuning achieves accuracy comparable to full-model fine-tuning while being privacy-preserving and efficient, with a 6.5x speedup and a 5.6x memory reduction. Code is available at https://github.com/mit-han-lab/offsite-tuning.
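
The exchange between model owner and data owner described above can be illustrated with a toy sketch. This is not the released implementation: the "model" is a chain of scalar multipliers, the adapter is assumed to be the first and last weights, and the emulator is assumed to be built by dropping every other frozen middle layer. All names (`forward`, `compress`, `finetune_adapter`) are hypothetical and chosen for illustration only.

```python
from typing import List, Tuple

Data = List[Tuple[float, float]]

def forward(head: float, middle: List[float], tail: float, x: float) -> float:
    """Toy model: trainable head, frozen middle layers, trainable tail."""
    h = head * x
    for w in middle:
        h = w * h
    return tail * h

def mse(head: float, middle: List[float], tail: float, data: Data) -> float:
    return sum((forward(head, middle, tail, x) - y) ** 2 for x, y in data) / len(data)

def compress(middle: List[float]) -> List[float]:
    """Lossy emulator (assumption): keep every other frozen layer."""
    return middle[::2]

def finetune_adapter(head: float, tail: float, emulator: List[float],
                     data: Data, lr: float = 0.01, steps: int = 500) -> Tuple[float, float]:
    """Data-owner side: update only the adapter; the emulator stays frozen."""
    gain = 1.0
    for w in emulator:
        gain *= w
    for _ in range(steps):
        g_head = g_tail = 0.0
        for x, y in data:
            err = forward(head, emulator, tail, x) - y
            # output = head * gain * tail * x, so the gradients are analytic
            g_head += 2 * err * tail * gain * x / len(data)
            g_tail += 2 * err * head * gain * x / len(data)
        head -= lr * g_head
        tail -= lr * g_tail
    return head, tail

# Model owner: holds the full model and never sees the downstream data.
head0, tail0 = 1.0, 1.0
middle = [1.1, 0.9, 1.05, 0.95]      # frozen, proprietary weights
emulator = compress(middle)          # sent to the data owner with the adapter

# Data owner: holds private data (here y = 3x) and never sees `middle`.
data = [(1.0, 3.0), (2.0, 6.0), (-1.0, -3.0)]
head1, tail1 = finetune_adapter(head0, tail0, emulator, data)

# Model owner: plugs the returned adapter back into the full model.
zero_shot = mse(head0, middle, tail0, data)
emu_loss = mse(head1, emulator, tail1, data)
plugged_in = mse(head1, middle, tail1, data)
print(zero_shot, emu_loss, plugged_in)
```

In this toy setting the plugged-in loss stays above the emulator loss because the emulator only approximates the frozen middle, which mirrors the trade-off implied by a lossy compressed emulator: the coarser the compression, the less the full model is exposed, but the larger the mismatch when the adapter is plugged back in.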
