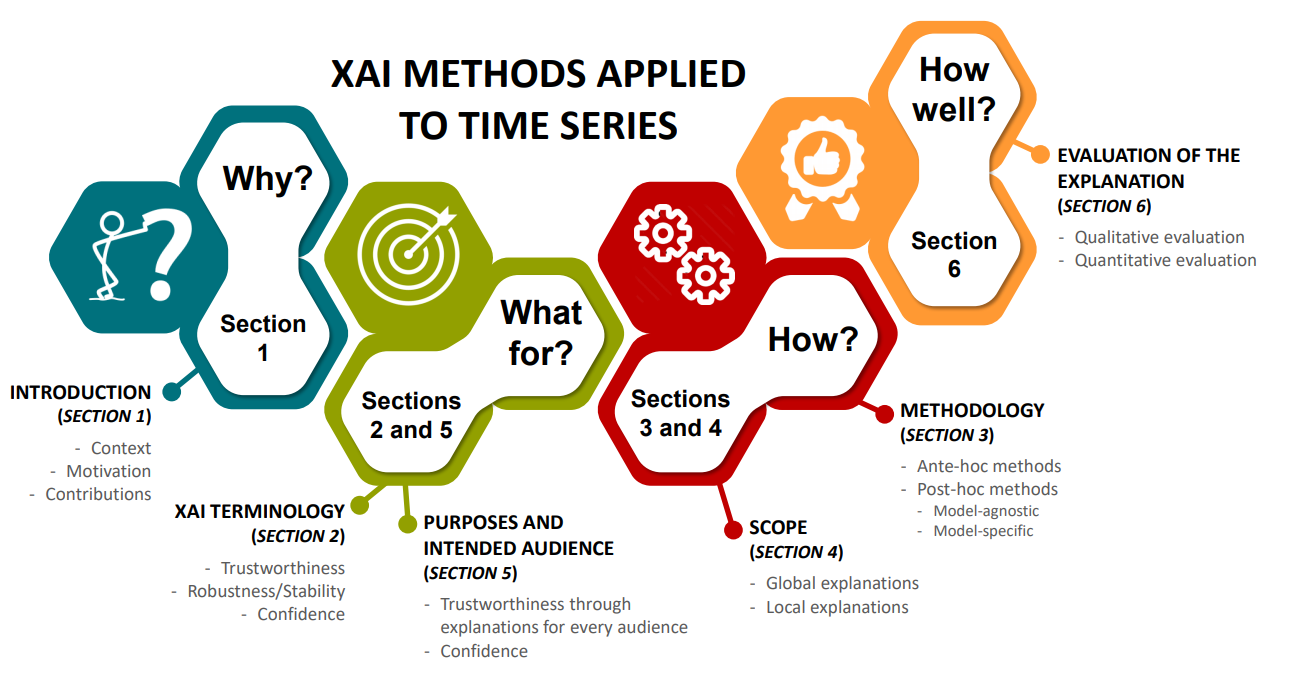

Most state-of-the-art methods for time series are deep learning models that are too complex to be interpreted. This lack of interpretability is a major drawback, because several real-world applications, such as medicine and autonomous driving, are critical tasks. Compared with computer vision and natural language processing, the explainability of models applied to time series has received little attention. In this paper, we present an overview of existing explainable AI (XAI) methods applied to time series and illustrate the types of explanations they produce. We also reflect on how these explanation methods can help build confidence and trust in AI systems.

翻译:在时间序列上应用的大多数最新方法都包括深层次的学习方法,这些方法过于复杂,难以解释,缺乏解释是一个重大缺陷,因为在现实世界中,有几个应用是关键的任务,如医疗领域或自主驾驶领域;与计算机视觉或自然语言处理领域相比,在时间序列上应用的模式的解释性没有引起多少注意;在本文件中,我们概述了在时间序列上应用的现有可解释的AI(XAI)方法,并说明了这些方法的解释性。我们还对这些解释性方法的影响进行了反思,以对AI系统提供信心和信任。