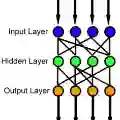

In this paper we show how specific families of positive definite kernels serve as powerful tools in the analysis of iteration algorithms for multilayer feedforward neural network models. Our focus is on particular kernels that adapt well to learning algorithms for data sets and features that display intrinsic self-similarity across feedforward iterations of scaling.
