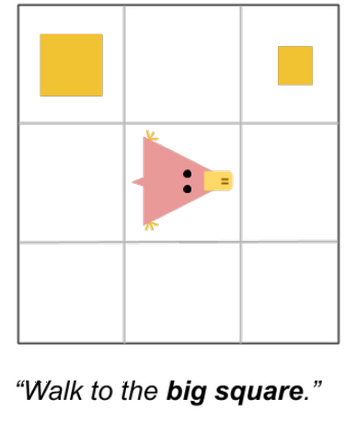

Human reasoning can often be understood as an interplay between two systems: the intuitive and associative ("System 1") and the deliberative and logical ("System 2"). Neural sequence models, which have been increasingly successful at performing complex, structured tasks, exhibit the advantages and failure modes of System 1: they are fast and learn patterns from data, but are often inconsistent and incoherent. In this work, we seek a lightweight, training-free means of improving existing System 1-like sequence models by adding System 2-inspired logical reasoning. We explore several variations on this theme in which candidate generations from a neural sequence model are examined for logical consistency by a symbolic reasoning module, which can either accept or reject each generation. Our approach uses neural inference to mediate between the neural System 1 and the logical System 2. Results in robust story generation and grounded instruction-following show that this approach can increase the coherence and accuracy of neurally based generations.
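The accept/reject loop described above can be illustrated with a minimal sketch under toy assumptions. A fixed iterable of candidate sentences stands in for samples drawn from the System 1 model. A regex `parse` function (hypothetical) stands in for the neural inference step that maps generated text to a logical form. A hand-written `WorldModel` that tracks which agent holds each object plays the role of the symbolic System 2. None of these names, nor the "picks up / drops" domain, come from the work itself; this is a sketch of the generate-then-check idea, not the authors' implementation.

```python
import re
from dataclasses import dataclass, field
from typing import Iterable, Optional

# A fact is (agent, action, obj), e.g. ("Mary", "picks_up", "apple").
Fact = tuple[str, str, str]

# Hypothetical stand-in for the neural parser ("neural inference") that maps a
# generated sentence to a logical form. Here it is only a regex.
_PATTERN = re.compile(r"^(\w+) (picks up|drops) the (\w+)\.$")


def parse(sentence: str) -> Optional[Fact]:
    """Map a sentence to a fact, or return None if it cannot be parsed."""
    m = _PATTERN.match(sentence)
    if m is None:
        return None
    agent, verb, obj = m.groups()
    return (agent, verb.replace(" ", "_"), obj)


@dataclass
class WorldModel:
    """Toy symbolic System 2: records which agent currently holds each object."""
    holder: dict[str, str] = field(default_factory=dict)

    def is_consistent(self, fact: Fact) -> bool:
        agent, action, obj = fact
        if action == "picks_up":
            return obj not in self.holder  # nobody may already hold it
        if action == "drops":
            return self.holder.get(obj) == agent  # only the holder can drop it
        return False

    def update(self, fact: Fact) -> None:
        agent, action, obj = fact
        if action == "picks_up":
            self.holder[obj] = agent
        else:
            del self.holder[obj]


def generate_next(candidates: Iterable[str], world: WorldModel) -> Optional[str]:
    """Return the first candidate System 2 accepts, updating the world state.

    `candidates` may be a lazy generator that samples from System 1 on demand.
    """
    for sentence in candidates:
        fact = parse(sentence)
        if fact is not None and world.is_consistent(fact):
            world.update(fact)
            return sentence
    return None  # every candidate was rejected


world = WorldModel()
print(generate_next(["Mary picks up the apple."], world))
# "John drops the apple." is rejected because Mary holds the apple,
# so the second candidate is returned instead.
print(generate_next(["John drops the apple.", "Mary drops the apple."], world))
```

Because rejection happens before the state is updated, an inconsistent sample never corrupts the world model; the cost is that System 1 may need to be sampled several times per step, and the loop returns `None` when no candidate passes.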