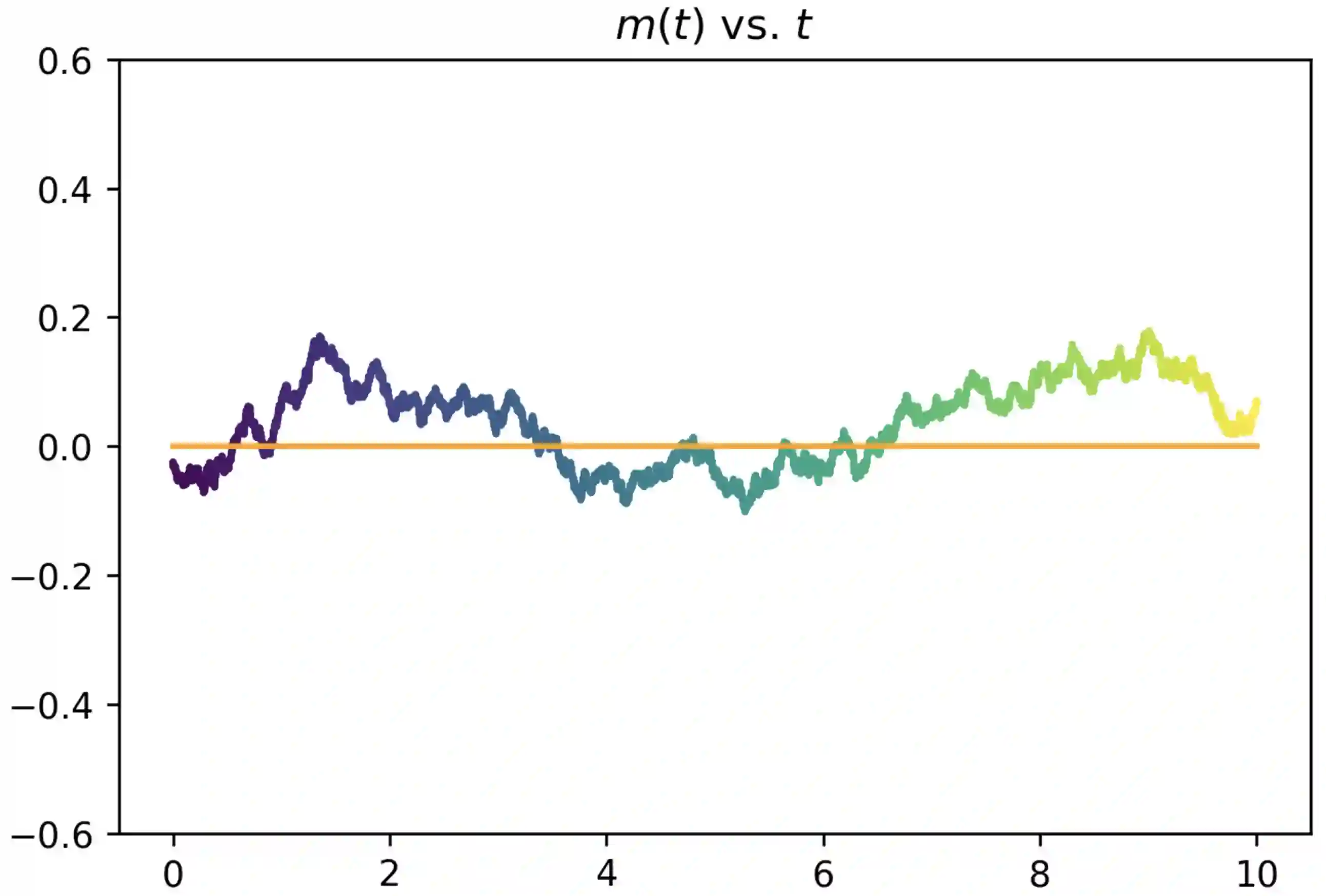

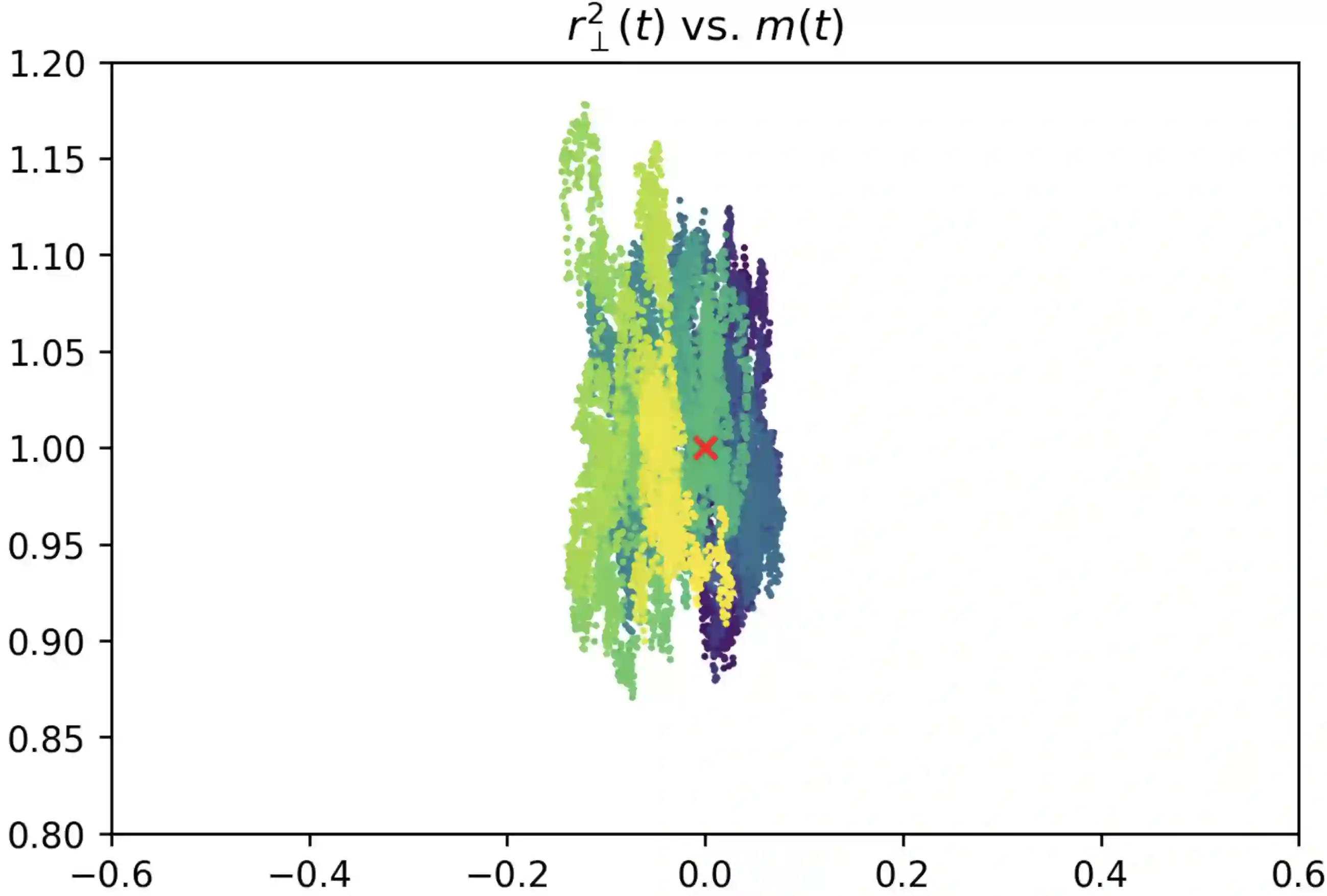

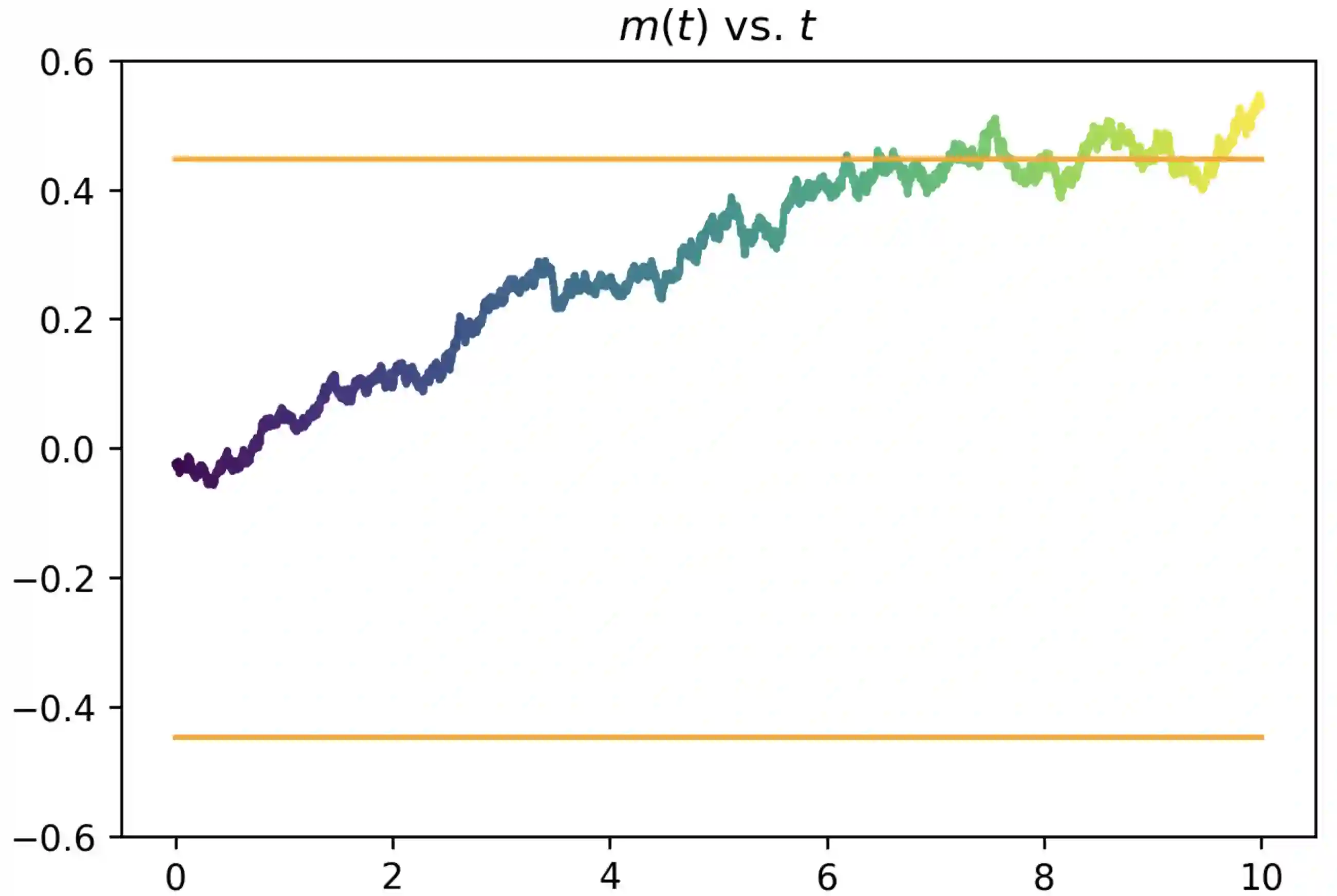

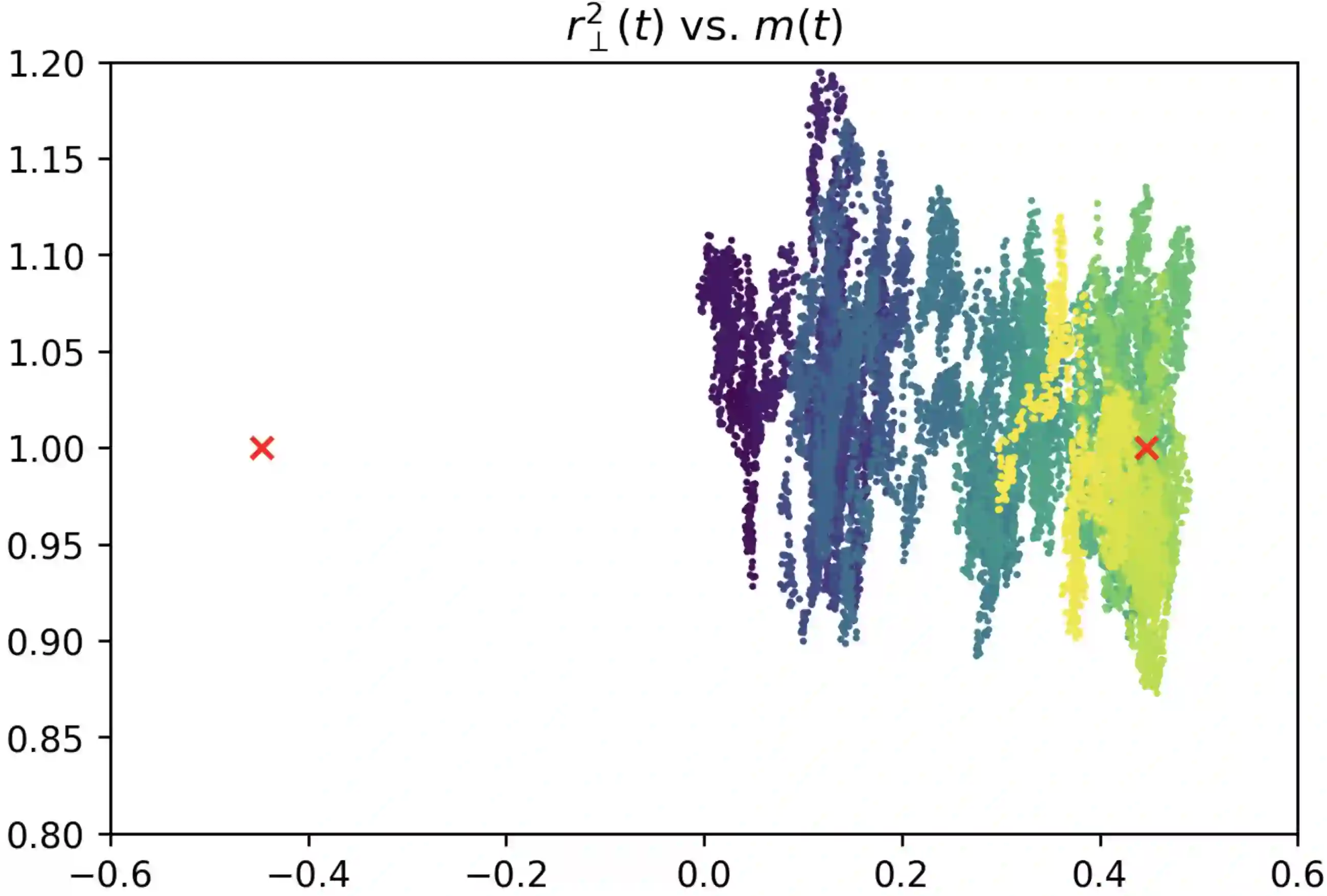

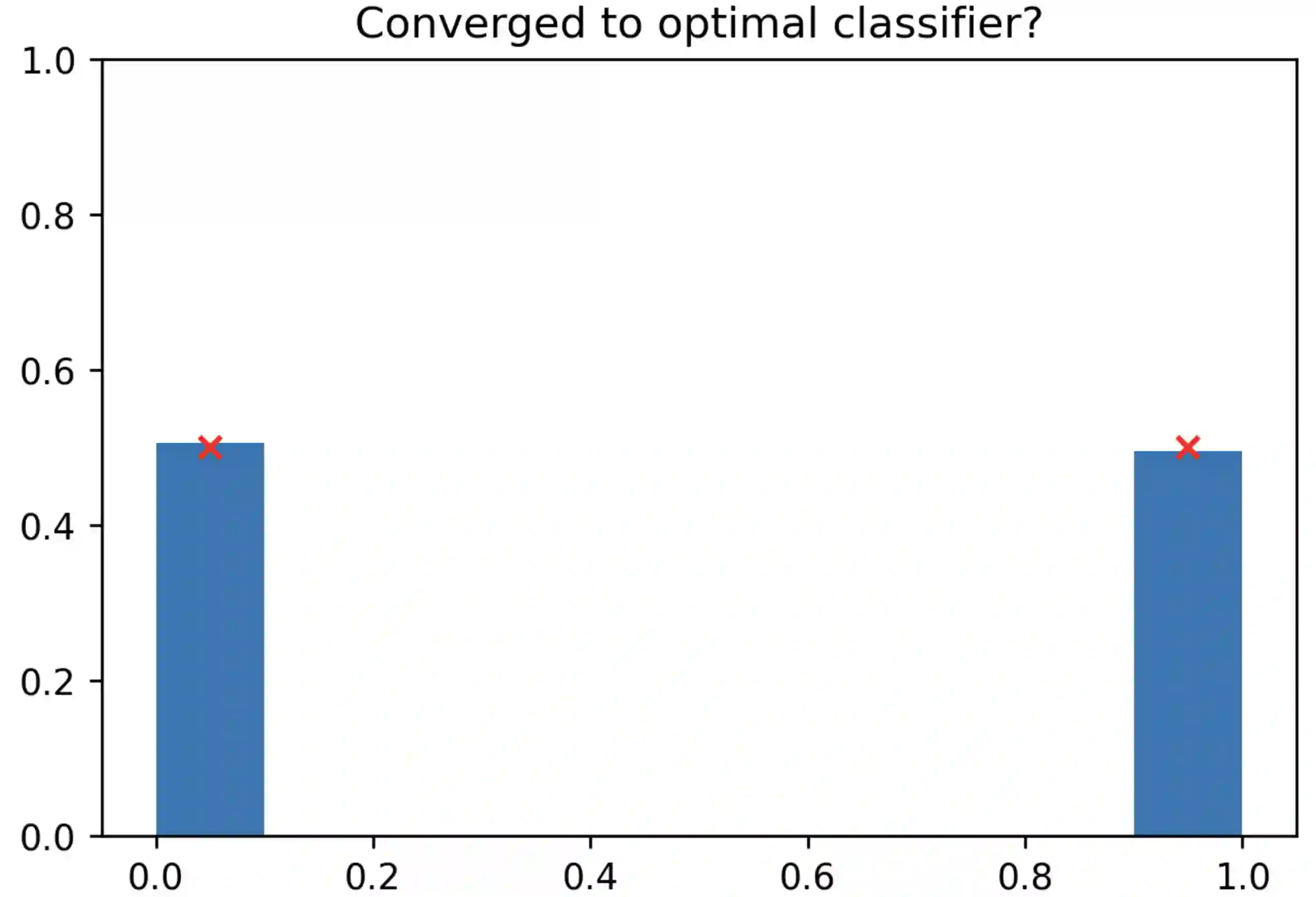

We study the scaling limits of stochastic gradient descent (SGD) with constant step-size in the high-dimensional regime. We prove limit theorems for the trajectories of summary statistics (i.e., finite-dimensional functions) of SGD as the dimension goes to infinity. Our approach allows one to choose the summary statistics that are tracked, the initialization, and the step-size. It yields both ballistic (ODE) and diffusive (SDE) limits, with the limit depending strongly on these choices. We find a critical scaling regime for the step-size: below it, the effective ballistic dynamics matches gradient flow for the population loss, but at it, a new correction term appears that changes the phase diagram. Around the fixed points of this effective dynamics, the corresponding diffusive limits can be quite complex and even degenerate. We demonstrate our approach on popular examples, including estimation for spiked matrix and tensor models and classification via two-layer networks for binary and XOR-type Gaussian mixture models. These examples exhibit surprising phenomena, including multimodal timescales to convergence as well as convergence to sub-optimal solutions with probability bounded away from zero from random (e.g., Gaussian) initializations.
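To make the idea of tracking a summary statistic at the critical step-size scaling concrete, here is a minimal sketch on a toy problem that is not one of the examples in the abstract: online least squares in dimension `n` with step-size `c / n`. The model, the function names, and the parameters `c` and `sigma` are all assumptions chosen for illustration. For this toy model, a direct computation of the expected one-step change of the squared error `e = |x - v|^2` suggests the effective ODE `de/dt = -(2c - c^2) e + c^2 sigma^2` in rescaled time `t = k / n`, whereas gradient flow on the population loss at rate `c` gives `de/dt = -2c e`. The extra `c^2 (e + sigma^2)` term plays the role of a step-size correction in this sketch.

```python
import numpy as np


def run_online_sgd(n=500, c=0.5, sigma=0.5, t_max=4.0, seed=0):
    """Online SGD for least squares in dimension n with step-size c / n.

    Toy model (an assumption of this sketch): y = <a, v> + sigma * noise,
    with a ~ N(0, I_n) and a fresh sample at every step.
    Returns rescaled times t_k = k / n and the statistic e_k = |x_k - v|^2.
    """
    rng = np.random.default_rng(seed)
    v = np.zeros(n)
    v[0] = 1.0                      # hypothetical ground-truth unit vector
    x = np.zeros(n)                 # start at the origin, so e_0 = 1
    delta = c / n                   # step-size proportional to 1 / n
    steps = int(t_max * n)
    e = np.empty(steps + 1)
    e[0] = np.sum((x - v) ** 2)
    for k in range(steps):
        a = rng.standard_normal(n)
        y = a @ v + sigma * rng.standard_normal()
        # SGD step on the per-sample loss 0.5 * (y - <a, x>)^2
        x += delta * (y - a @ x) * a
        e[k + 1] = np.sum((x - v) ** 2)
    t = np.arange(steps + 1) / n
    return t, e


def ode_prediction(t, c, sigma, e0=1.0):
    """Closed-form solution of de/dt = -(2c - c^2) e + c^2 sigma^2 (needs 0 < c < 2)."""
    rate = 2 * c - c ** 2
    e_inf = c ** 2 * sigma ** 2 / rate
    return e_inf + (e0 - e_inf) * np.exp(-rate * t)


def gradient_flow_prediction(t, c, e0=1.0):
    """Solution of de/dt = -2c e, i.e. population gradient flow run at rate c."""
    return e0 * np.exp(-2 * c * t)


if __name__ == "__main__":
    t, e = run_online_sgd()
    print("SGD final error:          ", e[-1])
    print("ODE with correction term: ", ode_prediction(t, 0.5, 0.5)[-1])
    print("Gradient flow prediction: ", gradient_flow_prediction(t, 0.5)[-1])
```

In this sketch the simulated error should sit close to the corrected ODE and visibly above the gradient-flow curve at late times; shrinking `c` brings the two predictions together, which is the qualitative picture the abstract describes for step-sizes below the critical scaling.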
