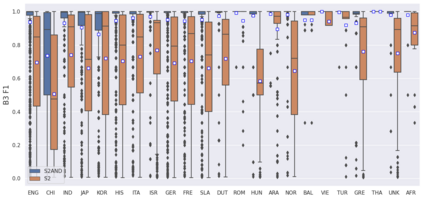

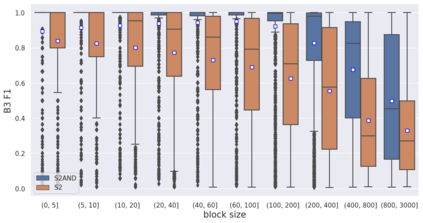

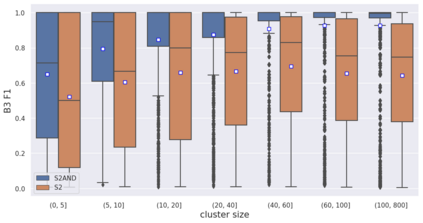

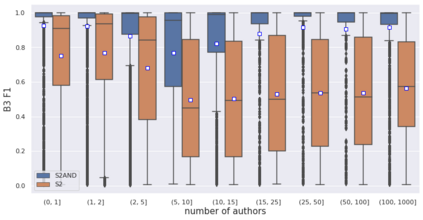

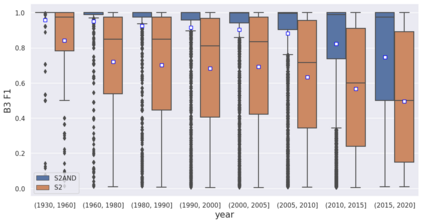

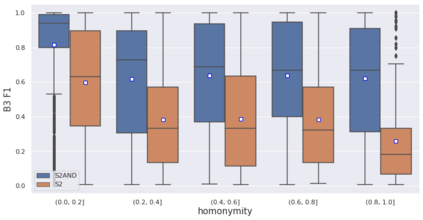

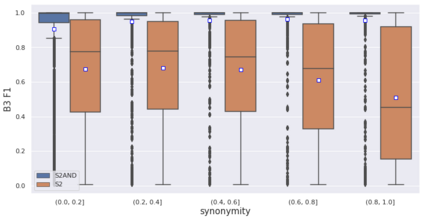

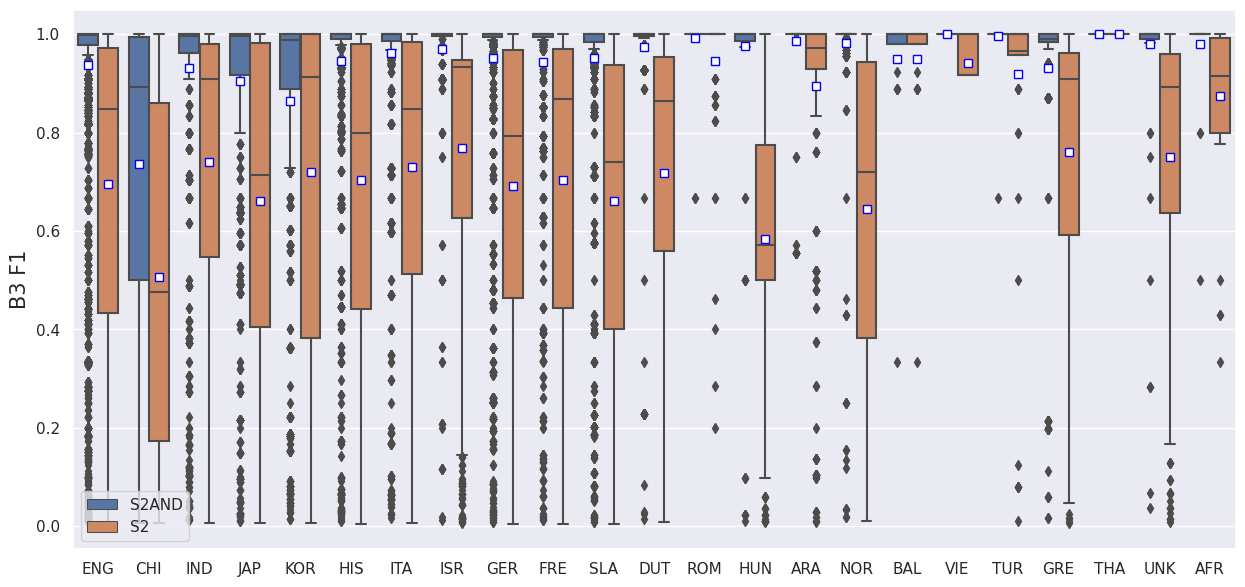

Author Name Disambiguation (AND) is the task of resolving which author mentions in a bibliographic database refer to the same real-world person, and is a critical ingredient of digital library applications such as search and citation analysis. While many AND algorithms have been proposed, comparing them is difficult because they often employ distinct features and are evaluated on different datasets. In response to this challenge, we present S2AND, a unified benchmark dataset for AND on scholarly papers, as well as an open-source reference model implementation. Our dataset harmonizes eight disparate AND datasets into a uniform format, with a single rich feature set drawn from the Semantic Scholar (S2) database. Our evaluation suite for S2AND reports performance split by facets like publication year and number of papers, allowing researchers to track both global performance and measures of fairness across facet values. Our experiments show that because previous datasets tend to cover idiosyncratic and biased slices of the literature, algorithms trained to perform well on one of them may generalize poorly to others. By contrast, we show how training on a union of datasets in S2AND results in more robust models that perform well even on datasets unseen in training. The resulting AND model also substantially improves over the production algorithm in S2, reducing error by over 50% in terms of $B^3$ F1. We release our unified dataset, model code, trained models, and evaluation suite to the research community. https://github.com/allenai/S2AND/
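
Results above are reported in $B^3$ (B-cubed) F1. As a rough illustration only, the sketch below computes the standard mention-averaged B-cubed precision, recall, and F1 for one set of author mentions: for each mention, precision is the fraction of its predicted cluster that shares its true identity, and recall is the fraction of its true cluster that was placed with it. This is not S2AND's evaluation code, which may differ in details such as how scores are aggregated across name blocks. The names `b_cubed` and `relative_error_reduction` are hypothetical, and the latter assumes "error" means $1 - F_1$.

```python
from collections import defaultdict
from typing import Dict, Hashable, Set, Tuple


def _clusters(assignment: Dict[Hashable, Hashable]) -> Dict[Hashable, Set[Hashable]]:
    """Group mention ids by the cluster label they are assigned to."""
    groups: Dict[Hashable, Set[Hashable]] = defaultdict(set)
    for mention, label in assignment.items():
        groups[label].add(mention)
    return groups


def b_cubed(gold: Dict[Hashable, Hashable],
            pred: Dict[Hashable, Hashable]) -> Tuple[float, float, float]:
    """Mention-averaged B^3 precision, recall and F1 (hypothetical helper).

    `gold` and `pred` map each author-mention id to a cluster label
    (true author vs. predicted author); both must cover the same mentions.
    """
    if set(gold) != set(pred):
        raise ValueError("gold and pred must cover the same mentions")
    if not gold:
        raise ValueError("no mentions to score")
    gold_clusters = _clusters(gold)
    pred_clusters = _clusters(pred)
    precision = recall = 0.0
    for mention in gold:
        g = gold_clusters[gold[mention]]
        p = pred_clusters[pred[mention]]
        overlap = len(g & p)  # always >= 1: the mention is in both clusters
        precision += overlap / len(p)
        recall += overlap / len(g)
    n = len(gold)
    precision /= n
    recall /= n
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


def relative_error_reduction(old_f1: float, new_f1: float) -> float:
    """Fractional drop in error, ASSUMING error is defined as 1 - F1."""
    old_err = 1.0 - old_f1
    if old_err == 0:
        raise ValueError("old model has zero error; reduction is undefined")
    return (old_err - (1.0 - new_f1)) / old_err


if __name__ == "__main__":
    # Two real authors (A, B); the model wrongly merges m3 into m1/m2's cluster.
    gold = {"m1": "A", "m2": "A", "m3": "B", "m4": "B"}
    pred = {"m1": "x", "m2": "x", "m3": "x", "m4": "y"}
    p, r, f1 = b_cubed(gold, pred)
    print(f"P={p:.3f} R={r:.3f} F1={f1:.3f}")  # P=0.667 R=0.750 F1=0.706
```

Under the $1 - F_1$ reading, moving from an F1 of 0.80 to 0.90 would be a 50% error reduction, since error halves from 0.20 to 0.10.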
