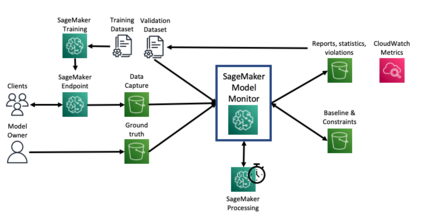

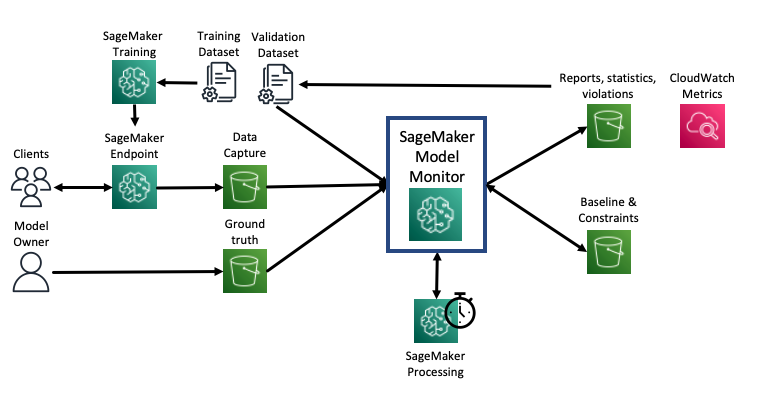

With the increasing adoption of machine learning (ML) models and systems in high-stakes settings across different industries, guaranteeing a model's performance after deployment has become crucial. Monitoring models in production is a critical aspect of ensuring their continued performance and reliability. We present Amazon SageMaker Model Monitor, a fully managed service that continuously monitors the quality of machine learning models hosted on Amazon SageMaker. Our system automatically detects data, concept, bias, and feature attribution drift in models in real time and provides alerts so that model owners can take corrective actions and thereby maintain high-quality models. We describe the key requirements gathered from customers, the system design and architecture, and the methodology for detecting different types of drift. Further, we provide quantitative evaluations, followed by use cases, insights, and lessons learned from more than 1.5 years of production deployment.
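The abstract does not spell out the drift statistics. As a hedged illustration of what data-drift detection against a baseline can look like, the sketch below compares the distribution of one numeric feature in live traffic with its distribution in baseline (e.g., training) data using the L-infinity distance between the two empirical CDFs (the two-sample Kolmogorov-Smirnov statistic), and flags drift when that distance exceeds a threshold. The function names and the 0.1 threshold are hypothetical choices for illustration; they are not the Model Monitor API and not necessarily the exact method used in the paper.

```python
from bisect import bisect_right
from typing import Dict, List, Sequence, Tuple


def ecdf(sorted_values: Sequence[float], x: float) -> float:
    """Fraction of values <= x in an already-sorted sample."""
    return bisect_right(sorted_values, x) / len(sorted_values)


def linf_distance(baseline: Sequence[float], current: Sequence[float]) -> float:
    """L-infinity distance between the empirical CDFs of two samples."""
    if not baseline or not current:
        raise ValueError("both samples must be non-empty")
    b, c = sorted(baseline), sorted(current)
    # Both ECDFs are right-continuous step functions that only jump at observed
    # points, so the supremum of |F_b - F_c| is attained at one of those points.
    return max(abs(ecdf(b, x) - ecdf(c, x)) for x in b + c)


def check_drift(
    baseline: Sequence[float], current: Sequence[float], threshold: float = 0.1
) -> Tuple[float, bool]:
    """Return (distance, drifted) for one feature; threshold is a hypothetical default."""
    d = linf_distance(baseline, current)
    return d, d > threshold


def drifted_features(
    baseline: Dict[str, List[float]],
    current: Dict[str, List[float]],
    threshold: float = 0.1,
) -> List[str]:
    """Names of features present in both datasets whose distribution drifted."""
    return [
        name
        for name in baseline
        if name in current and check_drift(baseline[name], current[name], threshold)[1]
    ]


if __name__ == "__main__":
    baseline = {"age": [float(i) for i in range(100)]}      # values 0..99
    live = {"age": [float(i) + 50 for i in range(100)]}     # shifted by 50
    print(check_drift(baseline["age"], live["age"]))         # (0.5, True)
    print(drifted_features(baseline, live))                  # ['age']
```

In a production setting, a check like this would plausibly run per feature on each batch of captured requests, with every flagged feature raising an alert to the model owner. A threshold on a distribution distance is cheap to compute and easy to explain, but it only captures univariate shifts; concept, bias, and feature attribution drift need other statistics.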
