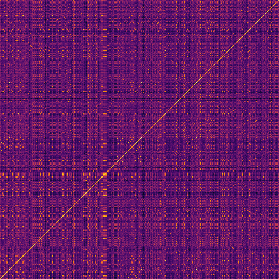

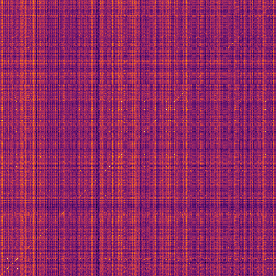

Large language models (such as OpenAI's Codex) have demonstrated impressive zero-shot multi-task capabilities in the software domain, including code explanation. In this work, we examine whether this ability can be used to help with reverse engineering. Specifically, we investigate prompting Codex to identify the purpose, capabilities, and important variable names or values from code, even when the code is produced through decompilation. Alongside an examination of the model's responses to open-ended questions, we devise a true/false quiz framework to characterize the performance of the language model. We present an extensive quantitative analysis of the measured performance of the language model on a set of program purpose identification and information extraction tasks: of the 136,260 questions we posed, it answered 72,754 (about 53.4%) correctly. A key takeaway is that, while promising, LLMs are not yet ready for zero-shot reverse engineering.
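To put the reported counts in perspective, the arithmetic can be sketched as below. This is only an illustrative calculation, not the paper's evaluation code: the function name `accuracy` and the 50% chance baseline are assumptions introduced here (the baseline holds only if the true/false quiz is balanced, which the abstract does not state).

```python
# Counts reported in the abstract.
QUESTIONS_POSED = 136_260
ANSWERED_CORRECTLY = 72_754

# Assumption (not stated in the abstract): the true/false quiz is balanced,
# so random guessing would score about 50%.
ASSUMED_CHANCE = 0.5


def accuracy(correct: int, total: int) -> float:
    """Return the fraction of questions answered correctly."""
    if total <= 0:
        raise ValueError("total must be positive")
    return correct / total


overall = accuracy(ANSWERED_CORRECTLY, QUESTIONS_POSED)
margin = overall - ASSUMED_CHANCE

print(f"overall accuracy: {overall:.1%}")
print(f"margin over assumed chance baseline: {margin:.1%}")
```

Under that assumed baseline, the overall score of roughly 53.4% sits only a few points above guessing, which is consistent with the conclusion that the model is not yet ready for zero-shot reverse engineering.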
