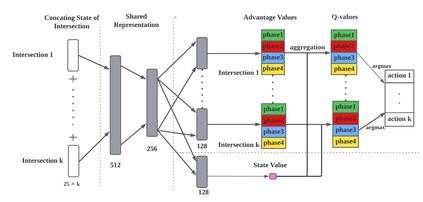

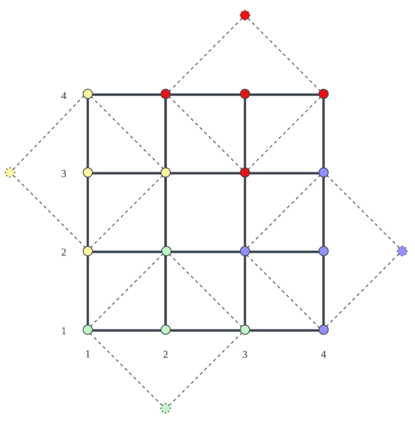

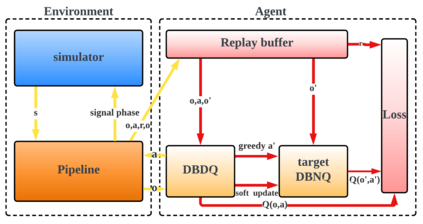

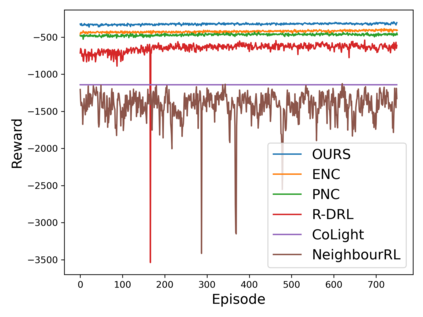

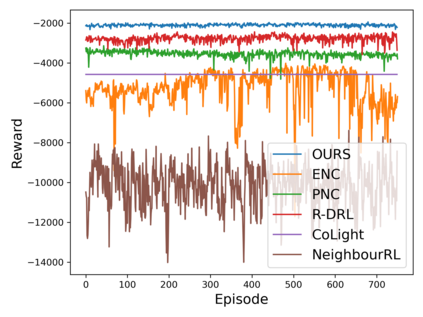

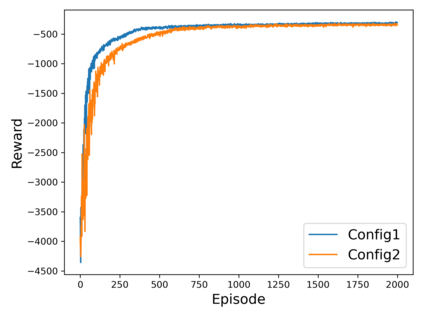

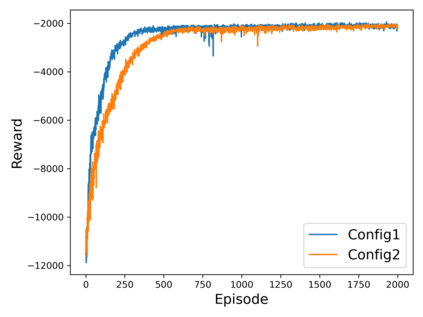

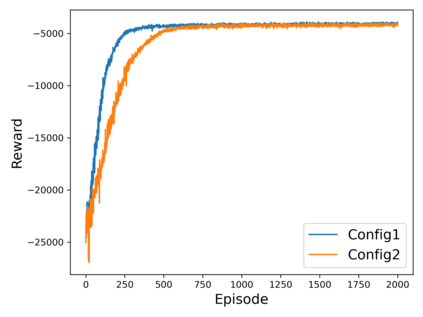

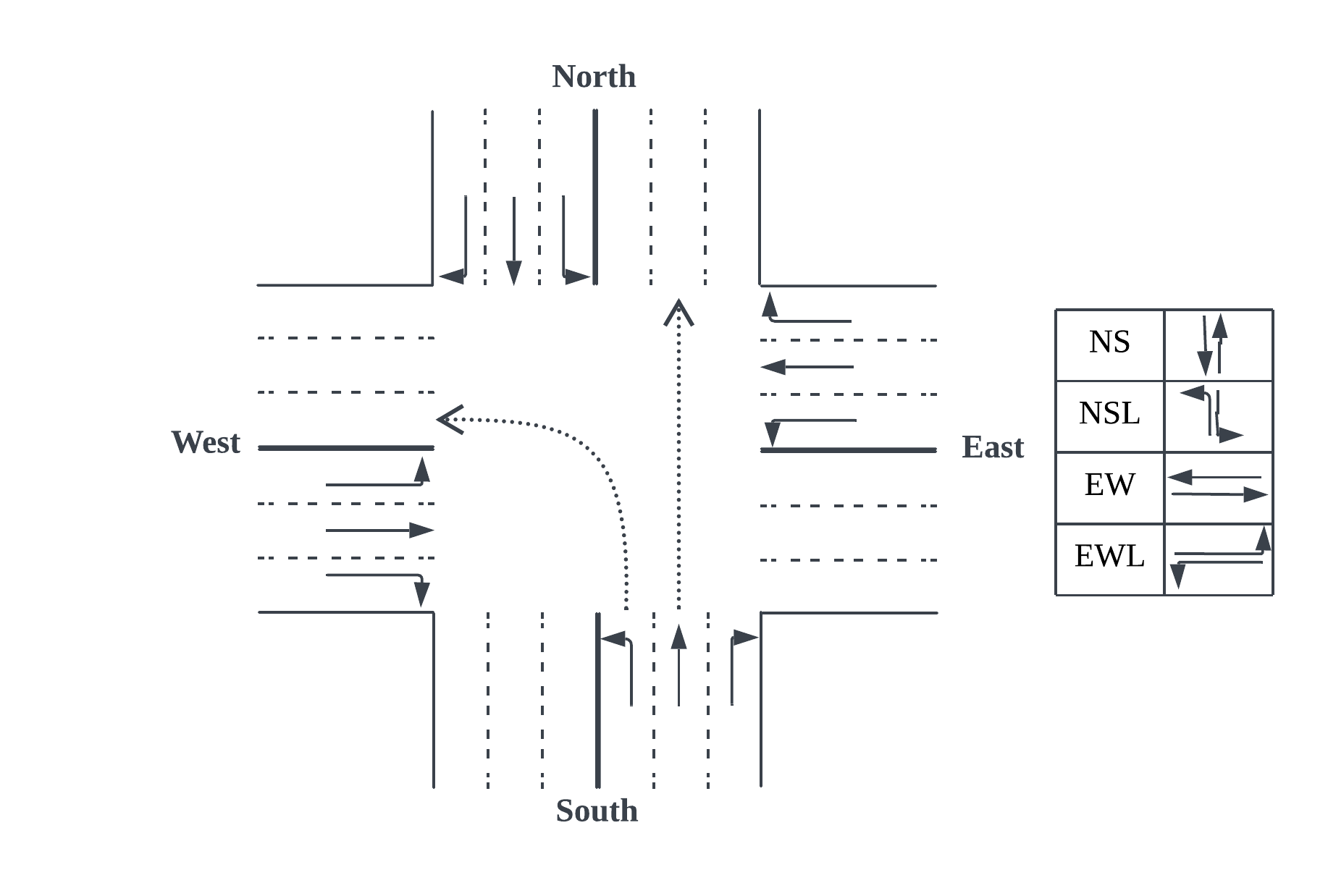

Adaptive traffic signal control with Multi-agent Reinforcement Learning(MARL) is a very popular topic nowadays. In most existing novel methods, one agent controls single intersections and these methods focus on the cooperation between intersections. However, the non-stationary property of MARL still limits the performance of the above methods as the size of traffic networks grows. One compromised strategy is to assign one agent with a region of intersections to reduce the number of agents. There are two challenges in this strategy, one is how to partition a traffic network into small regions and the other is how to search for the optimal joint actions for a region of intersections. In this paper, we propose a novel training framework RegionLight where our region partition rule is based on the adjacency between the intersection and extended Branching Dueling Q-Network(BDQ) to Dynamic Branching Dueling Q-Network(DBDQ) to bound the growth of the size of joint action space and alleviate the bias introduced by imaginary intersections outside of the boundary of the traffic network. Our experiments on both real datasets and synthetic datasets demonstrate that our framework performs best among other novel frameworks and that our region partition rule is robust.

翻译:针对自适应的多智能体强化学习(MARL)交通信号控制是当前非常热门的课题。现有的一些新颖方法中,一个控制单个交叉口的智能体应用于协作多个交叉口。然而,MARL 的非静态特性仍限制着上述方法的性能,尤其是在交通网络不断扩大的情况下。一种可行妥协策略是将每个智能体分配到一组交叉口区域中以减少智能体的数量。该策略存在两个挑战,一是如何将交通网络划分为小区域,二是如何搜索区域交叉口的最佳联合行动。本文提出一个名为 RegionLight 的新型培训框架,在其中,我们的区域划分规则基于交叉口之间的邻接关系,并利用扩展分支对决 Q-Network(BDQ)来将其转化为动态分支对决 Q-Network(DBDQ)以限制联合动作空间大小的增长并减轻引入边界之外的虚假交叉口所带来的偏差。我们在真实数据集和合成数据集上的实验表明,我们的框架在其他新型框架中表现最佳,而我们的区域划分规则具有较好的鲁棒性。