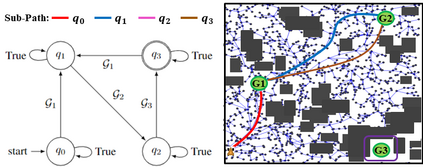

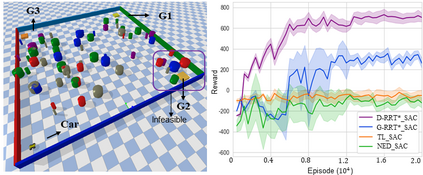

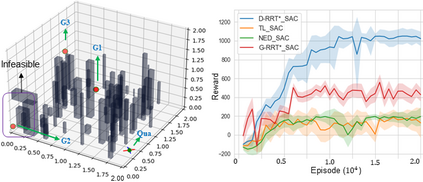

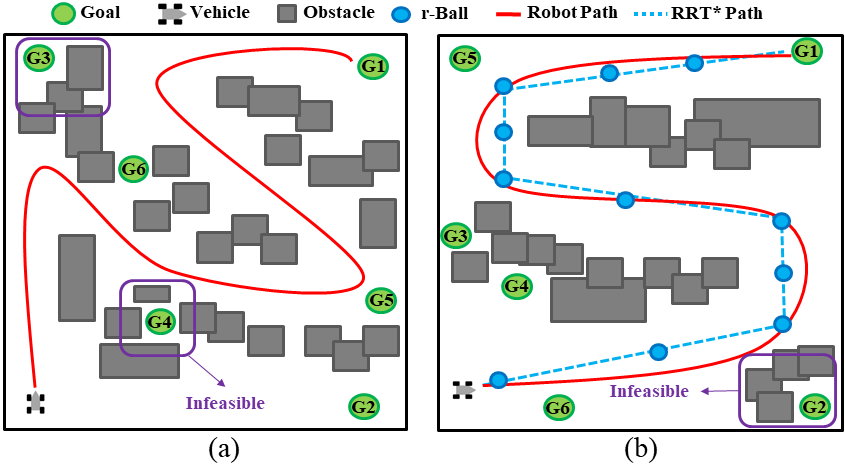

This paper explores continuous-time control synthesis for target-driven navigation to satisfy complex high-level tasks expressed in linear temporal logic (LTL). We propose a model-free framework using deep reinforcement learning (DRL) where the underlying dynamic system is unknown (an opaque box). Unlike prior work, this paper considers scenarios where the given LTL specification might be infeasible and therefore cannot be accomplished globally. Instead of modifying the given LTL formula, we provide a general DRL-based approach to satisfy it with minimal violation. To do this, we transform what was previously a multi-objective DRL problem, requiring simultaneous automaton satisfaction and minimum violation cost, into a single-objective one. By guiding the DRL agent with a sampling-based path planning algorithm for the potentially infeasible LTL task, the proposed approach mitigates the myopic tendencies of DRL, which are often an issue when learning general LTL tasks that can have long or infinite horizons. This is achieved by decomposing an infeasible LTL formula into several reach-avoid sub-tasks with shorter horizons, which can be trained in a modular DRL architecture. Furthermore, we overcome the exploration challenge that DRL faces in complex and cluttered environments by using path planners to design rewards that are dense in the configuration space. The benefits of the presented approach are demonstrated through tests on various complex nonlinear systems and through comparisons with state-of-the-art baselines. A video demonstration is available on YouTube: https://youtu.be/jBhx6Nv224E.
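The abstract does not give the reward design, so the following is only a minimal sketch of what a planner-guided dense reward for one reach-avoid sub-task could look like. It assumes a 2D configuration space and a waypoint path already returned by some sampling-based planner. The function names, `goal_radius`, and `goal_bonus` are hypothetical and not taken from the paper.

```python
import math

def remaining_path_length(path, position):
    """Approximate distance-to-goal along a 2D waypoint path.

    Projects `position` onto the closest path segment and returns the offset
    to that projection plus the path length from the projection to the goal.
    """
    if len(path) < 2:
        raise ValueError("path needs at least two waypoints")
    px, py = position
    best = None   # (offset from path, remaining length) of the closest segment
    tail = 0.0    # length of the path after the segment being examined
    for i in range(len(path) - 2, -1, -1):
        (ax, ay), (bx, by) = path[i], path[i + 1]
        seg = math.dist(path[i], path[i + 1])
        if seg == 0.0:
            t = 0.0
        else:
            # Projection parameter, clamped so the projection stays on the segment.
            t = ((px - ax) * (bx - ax) + (py - ay) * (by - ay)) / (seg * seg)
            t = max(0.0, min(1.0, t))
        proj = (ax + t * (bx - ax), ay + t * (by - ay))
        offset = math.dist(position, proj)
        remaining = offset + (1.0 - t) * seg + tail
        if best is None or offset < best[0]:
            best = (offset, remaining)
        tail += seg
    return best[1]

def dense_reward(path, prev_position, position, goal_radius=0.1, goal_bonus=10.0):
    """Reward progress along the planned path, plus a bonus near the goal.

    Assumed shaping: the decrease in remaining path length between two steps,
    so the signal is non-zero almost everywhere rather than only at the goal.
    """
    progress = (remaining_path_length(path, prev_position)
                - remaining_path_length(path, position))
    reached = math.dist(position, path[-1]) <= goal_radius
    return progress + (goal_bonus if reached else 0.0)

# Hypothetical L-shaped path, as a planner might return around an obstacle.
example_path = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0)]
step_reward = dense_reward(example_path, (0.0, 0.0), (0.5, 0.0))
```

Because the reward depends on progress along the planned path rather than on straight-line distance to the target, an agent that follows the path around an obstacle still sees a positive signal at every step. Obstacle penalties and the violation cost mentioned in the abstract are left out of this sketch.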

