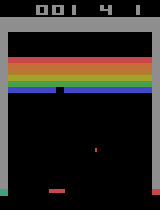

Humans perceive the world in terms of objects and the relations between them. In fact, for any given pair of objects, a myriad of relations apply. How does the cognitive system learn which relations are useful for characterizing the task at hand? And how can it use these representations to build a relational policy for interacting effectively with the environment? In this paper we propose that this problem can be understood through the lens of a subfield of symbolic machine learning called relational reinforcement learning (RRL). To demonstrate the potential of our approach, we build a simple model of relational policy learning based on a function approximator developed in RRL. We trained and tested our model on three Atari games that required considering an increasing number of potential relations: Breakout, Pong, and Demon Attack. In each game, our model was able to select adequate relational representations and build a relational policy incrementally. We discuss the relationship between our model and models of relational and analogical reasoning, as well as its limitations and future directions of research.
