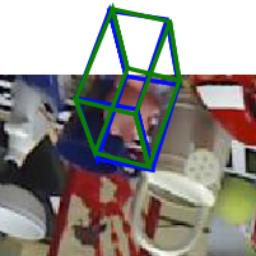

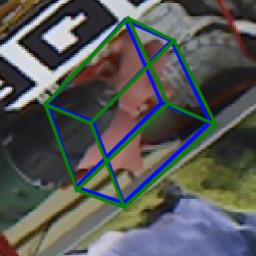

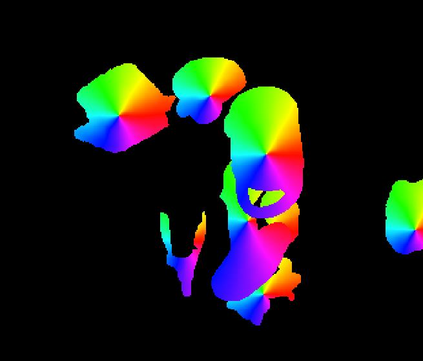

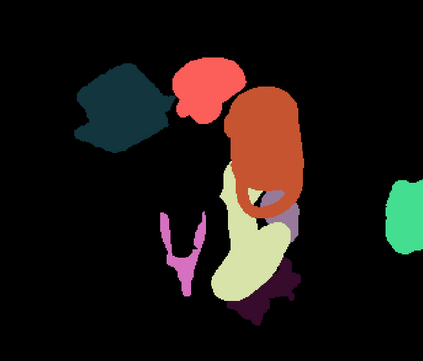

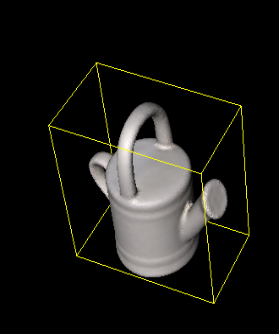

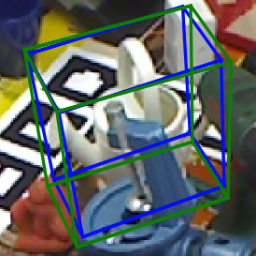

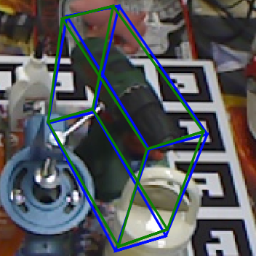

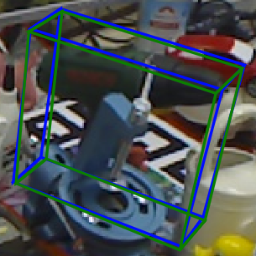

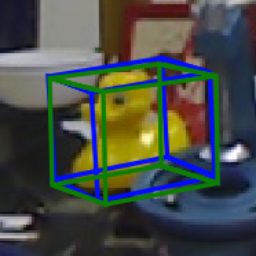

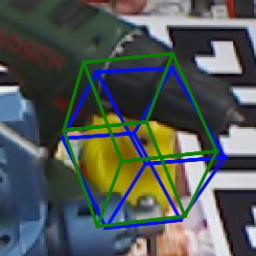

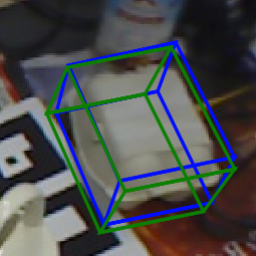

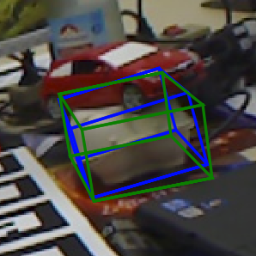

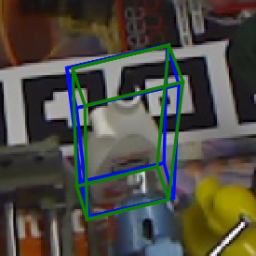

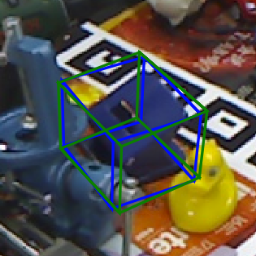

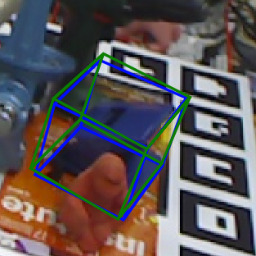

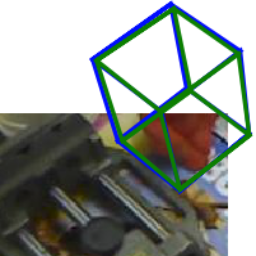

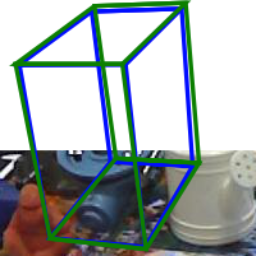

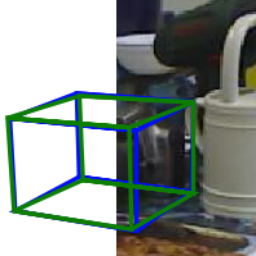

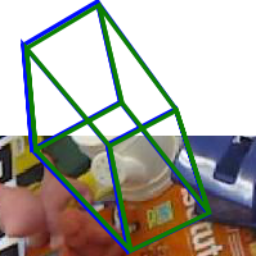

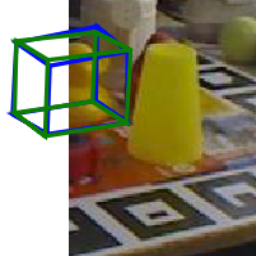

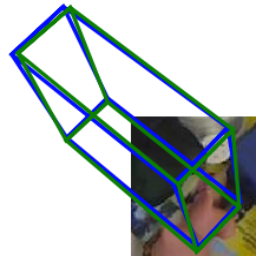

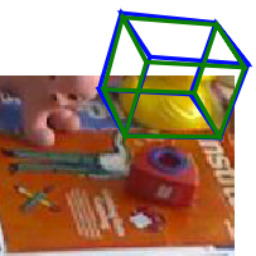

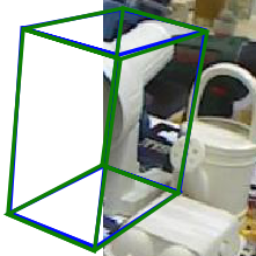

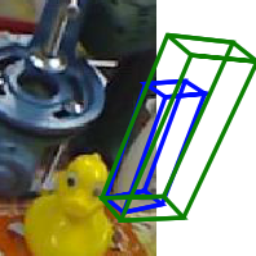

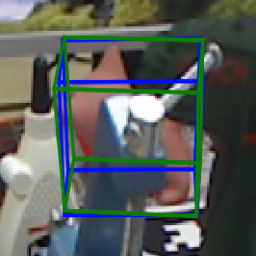

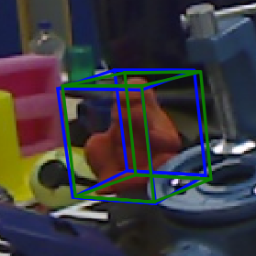

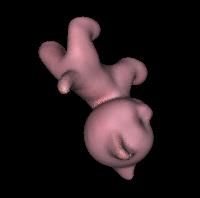

This paper addresses the challenge of 6DoF pose estimation from a single RGB image under severe occlusion or truncation. Many recent works have shown that a two-stage approach, which first detects keypoints and then solves a Perspective-n-Point (PnP) problem for pose estimation, achieves remarkable performance. However, most of these methods only localize a set of sparse keypoints by regressing their image coordinates or heatmaps, which are sensitive to occlusion and truncation. Instead, we introduce a Pixel-wise Voting Network (PVNet) to regress pixel-wise unit vectors pointing to the keypoints and use these vectors to vote for keypoint locations using RANSAC. This creates a flexible representation for localizing occluded or truncated keypoints. Another important feature of this representation is that it provides uncertainties of keypoint locations that can be further leveraged by the PnP solver. Experiments show that the proposed approach outperforms the state of the art on the LINEMOD, Occlusion LINEMOD and YCB-Video datasets by a large margin, while being efficient for real-time pose estimation. We further create a Truncation LINEMOD dataset to validate the robustness of our approach against truncation. The code will be available at https://zju-3dv.github.io/pvnet/.
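
The RANSAC voting step and the keypoint uncertainty it yields can be illustrated with a short sketch. This is not the authors' implementation: it assumes the object pixels and their predicted unit vectors for one keypoint are already available (for example, from a segmentation mask plus the network output), and the helper names `intersect_rays`, `count_votes` and `vote_keypoint`, the hypothesis count (128) and the cosine threshold (0.99) are hypothetical, illustrative choices. Each hypothesis is the intersection of the rays from two randomly chosen pixels; every pixel whose vector points toward the hypothesis within the threshold votes for it, and the vote counts weight a mean (the keypoint estimate) and a covariance (its uncertainty).

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def intersect_rays(p1: Point, d1: Point, p2: Point, d2: Point) -> Optional[Point]:
    """Hypothetical helper: intersect lines p1 + t*d1 and p2 + s*d2.

    Returns None when the directions are (nearly) parallel.
    """
    # Solve t*d1 - s*d2 = p2 - p1 with the 2D cross product: t = (dp x d2) / (d1 x d2).
    cross = d1[0] * d2[1] - d1[1] * d2[0]
    if abs(cross) < 1e-9:
        return None
    dx, dy = p2[0] - p1[0], p2[1] - p1[1]
    t = (dx * d2[1] - dy * d2[0]) / cross
    return (p1[0] + t * d1[0], p1[1] + t * d1[1])


def count_votes(h: Point, pixels: Sequence[Point], vectors: Sequence[Point],
                threshold: float) -> int:
    """Count pixels whose unit vector points toward h (cosine >= threshold)."""
    votes = 0
    for (px, py), (vx, vy) in zip(pixels, vectors):
        ux, uy = h[0] - px, h[1] - py
        norm = math.hypot(ux, uy)
        if norm < 1e-9:
            continue  # direction is undefined when the pixel sits on the hypothesis
        if (ux * vx + uy * vy) / norm >= threshold:
            votes += 1
    return votes


def vote_keypoint(pixels: Sequence[Point], vectors: Sequence[Point],
                  num_hypotheses: int = 128, threshold: float = 0.99,
                  seed: int = 0) -> Tuple[Point, Tuple[Point, Point]]:
    """Sketch of RANSAC-style voting; returns (weighted mean, 2x2 covariance)."""
    if len(pixels) < 2:
        raise ValueError("need at least two pixels to form a hypothesis")
    rng = random.Random(seed)
    hyps: List[Point] = []
    weights: List[int] = []
    for _ in range(num_hypotheses):
        # Each hypothesis is the intersection of two randomly sampled pixel rays.
        i, j = rng.sample(range(len(pixels)), 2)
        h = intersect_rays(pixels[i], vectors[i], pixels[j], vectors[j])
        if h is None:
            continue
        w = count_votes(h, pixels, vectors, threshold)
        if w > 0:
            hyps.append(h)
            weights.append(w)
    if not hyps:
        raise ValueError("no hypothesis received any votes")

    # Vote-weighted mean and covariance summarize the keypoint distribution.
    total = float(sum(weights))
    mx = sum(w * h[0] for h, w in zip(hyps, weights)) / total
    my = sum(w * h[1] for h, w in zip(hyps, weights)) / total
    cxx = sum(w * (h[0] - mx) ** 2 for h, w in zip(hyps, weights)) / total
    cxy = sum(w * (h[0] - mx) * (h[1] - my) for h, w in zip(hyps, weights)) / total
    cyy = sum(w * (h[1] - my) ** 2 for h, w in zip(hyps, weights)) / total
    return (mx, my), ((cxx, cxy), (cxy, cyy))
```

A covariance like this is the kind of per-keypoint uncertainty the abstract says the PnP solver can exploit; one plausible way (an assumption here, not shown in the code) is to weight each keypoint's reprojection error by the inverse covariance, so confidently localized keypoints dominate the pose fit.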