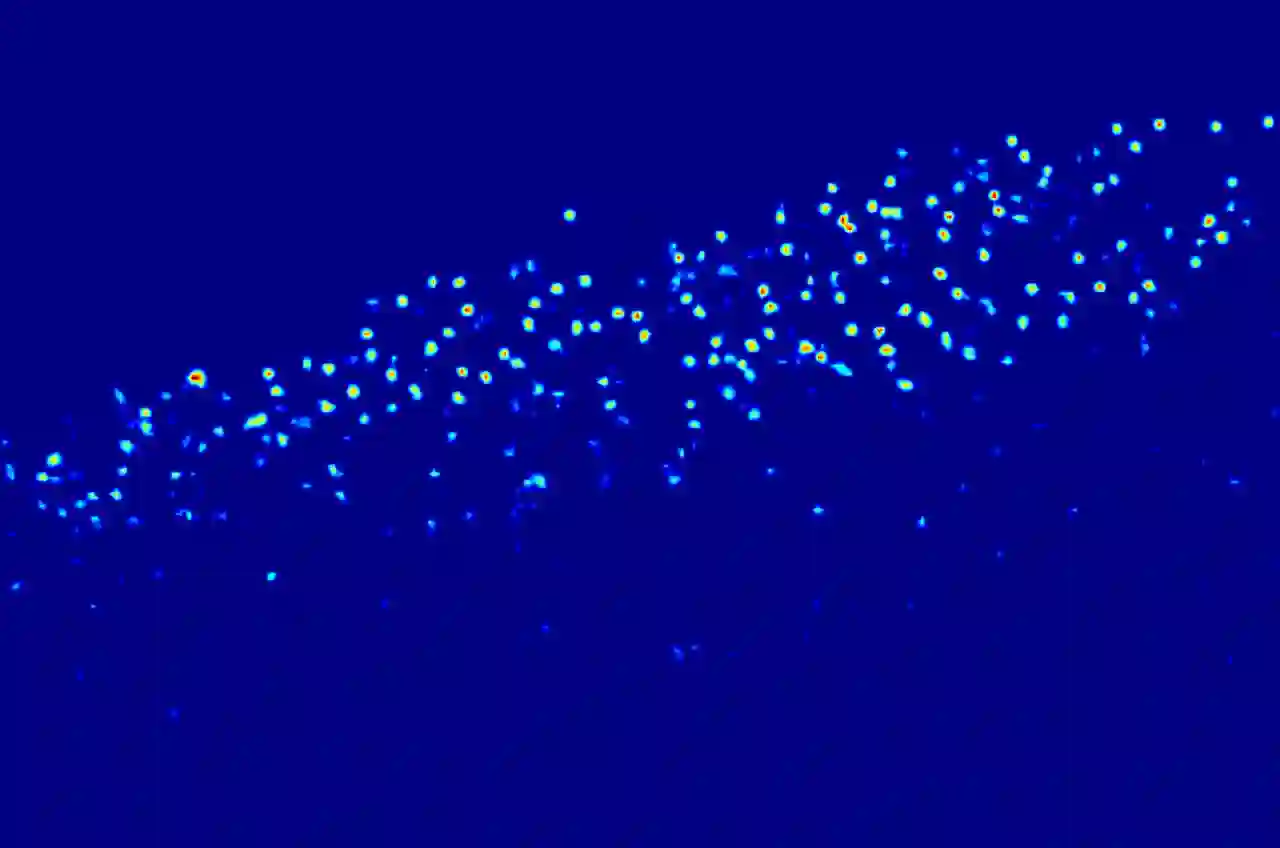

In crowd counting datasets, people appear at different scales depending on their distance from the camera. To address this issue, we propose a novel multi-branch scale-aware attention network that exploits the hierarchical structure of convolutional neural networks and generates, in a single forward pass, multi-scale density predictions from different layers of the architecture. To aggregate these maps into our final prediction, we present a new soft attention mechanism that learns a set of gating masks. Furthermore, we introduce a scale-aware loss function that regularizes the training of the different branches and guides each of them to specialize on a particular scale. As this new training requires annotations for the size of each head, we also propose a simple yet effective technique to estimate them automatically. Finally, we present an ablation study on each of these components and compare our approach against the literature on four crowd counting datasets: UCF-QNRF, ShanghaiTech A & B, and UCF_CC_50. Our approach achieves state-of-the-art results on all of them, with a remarkable improvement on UCF-QNRF (a 25% reduction in error).
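
The abstract does not spell out how the gating masks combine the branch outputs. The following is a minimal sketch that assumes each of the K branches emits a density map plus a per-pixel attention logit map of the same size, and that the logits are normalized with a softmax across branches. Under that assumption the fused density is a per-pixel weighted sum, and the crowd count is the sum of the fused map. The helpers `pixelwise_softmax`, `fuse_density_maps` and `count` are hypothetical names, and the paper's actual gating network may differ.

```python
import math
from typing import List

Map = List[List[float]]  # an H x W map stored as nested lists


def pixelwise_softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax across branches at a single pixel."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_density_maps(density_maps: List[Map], attention_logits: List[Map]) -> Map:
    """Fuse K branch density maps with K soft gating masks (hypothetical helper).

    At each pixel the K attention logits become weights that sum to 1, and the
    fused density is the weighted sum of the K branch densities at that pixel.
    """
    if not density_maps or len(density_maps) != len(attention_logits):
        raise ValueError("expected one attention map per density map")
    height, width = len(density_maps[0]), len(density_maps[0][0])
    fused = [[0.0] * width for _ in range(height)]
    for i in range(height):
        for j in range(width):
            weights = pixelwise_softmax([a[i][j] for a in attention_logits])
            fused[i][j] = sum(w * d[i][j] for w, d in zip(weights, density_maps))
    return fused


def count(density: Map) -> float:
    """The estimated crowd count is the sum (integral) of the density map."""
    return sum(sum(row) for row in density)


if __name__ == "__main__":
    # Two toy 2x2 branches: one for small (far) heads, one for large (near) heads.
    small_heads = [[0.5, 0.0], [0.0, 0.5]]
    large_heads = [[0.0, 1.0], [1.0, 0.0]]
    # Logits that trust the small-head branch on the diagonal, the other elsewhere.
    gate_small = [[10.0, -10.0], [-10.0, 10.0]]
    gate_large = [[-10.0, 10.0], [10.0, -10.0]]
    fused = fuse_density_maps([small_heads, large_heads], [gate_small, gate_large])
    print(round(count(fused), 3))  # prints 3.0
```

With equal logits at a pixel the fusion reduces to a plain average of the branches, while sharper logits approach a hard per-pixel selection of a single branch.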

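
Scale-aware supervision needs a size for every annotated head. The abstract does not describe the authors' estimation technique, so this sketch swaps in a common heuristic from the crowd-counting literature: it approximates head size by the mean distance to the k nearest annotated heads, which is the idea behind geometry-adaptive Gaussian kernels. The helpers `estimate_head_sizes` and `assign_branch`, the `scale` factor and the size thresholds are all hypothetical. They only illustrate how a size estimate could route each head to the branch that should specialize on it.

```python
import bisect
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) head annotation in pixels


def estimate_head_sizes(points: Sequence[Point], k: int = 3, scale: float = 1.0) -> List[float]:
    """Hypothetical proxy: size = scale * mean distance to the k nearest other heads."""
    if len(points) < 2:
        raise ValueError("need at least two annotated heads")
    sizes = []
    for idx, p in enumerate(points):
        # Brute-force O(N^2) search; a k-d tree would be preferable for dense images.
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != idx)
        nearest = dists[:k]
        sizes.append(scale * sum(nearest) / len(nearest))
    return sizes


def assign_branch(size: float, thresholds: Sequence[float]) -> int:
    """Map a head size to a branch index given ascending size thresholds."""
    return bisect.bisect_right(thresholds, size)


if __name__ == "__main__":
    heads = [(0.0, 0.0), (3.0, 4.0), (6.0, 8.0), (100.0, 100.0)]
    sizes = estimate_head_sizes(heads, k=1)
    print([round(s, 1) for s in sizes])  # [5.0, 5.0, 5.0, 131.5]
    print([assign_branch(s, [10.0, 50.0]) for s in sizes])  # [0, 0, 0, 2]
```

As the last point shows, this proxy overestimates the size of isolated heads, which is one reason a dedicated estimation technique can be worthwhile.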