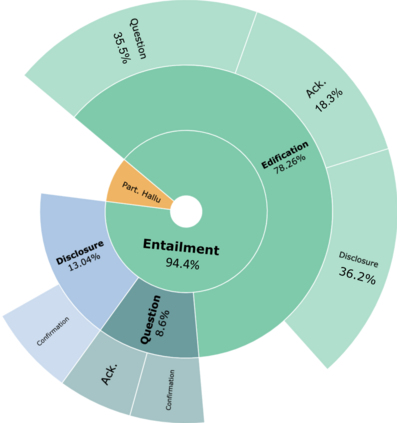

The goal of information-seeking dialogue is to respond to seeker queries with natural language utterances that are grounded in knowledge sources. However, dialogue systems often produce unsupported utterances, a phenomenon known as hallucination. To mitigate this behavior, we adopt a data-centric solution and create FaithDial, a new benchmark for hallucination-free dialogues, by editing hallucinated responses in the Wizard of Wikipedia (WoW) benchmark. We observe that FaithDial is more faithful than WoW while also maintaining engaging conversations. We show that FaithDial can serve as a training signal for: i) a hallucination critic, which discriminates whether an utterance is faithful or not and improves performance by 12.8 F1 points on the BEGIN benchmark compared to existing datasets for dialogue coherence; ii) high-quality dialogue generation. We benchmark a series of state-of-the-art models and propose an auxiliary contrastive objective that achieves the highest level of faithfulness and abstractiveness according to several automated metrics. Further, we find that the benefits of FaithDial generalize to zero-shot transfer on other datasets, such as CMU-Dog and TopicalChat. Finally, human evaluation reveals that responses generated by models trained on FaithDial are perceived as more interpretable, cooperative, and engaging.
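To make the critic's F1 metric concrete, the following is a minimal sketch of how an F1 score could be computed for a binary hallucination critic. It assumes labels of 1 (hallucinated) and 0 (faithful); the function name `f1_score` and the toy labels are purely illustrative and are not taken from FaithDial or BEGIN, whose exact label scheme and averaging are not specified here.

```python
def f1_score(gold, pred, positive=1):
    """F1 for the `positive` class (assumed here: 1 = hallucinated)."""
    pairs = list(zip(gold, pred))
    tp = sum(1 for g, p in pairs if g == positive and p == positive)
    fp = sum(1 for g, p in pairs if g != positive and p == positive)
    fn = sum(1 for g, p in pairs if g == positive and p != positive)
    if tp == 0:
        # No true positives: precision and recall are zero or undefined.
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Hypothetical toy labels, for illustration only.
gold = [1, 0, 1, 1, 0, 0]
pred = [1, 0, 0, 1, 1, 0]
score = f1_score(gold, pred)
print(f"F1 = {score:.3f}")
```

Under this reading, a gain of 12.8 F1 points would be a difference of 0.128 between two such scores on the same evaluation set.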
