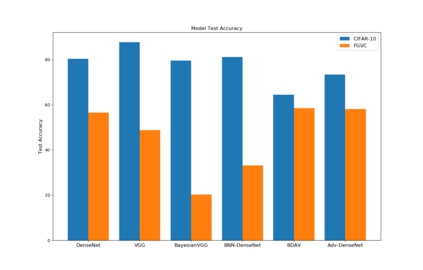

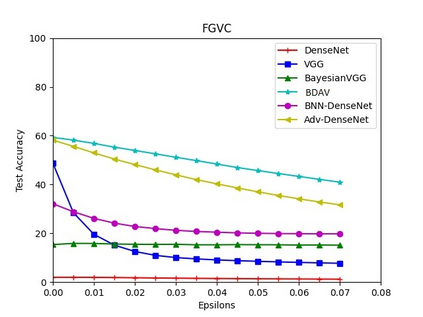

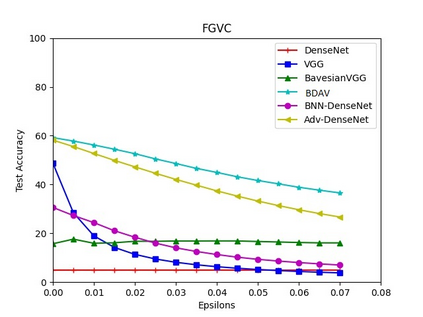

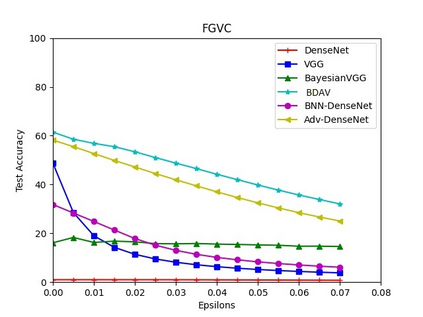

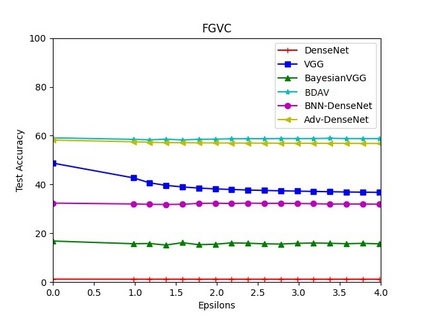

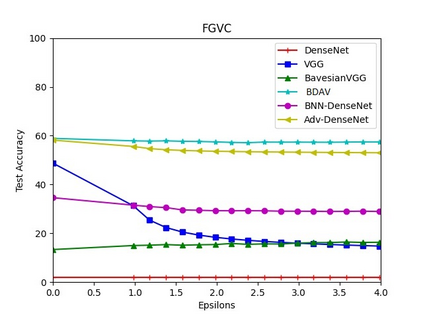

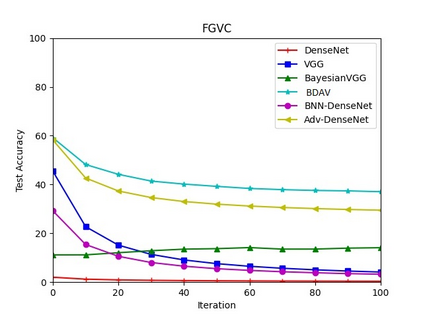

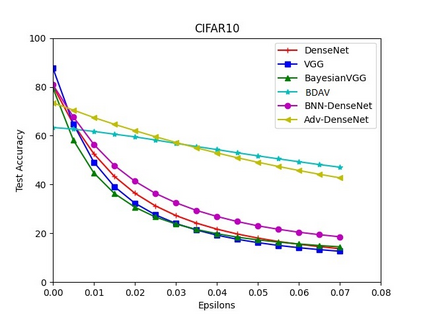

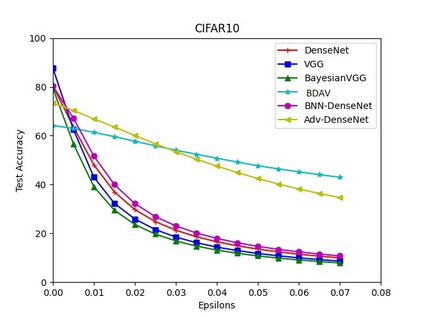

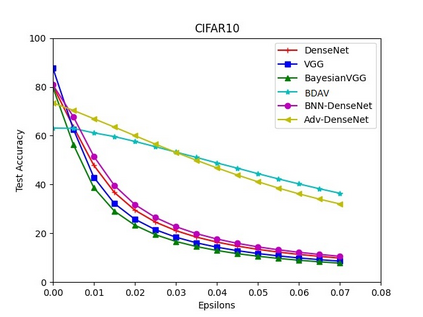

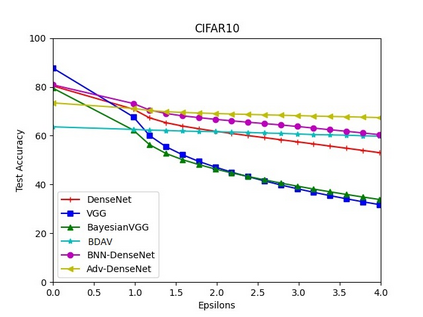

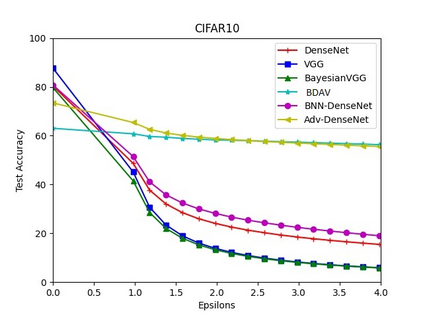

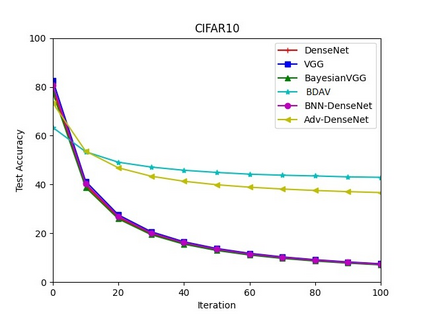

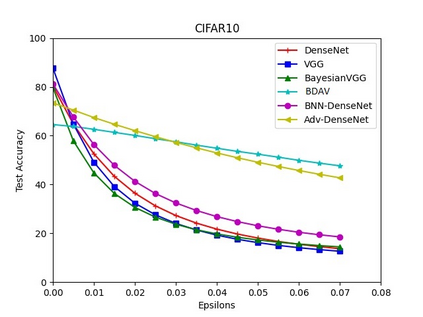

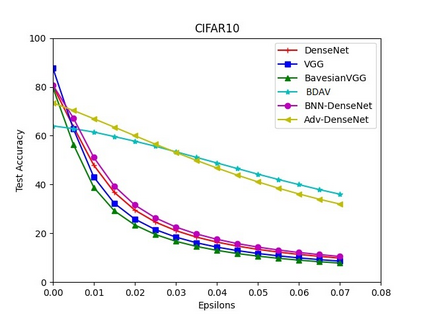

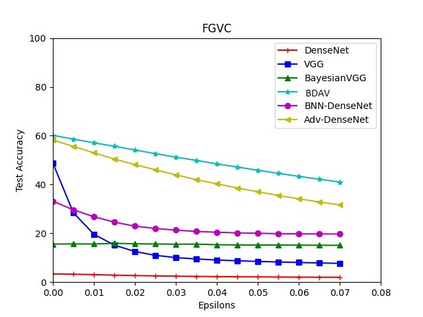

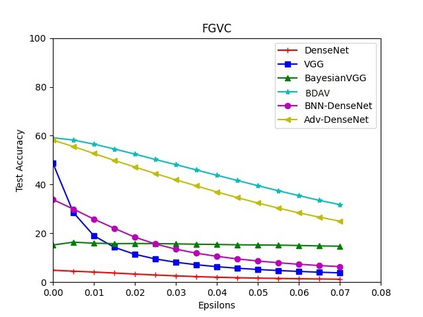

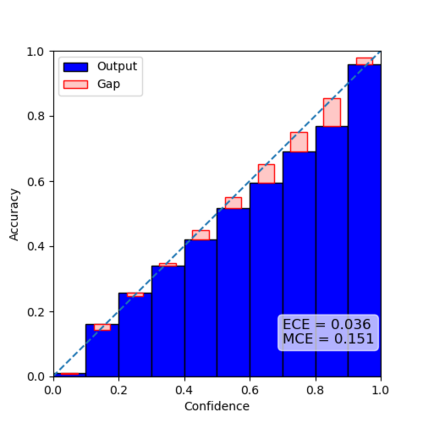

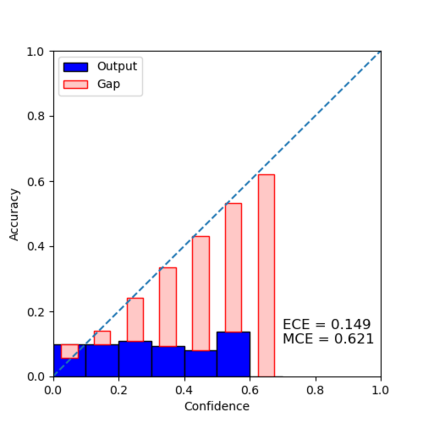

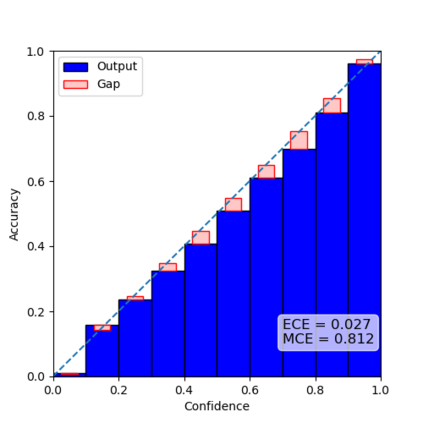

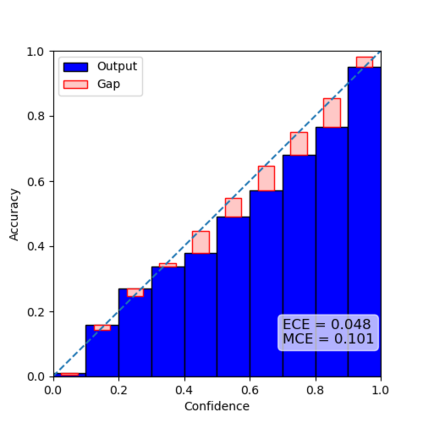

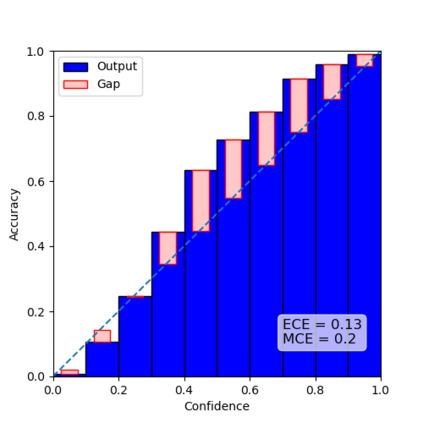

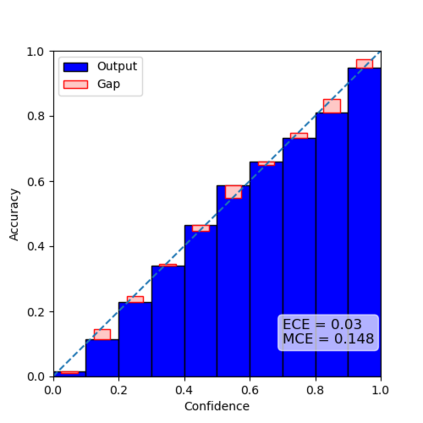

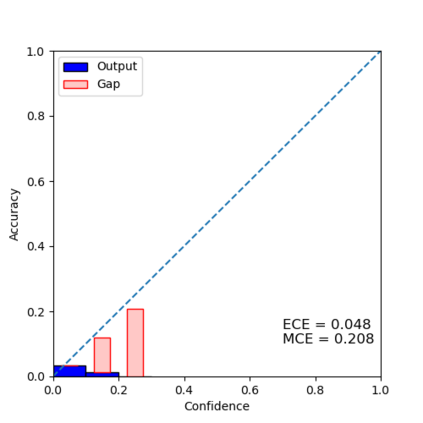

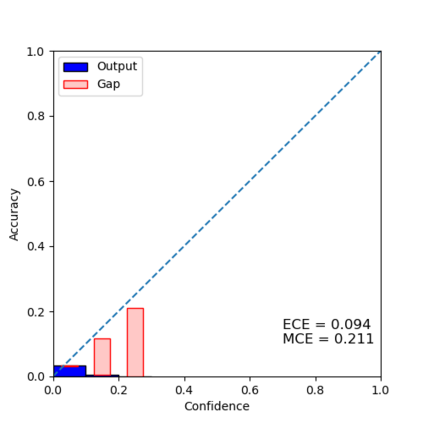

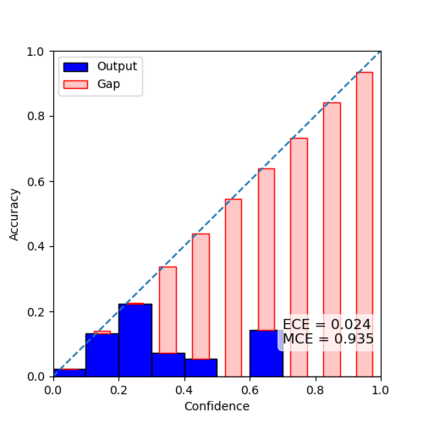

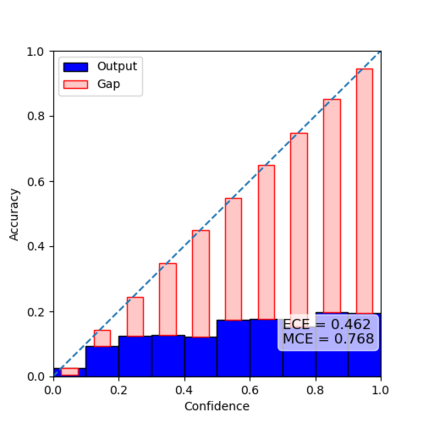

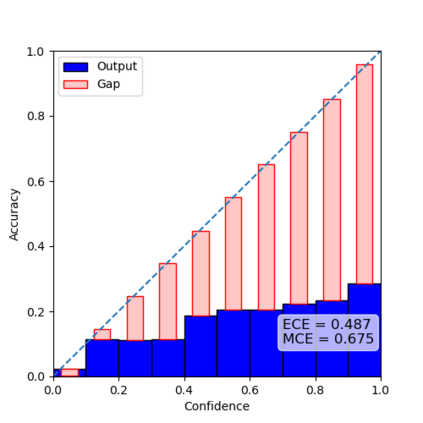

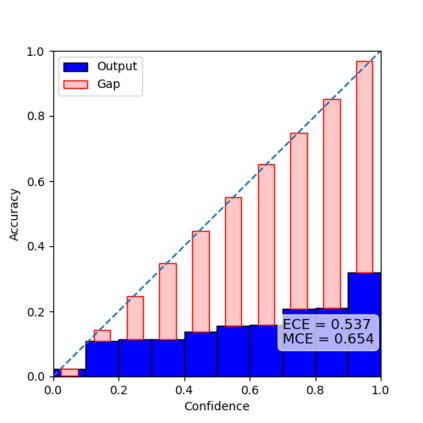

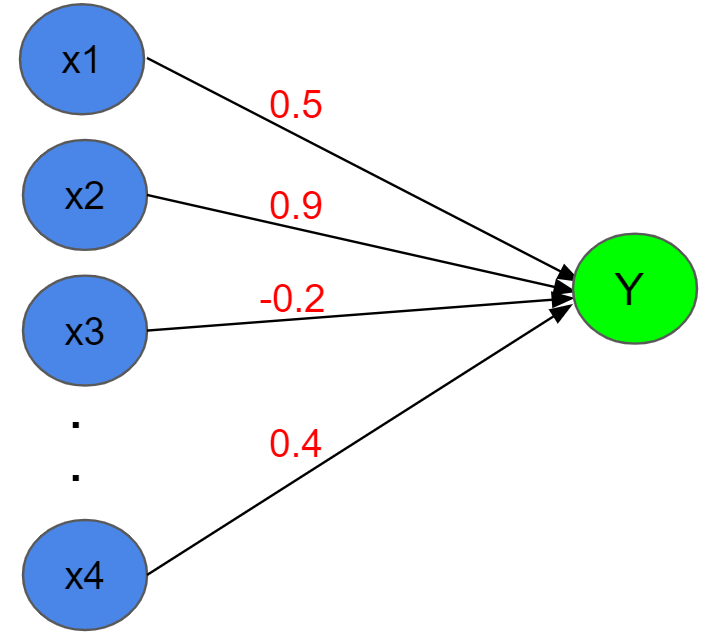

Bayesian neural networks (BNNs), unlike traditional neural networks (TNNs), are robust and adept at handling adversarial attacks because they incorporate randomness. This randomness also improves the estimation of uncertainty, a capability that TNNs lack. We therefore investigate the robustness of BNNs to white-box attacks using multiple Bayesian neural architectures. We also create our own BNN model, called BNN-DenseNet, by fusing Bayesian inference (i.e., variational Bayes) with the DenseNet architecture, and a second model, BDAV, by combining this intervention with adversarial training. Experiments are conducted on the CIFAR-10 and FGVC-Aircraft datasets. We attack our models with strong white-box attacks ($l_\infty$-FGSM, $l_\infty$-PGD, $l_2$-PGD, EOT $l_\infty$-FGSM, and EOT $l_\infty$-PGD). In all experiments, at least one BNN outperforms the traditional neural networks under adversarial attack. An adversarially trained BNN outperforms its non-Bayesian, adversarially trained counterpart in most experiments, often by significant margins. Lastly, we investigate network calibration and find that BNNs do not make overconfident predictions, providing evidence that BNNs are also better at measuring uncertainty.
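To make the EOT $l_\infty$-FGSM attack named above concrete, here is a minimal NumPy sketch. It is an illustration under stated assumptions, not the paper's implementation: the stochastic model is a toy logistic classifier whose weights are drawn from a Gaussian (a stand-in for a variational BNN), and the names `eot_fgsm_linf`, `w_mean`, `w_std`, `eps`, and `n_samples` are hypothetical. The idea shown is that expectation over transformation (EOT) averages the input gradient over several random weight draws before taking the single signed step of FGSM.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss_grad_wrt_input(x, y, w, b):
    """Gradient of the binary cross-entropy loss w.r.t. the input x
    for a logistic model p = sigmoid(x . w + b)."""
    p = sigmoid(x @ w + b)
    return (p - y) * w

def eot_fgsm_linf(x, y, w_mean, w_std, b, eps, n_samples, rng):
    """One-step l_inf FGSM where the gradient is averaged over
    sampled weights (EOT). Toy stand-in for a BNN, not the paper's model."""
    grad = np.zeros_like(x)
    for _ in range(n_samples):
        # Assumption: weights follow an independent Gaussian posterior.
        w = w_mean + w_std * rng.standard_normal(w_mean.shape)
        grad += loss_grad_wrt_input(x, y, w, b)
    grad /= n_samples
    # Signed step of size eps, then clip to the valid input range [0, 1].
    x_adv = x + eps * np.sign(grad)
    return np.clip(x_adv, 0.0, 1.0)

rng = np.random.default_rng(0)
x = rng.uniform(0.2, 0.8, size=8)   # toy "image" with 8 pixels
w_mean = rng.standard_normal(8)
w_std = 0.1 * np.ones(8)
eps = 0.05
x_adv = eot_fgsm_linf(x, 1.0, w_mean, w_std, 0.0, eps, 32, rng)
```

Setting `n_samples=1` gives plain FGSM against a single random draw of the network. Repeating the signed step with a smaller step size, and projecting back into the `eps`-ball around `x` after each step, would give the PGD variant.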
