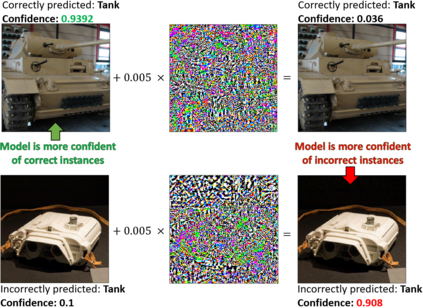

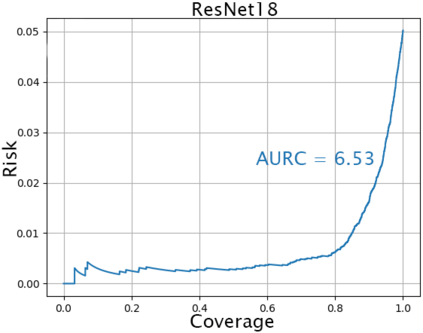

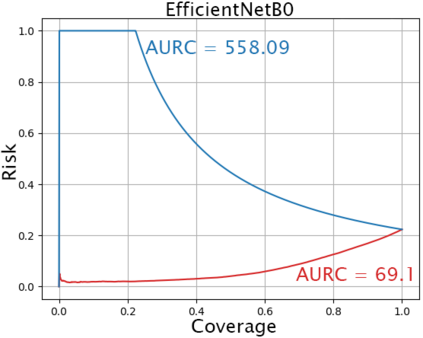

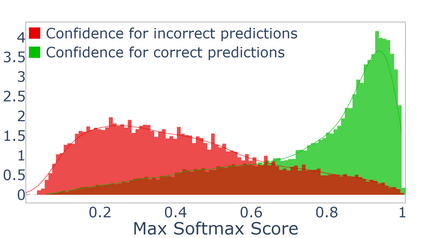

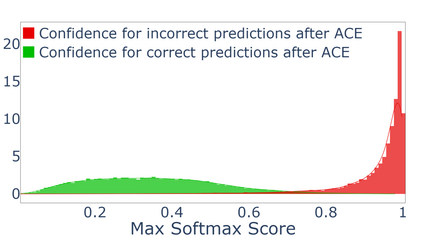

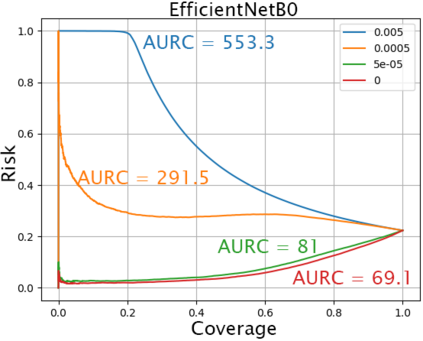

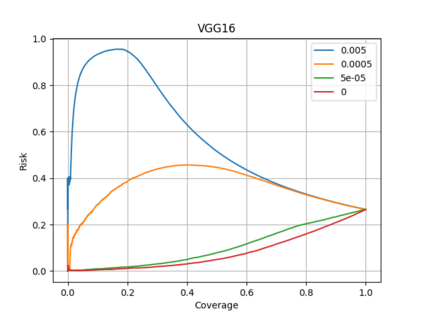

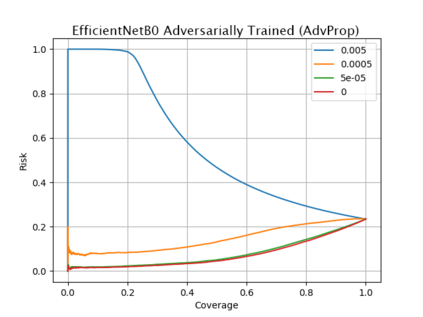

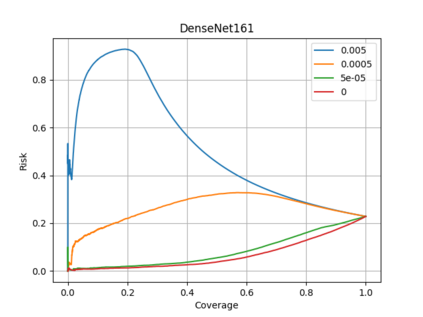

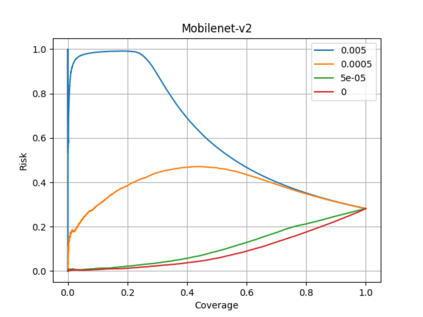

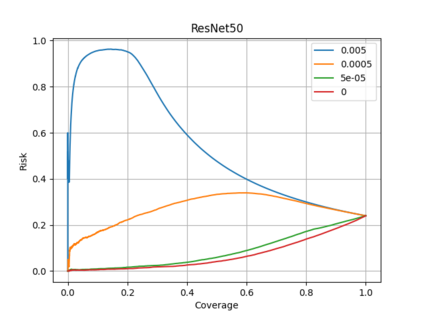

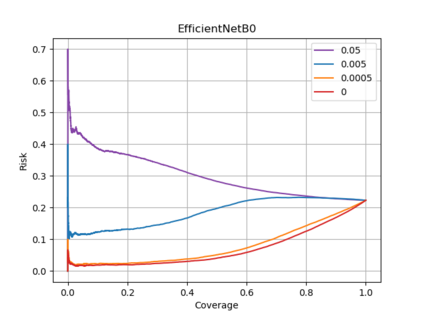

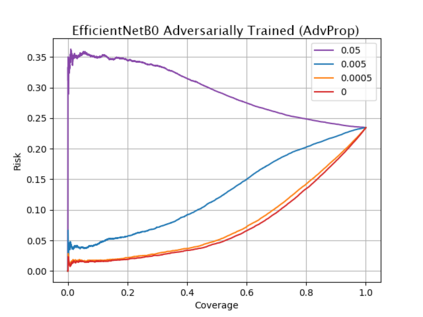

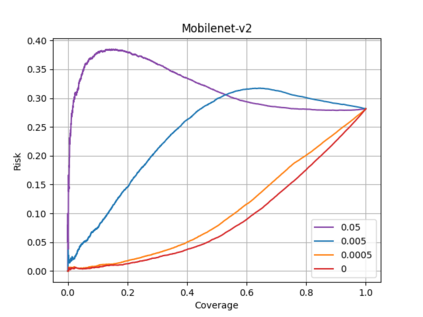

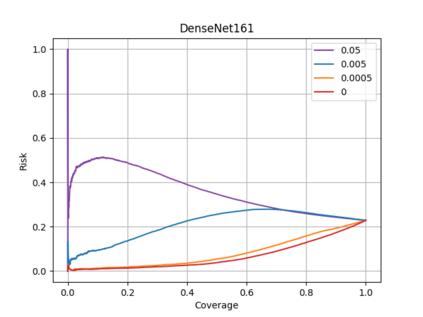

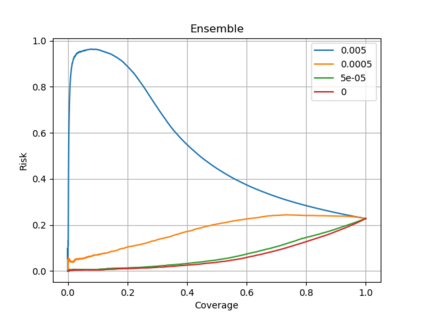

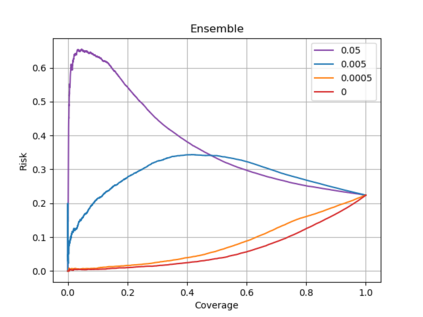

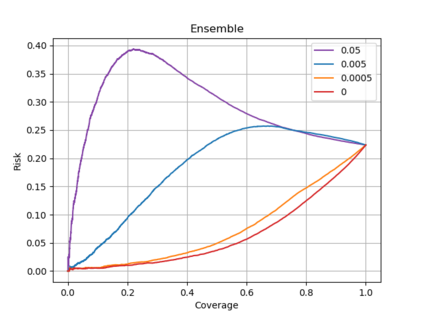

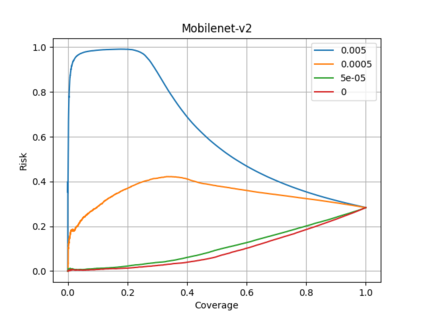

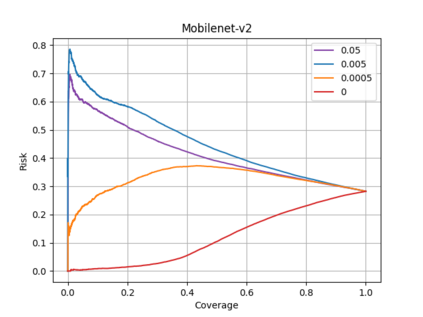

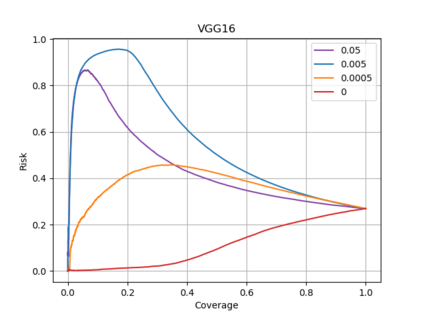

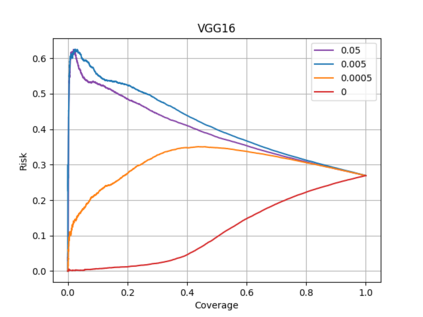

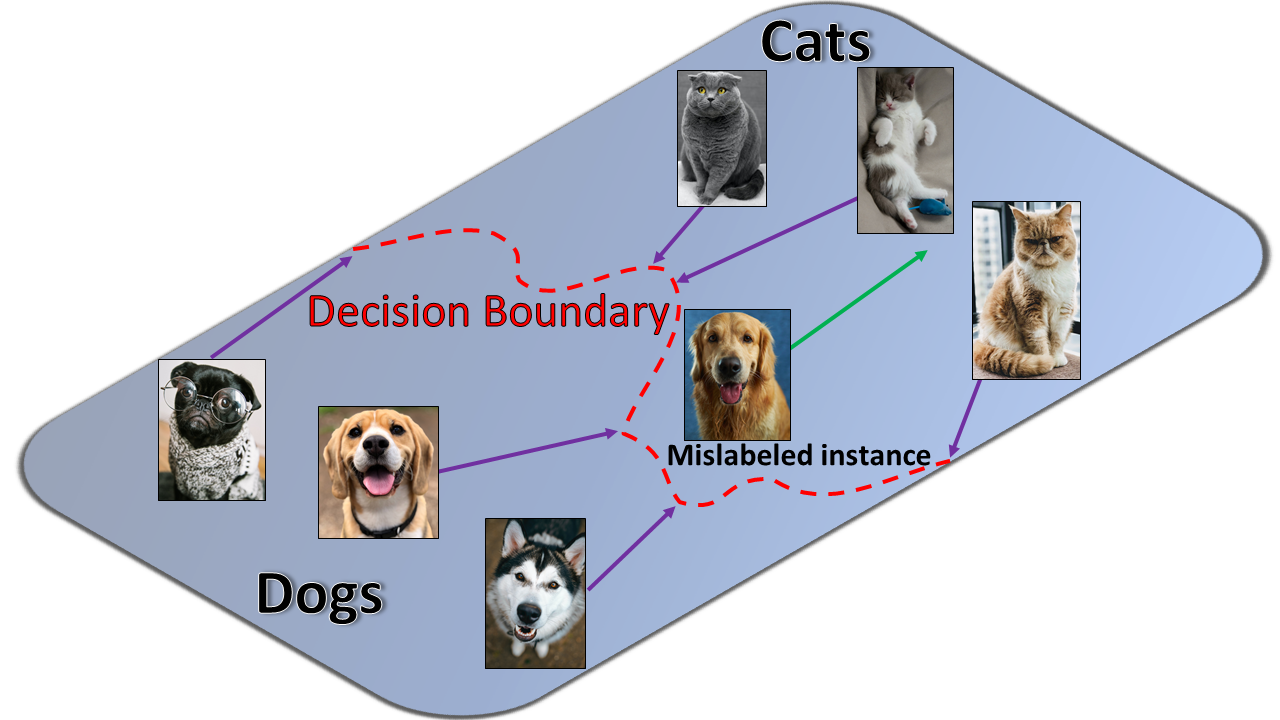

Deep neural networks (DNNs) have proven to be powerful predictors and are widely used for various tasks. Credible uncertainty estimation of their predictions, however, is crucial for their deployment in many risk-sensitive applications. In this paper we present a novel and simple attack which, unlike adversarial attacks, does not cause incorrect predictions but instead cripples the network's capacity for uncertainty estimation. As a result, after the attack the DNN is more confident in its incorrect predictions than in its correct ones, while its accuracy is not reduced. We present two versions of the attack: the first targets a black-box regime (where the attacker has no knowledge of the target network) and the second targets a white-box setting. Perturbations of minuscule magnitude suffice to cause severe damage to uncertainty estimation, and larger magnitudes render the uncertainty estimates completely unusable. We demonstrate successful attacks on three of the most popular uncertainty estimation methods: the vanilla softmax score, Deep Ensembles, and MC-Dropout. Additionally, we show an attack on SelectiveNet, the selective classification architecture. We test the proposed attack on several contemporary architectures such as MobileNetV2 and EfficientNetB0, all trained to classify ImageNet.
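To make the claimed effect concrete, the following is a minimal sketch of how one might measure it for the vanilla softmax score. It is not the attack itself and does not reproduce any result from the paper: the logits are invented toy values, the "attacked" logits are hand-written rather than produced by any perturbation, and the helper names (`softmax`, `confidence_auroc`) are hypothetical. The metric used here, the AUROC of confidence for separating correct from incorrect predictions, is one common way to score uncertainty ranking and is an assumption about evaluation, not a statement of the paper's protocol.

```python
import numpy as np

def softmax(logits):
    """Row-wise softmax with the usual max-subtraction for numerical stability."""
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def confidence_auroc(confidence, correct):
    """Probability that a random correct prediction receives higher confidence
    than a random incorrect one (ties count as one half)."""
    pos = confidence[correct]
    neg = confidence[~correct]
    if len(pos) == 0 or len(neg) == 0:
        raise ValueError("need at least one correct and one incorrect prediction")
    diff = pos[:, None] - neg[None, :]
    return float((diff > 0).mean() + 0.5 * (diff == 0).mean())

def evaluate(logits, labels):
    """Return (accuracy, AUROC of softmax confidence) for a batch of logits."""
    probs = softmax(logits)
    pred = probs.argmax(axis=1)
    conf = probs.max(axis=1)          # vanilla softmax score
    correct = pred == labels
    return float(correct.mean()), confidence_auroc(conf, correct)

# Toy data (assumption): 4 samples, 3 classes; samples 0 and 1 are classified correctly.
labels = np.array([0, 0, 1, 2])
clean_logits = np.array([
    [4.0, 0.0, 0.0],   # correct, high confidence
    [3.0, 0.0, 0.0],   # correct, high confidence
    [1.0, 0.5, 0.0],   # incorrect, low confidence
    [0.6, 0.5, 0.0],   # incorrect, low confidence
])
# Hand-written stand-in for post-attack outputs (assumption): every argmax is
# unchanged, but the confidence ordering of correct and incorrect samples is flipped.
attacked_logits = np.array([
    [0.6, 0.5, 0.0],
    [1.0, 0.5, 0.0],
    [3.0, 0.0, 0.0],
    [4.0, 0.0, 0.0],
])

clean_acc, clean_auroc = evaluate(clean_logits, labels)
attacked_acc, attacked_auroc = evaluate(attacked_logits, labels)
print(clean_acc, clean_auroc)        # accuracy 0.5, AUROC 1.0
print(attacked_acc, attacked_auroc)  # accuracy 0.5, AUROC 0.0
```

In this toy setting the accuracy is identical before and after, while the AUROC drops from 1.0 to 0.0; any value below 0.5 means the model ranks its incorrect predictions as more confident than its correct ones, which is the failure mode the abstract describes.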
