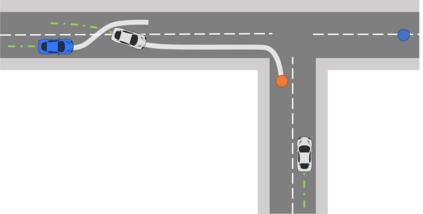

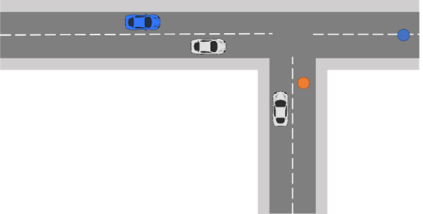

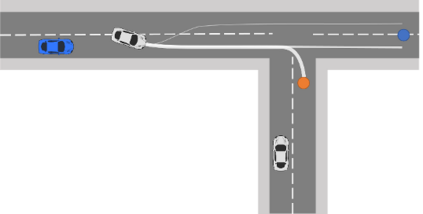

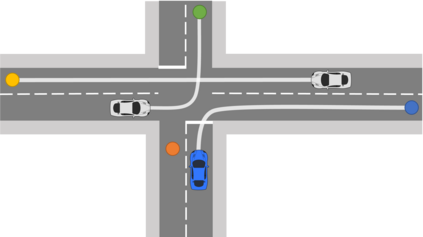

Inscrutable AI systems are difficult to trust, especially when they operate in safety-critical settings such as autonomous driving. There is therefore a need for transparent and queryable systems that increase trust. We propose a transparent, human-centric explanation generation method for autonomous vehicle motion planning and prediction, built on an existing white-box system called IGP2. Our method integrates Bayesian networks with context-free generative rules and can give causal natural-language explanations for the high-level driving behaviour of autonomous vehicles. Preliminary testing on simulated scenarios shows that our method captures the causes behind the actions of autonomous vehicles and generates intelligible explanations of varying complexity.
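To make the combination of a Bayesian network with context-free generative rules more concrete, here is a minimal toy sketch in Python. It is not the authors' implementation and does not use IGP2's API. The two causes, the probabilities, the phrases, and every function name are hypothetical values chosen for illustration. The sketch computes the posterior of each candidate cause given an observed lane change, then expands a small grammar (one production per nonterminal) into an explanation whose length depends on how many causes are included.

```python
from itertools import product

# Hypothetical toy network: two binary causes -> one observed action.
# All probabilities are made up for illustration.
PRIOR = {"slow_vehicle_ahead": 0.3, "exit_upcoming": 0.2}

# P(action = "change lane" | slow_vehicle_ahead, exit_upcoming)
CPT = {
    (True, True): 0.95,
    (True, False): 0.80,
    (False, True): 0.60,
    (False, False): 0.05,
}

# Hypothetical surface phrases for each cause.
CAUSE_PHRASES = {
    "slow_vehicle_ahead": "the vehicle ahead was slow",
    "exit_upcoming": "its exit was coming up",
}


def posterior_causes():
    """Return P(cause = True | action observed) by exhaustive enumeration."""
    names = list(PRIOR)
    joint = {}
    for values in product([True, False], repeat=len(names)):
        p = CPT[values]
        for name, value in zip(names, values):
            p *= PRIOR[name] if value else 1 - PRIOR[name]
        joint[values] = p
    evidence = sum(joint.values())
    return {
        name: sum(p for vals, p in joint.items() if vals[i]) / evidence
        for i, name in enumerate(names)
    }


def expand(symbol, rules):
    """Recursively expand a symbol; anything without a rule is a terminal."""
    if symbol not in rules:
        return symbol
    return " ".join(expand(s, rules) for s in rules[symbol])


def explain(max_causes=1):
    """Build an explanation from the max_causes most probable causes."""
    post = posterior_causes()
    ranked = sorted(post, key=post.get, reverse=True)[:max_causes]
    rules = {
        "S": ["ACTION", "because", "CAUSES"],
        "ACTION": ["the vehicle changed lanes"],
        "CAUSES": [" and ".join(CAUSE_PHRASES[c] for c in ranked)],
    }
    return expand("S", rules) + "."


if __name__ == "__main__":
    print(explain(max_causes=1))
    print(explain(max_causes=2))
```

Raising `max_causes` gives a longer explanation that cites more of the ranked causes, which is one simple way to vary explanation complexity. A real system would derive the network structure and probabilities from the planner rather than hard-coding them.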
