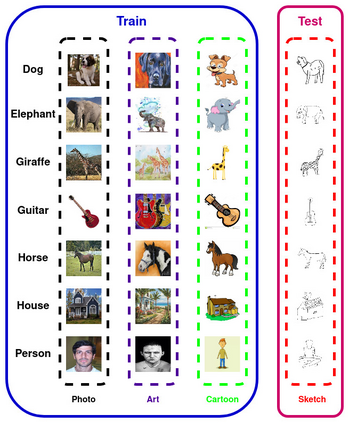

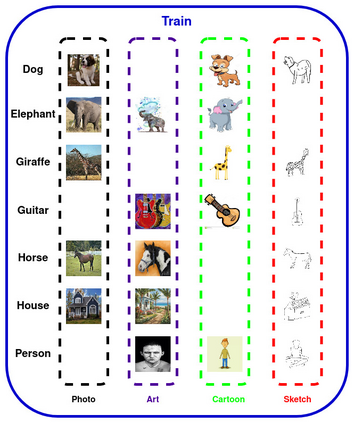

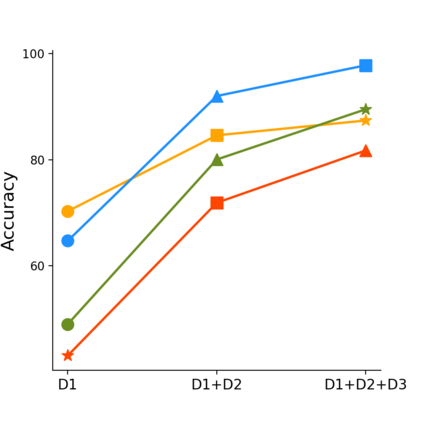

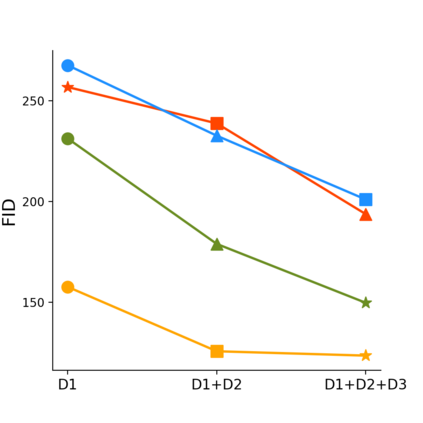

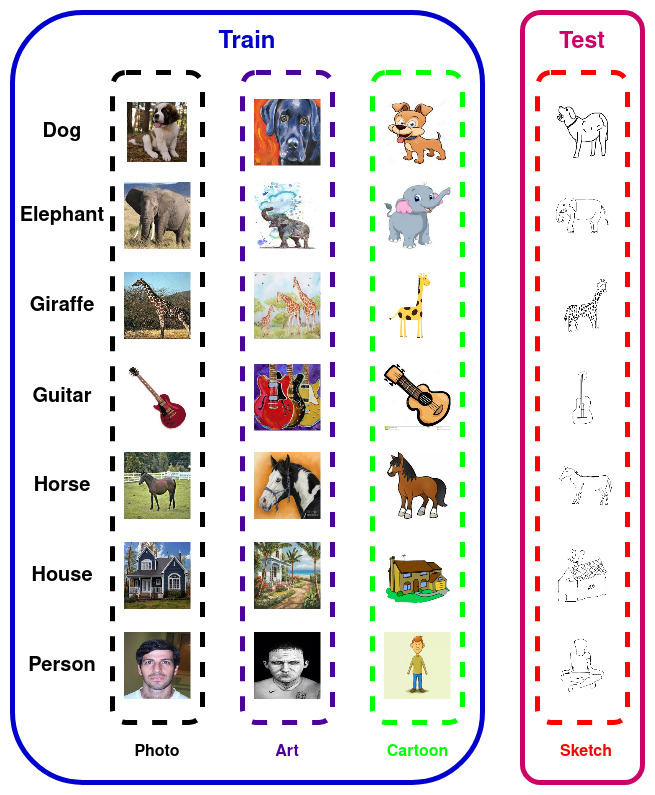

Given that neural networks generalize unreasonably well in the IID setting (exhibiting benign overfitting and improving in performance as the number of parameters grows), out-of-distribution (OOD) generalization presents a consistent failure case that can help us better understand how they learn. This paper focuses on Domain Generalization (DG), which is perceived as the flagship setting of OOD generalization. We find that the presence of multiple training domains incentivizes domain-agnostic learning and is the primary reason for generalization in Traditional DG (TDG). We show that state-of-the-art results can be obtained by borrowing ideas from IID generalization, and that DG-tailored methods fail to add any performance gains. Furthermore, we explore beyond the TDG formulation and propose a novel ClassWise DG (CWDG) benchmark, where, for each class, we randomly select one of the domains and keep it aside for testing. Even though models are exposed to all domains during training, CWDG evaluation is more challenging than TDG evaluation. We propose a novel iterative domain feature masking approach that achieves state-of-the-art results on the CWDG benchmark. Overall, in explaining these observations, our work offers further insight into the learning mechanisms of neural networks.
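The CWDG protocol described in the abstract can be illustrated as a data-split procedure. The sketch below is a minimal illustration, not the authors' code: it assumes each sample is a `(sample_id, class_label, domain)` tuple and that the held-out domain is drawn uniformly at random per class. The function name `classwise_dg_split` and the toy domain names are hypothetical.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

# Hypothetical record layout: (sample_id, class_label, domain_name).
Sample = Tuple[int, str, str]


def classwise_dg_split(
    samples: Sequence[Sample], seed: int = 0
) -> Tuple[List[Sample], List[Sample], Dict[str, str]]:
    """Sketch of a ClassWise DG (CWDG) split (assumed procedure).

    For each class, pick one domain uniformly at random and hold out every
    sample of that (class, domain) pair for testing; all remaining samples
    form the training set.
    """
    rng = random.Random(seed)

    # Collect the set of domains observed for each class.
    domains_per_class: Dict[str, set] = defaultdict(set)
    for _, label, domain in samples:
        domains_per_class[label].add(domain)

    # Sort before sampling so the split is reproducible for a fixed seed.
    held_out: Dict[str, str] = {
        label: rng.choice(sorted(domains_per_class[label]))
        for label in sorted(domains_per_class)
    }

    train = [s for s in samples if held_out[s[1]] != s[2]]
    test = [s for s in samples if held_out[s[1]] == s[2]]
    return train, test, held_out


if __name__ == "__main__":
    # Toy data: 2 classes x 3 domains, 2 samples per (class, domain) cell.
    classes = ["dog", "cat"]
    domains = ["photo", "art", "sketch"]
    toy: List[Sample] = []
    for c in classes:
        for d in domains:
            for _ in range(2):
                toy.append((len(toy), c, d))

    train, test, held_out = classwise_dg_split(toy, seed=0)
    print("held-out domain per class:", held_out)
    # A domain is missing from training only if every class drew it.
    print("domains seen in training:", sorted({d for _, _, d in train}))
```

Unlike the leave-one-domain-out split typically used for TDG, which removes an entire domain for all classes, this split removes only one (class, domain) cell per class, so with several classes each domain usually still appears in training through the other classes.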
