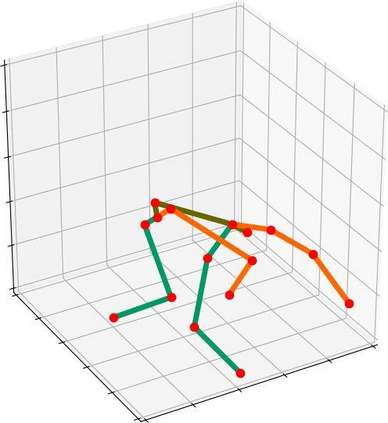

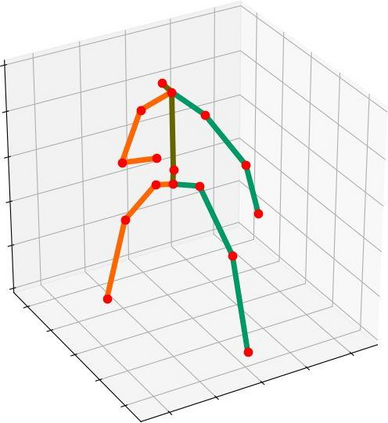

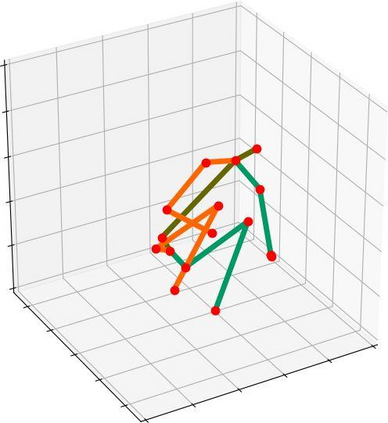

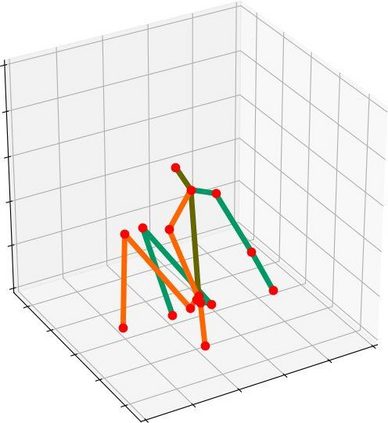

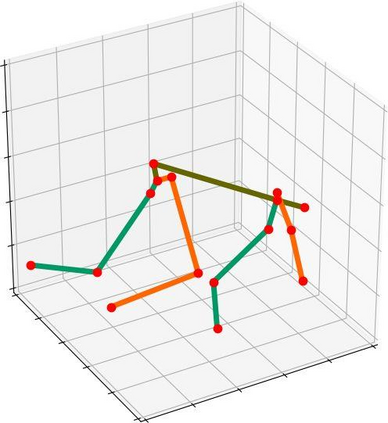

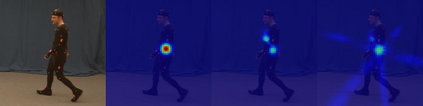

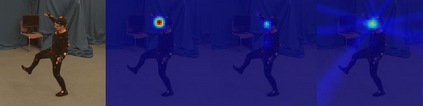

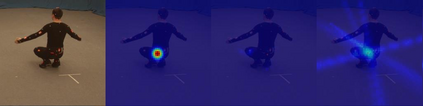

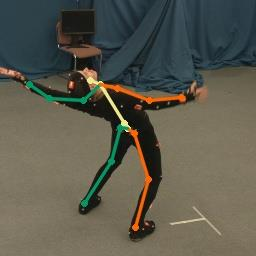

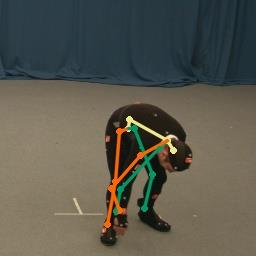

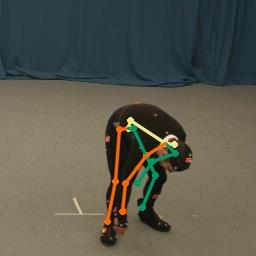

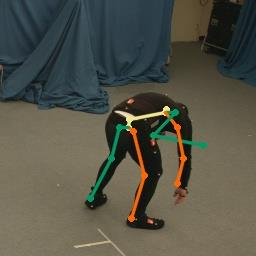

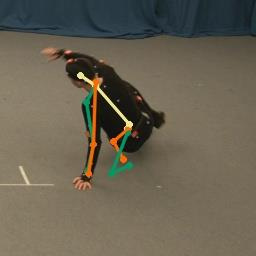

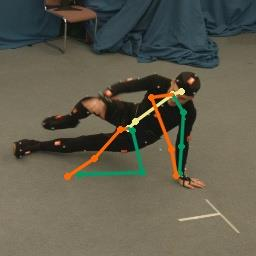

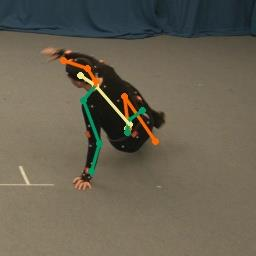

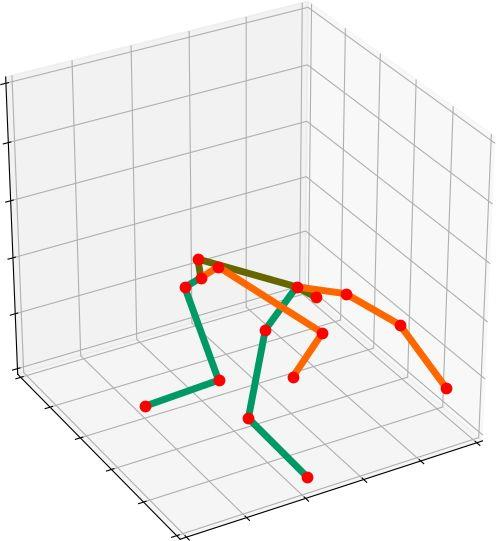

We propose to estimate 3D human pose from multi-view images and a few IMUs attached to a person's limbs. The method first detects 2D poses from the two signals and then lifts them to 3D space. We present a geometric approach that uses the IMU-measured limb orientations to reinforce the visual features of each pair of linked joints. This notably improves 2D pose estimation accuracy, especially when one joint is occluded. We call this approach the Orientation Regularized Network (ORN). We then lift the multi-view 2D poses to 3D space with an Orientation Regularized Pictorial Structure Model (ORPSM), which jointly minimizes the projection error between the 3D and 2D poses and the discrepancy between the 3D pose and the IMU orientations. This simple two-step approach reduces the error of the previous state of the art by a large margin on a public dataset. Our code will be released at https://github.com/CHUNYUWANG/imu-human-pose-pytorch.
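
To make the ORN idea more concrete, the NumPy sketch below fuses the heatmap of one joint (J1) into the heatmap of a linked joint (J2) for a single view. It is a simplified illustration under our own assumptions, not the authors' implementation: the function names are hypothetical, the IMU limb direction `limb_dir` is assumed to be already rotated into the camera frame, the limb length `limb_len` and a discrete set of `depths` are assumed known, the intrinsics `K` are assumed to be expressed in heatmap pixel units, and taking the max over depth hypotheses and mixing with weight `lam` are choices of this sketch. For every pixel of J2's heatmap and every depth hypothesis, it back-projects the pixel to 3D, steps back along the limb to where J1 would be, projects that point into the image, and reads J1's heatmap there.

```python
import numpy as np


def project(K, X):
    """Project camera-frame 3D points X (N, 3) to pixel coordinates (N, 2)."""
    x = X @ K.T
    return x[:, :2] / x[:, 2:3]


def backproject(K, uv, depth):
    """Lift pixels uv (N, 2) to camera-frame 3D points (N, 3) at the given depth."""
    ones = np.ones((uv.shape[0], 1))
    rays = np.hstack([uv, ones]) @ np.linalg.inv(K).T  # rays normalised to z = 1
    return rays * depth


def fuse_heatmaps(H1, H2, K, limb_dir, limb_len, depths, lam=0.5):
    """Hypothetical ORN-style fusion: reinforce joint J2's heatmap H2 using joint
    J1's heatmap H1 and the IMU limb direction J1 -> J2 (camera frame)."""
    h, w = H2.shape
    vs, us = np.mgrid[0:h, 0:w]
    uv2 = np.stack([us.ravel(), vs.ravel()], axis=1).astype(float)
    d = limb_dir / np.linalg.norm(limb_dir)  # unit limb direction
    best = np.zeros(h * w)
    for z in depths:
        # Assume J2 sits at depth z on each pixel's ray, then step back to J1.
        X1 = backproject(K, uv2, z) - limb_len * d
        valid = X1[:, 2] > 0  # J1 must lie in front of the camera
        uv1 = np.full_like(uv2, -1.0)
        uv1[valid] = project(K, X1[valid])
        uv1 = np.clip(uv1, -1, max(h, w))  # avoid overflow before the int cast
        iu = np.rint(uv1[:, 0]).astype(int)
        iv = np.rint(uv1[:, 1]).astype(int)
        inside = valid & (iu >= 0) & (iu < w) & (iv >= 0) & (iv < h)
        resp = np.zeros(h * w)
        resp[inside] = H1[iv[inside], iu[inside]]  # nearest-neighbour lookup
        best = np.maximum(best, resp)  # keep the strongest depth hypothesis
    return lam * H2 + (1 - lam) * best.reshape(h, w)
```

Pulling a value from `H1` for each target pixel, rather than scattering `H1` forward, keeps every output pixel well defined. When J2 is occluded and `H2` is flat, the fused map can still peak where the limb orientation places J2 relative to a confidently detected J1.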

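The ORPSM objective combines image evidence and IMU evidence in one score. A minimal energy of that kind, written under our own assumptions, is

$$E(Y) = \sum_{v}\sum_{j} \left\lVert \pi_v(Y_j) - y_{v,j} \right\rVert^2 + \lambda \sum_{(a,b)} \left(1 - \frac{(Y_b - Y_a)^\top d_{ab}}{\lVert Y_b - Y_a\rVert\,\lVert d_{ab}\rVert}\right),$$

where $Y_j$ is the 3D location of joint $j$, $\pi_v$ projects into view $v$, $y_{v,j}$ is the detected 2D location of joint $j$ in view $v$, and $d_{ab}$ is the IMU direction of limb $(a,b)$. The squared reprojection error, the cosine-based orientation term, the weight $\lambda$ (`weight` below) and all helper names are assumptions for illustration. A pictorial structure model typically scores heatmap responses over a discretized 3D space rather than point detections; `select_joint` only mimics that discrete search for a single joint by brute force over a candidate list.

```python
import numpy as np


def project(P, X):
    """Project world-frame 3D points X (N, 3) with a 3x4 camera matrix P to pixels (N, 2)."""
    Xh = np.hstack([X, np.ones((X.shape[0], 1))])
    x = Xh @ P.T
    return x[:, :2] / x[:, 2:3]


def orientation_energy(pose3d, poses2d, cams, limbs, imu_dirs, weight=1.0):
    """Hypothetical ORPSM-style energy: multi-view reprojection error plus
    the discrepancy between 3D limb directions and IMU limb directions."""
    # Projection term: squared pixel distance to the 2D detections in every view.
    proj = sum(np.sum((project(P, pose3d) - p2d) ** 2) for P, p2d in zip(cams, poses2d))
    # Orientation term: 1 - cosine similarity for each limb (a, b) with an IMU.
    orient = 0.0
    for (a, b), d in zip(limbs, imu_dirs):
        limb = pose3d[b] - pose3d[a]
        cos_sim = limb @ d / (np.linalg.norm(limb) * np.linalg.norm(d))
        orient += 1.0 - cos_sim
    return proj + weight * orient


def select_joint(pose3d, joint, candidates, *energy_args, **energy_kwargs):
    """Brute-force search over candidate 3D locations for one joint,
    keeping the other joints fixed; returns (best_location, best_energy)."""
    best, best_e = None, np.inf
    for c in candidates:
        trial = np.array(pose3d, dtype=float)  # copy so the input is untouched
        trial[joint] = c
        e = orientation_energy(trial, *energy_args, **energy_kwargs)
        if e < best_e:
            best, best_e = np.asarray(c, dtype=float), e
    return best, best_e
```

Because the orientation term depends only on limb directions, it contributes independently of the image evidence, which is why such a term can help most when a joint is poorly observed in the views.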