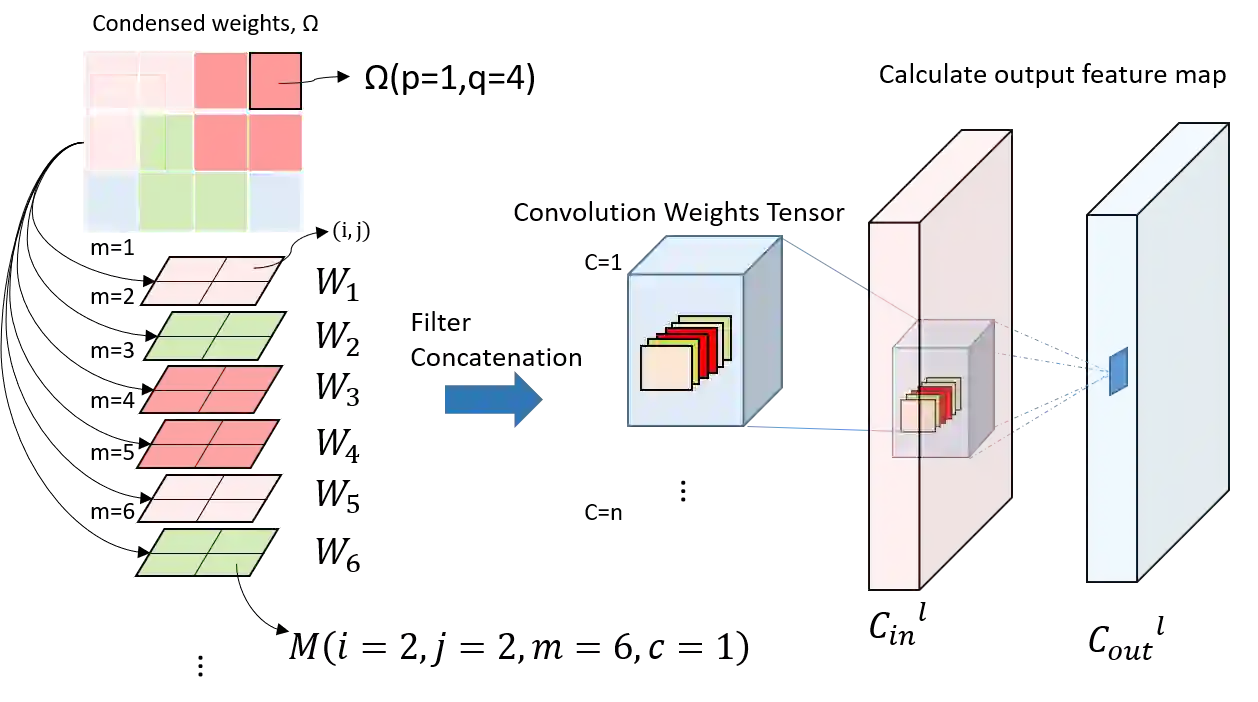

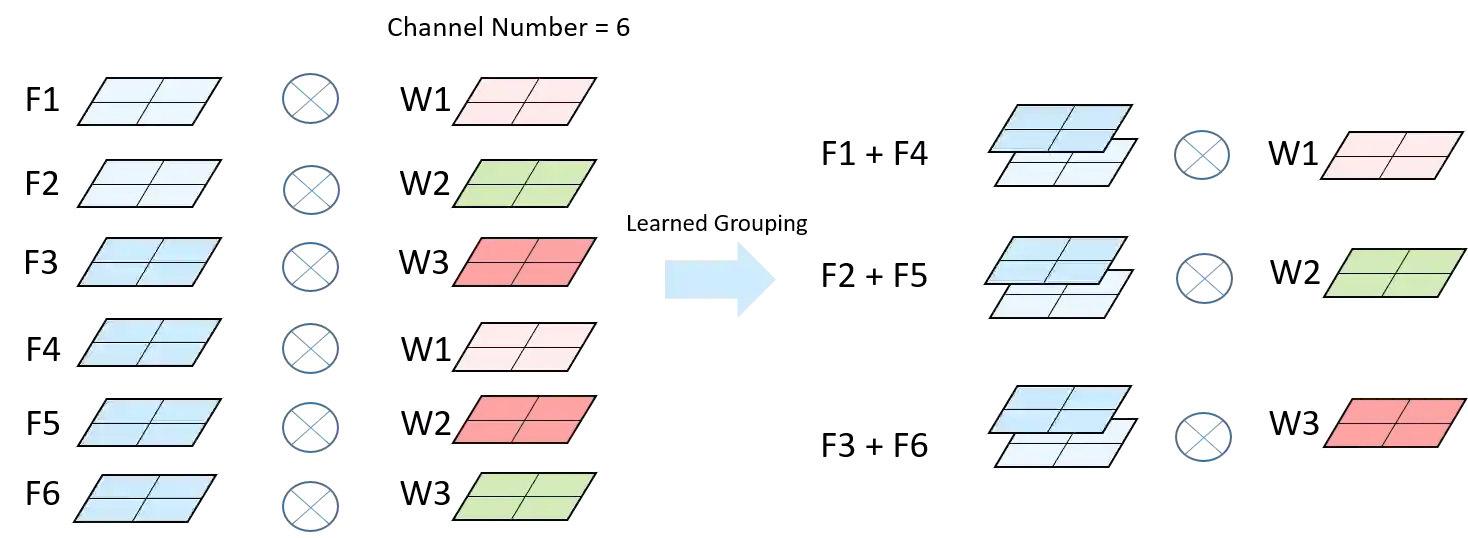

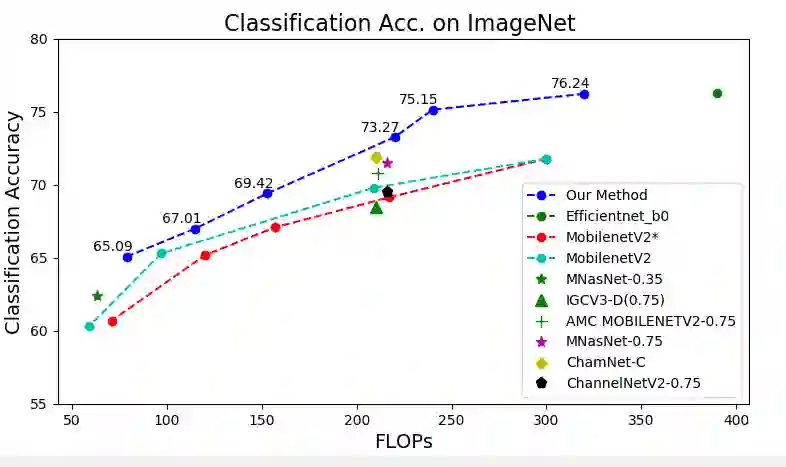

The recent WSNet [1] is a new model compression method that samples filter weights from a compact set and has been shown to be effective for 1D convolutional neural networks (CNNs). However, the weight sampling strategy of WSNet is handcrafted and fixed, which may severely limit the expressive ability of the resulting CNNs and weaken their compression ability. In this work, we present a novel auto-sampling method that is applicable to both 1D and 2D CNNs, with significant performance improvement over WSNet. Specifically, our proposed auto-sampling method learns the sampling rules end-to-end instead of designing them independently of the network architecture. With such differentiable weight sampling rule learning, the sampling stride and channel selection from the compact set are optimized to achieve a better trade-off between model compression rate and performance. We demonstrate that at the same compression ratio, our method outperforms WSNet by 6.5% on 1D convolution. Moreover, on ImageNet, our method outperforms the full MobileNetV2 model by 1.47% in classification accuracy with a 25% FLOPs reduction. With the same backbone architecture as the baseline models, our method even outperforms some neural architecture search (NAS) based methods such as AMC [2] and MNasNet [3].
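
To make the baseline concrete, here is a minimal Python sketch of a handcrafted, fixed-stride sampling rule in the spirit of WSNet [1]: each filter is a contiguous window of a shared 1D compact weight vector, and a stride smaller than the filter length makes neighbouring filters overlap and share weights. The function names, the single-channel setting, and the compression-ratio formula (independent weights divided by stored weights) are assumptions for illustration only. The sketch does not implement the learned, differentiable stride and channel selection proposed in this work.

```python
from typing import List

# Illustrative sketch only: function names and parameters are hypothetical,
# not taken from the WSNet or auto-sampling implementations.


def sample_filters(compact: List[float], num_filters: int,
                   filter_len: int, stride: int) -> List[List[float]]:
    """Fixed-stride sampling: filter i is the window
    compact[i*stride : i*stride + filter_len]. With stride < filter_len,
    neighbouring filters overlap and therefore share parameters."""
    if not 1 <= stride <= filter_len:
        raise ValueError("stride must satisfy 1 <= stride <= filter_len")
    needed = filter_len + (num_filters - 1) * stride
    if len(compact) < needed:
        raise ValueError(f"need at least {needed} compact weights, got {len(compact)}")
    return [compact[i * stride: i * stride + filter_len] for i in range(num_filters)]


def compression_ratio(num_filters: int, filter_len: int, stride: int) -> float:
    """Weights needed by independent filters divided by weights actually stored."""
    dense = num_filters * filter_len
    stored = filter_len + (num_filters - 1) * stride
    return dense / stored


def conv1d_valid(x: List[float], w: List[float]) -> List[float]:
    """Single-channel 'valid' 1D cross-correlation, as computed by a CNN layer."""
    k = len(w)
    return [sum(x[t + j] * w[j] for j in range(k)) for t in range(len(x) - k + 1)]


if __name__ == "__main__":
    compact = [float(i) for i in range(10)]  # 10 stored weights
    filters = sample_filters(compact, num_filters=4, filter_len=4, stride=2)
    print(filters)  # [[0.0, 1.0, 2.0, 3.0], [2.0, 3.0, 4.0, 5.0], [4.0, 5.0, 6.0, 7.0], [6.0, 7.0, 8.0, 9.0]]
    print(compression_ratio(4, 4, 2))  # 1.6
    x = [1.0, 0.0, -1.0, 0.0, 1.0]
    # Each sampled filter is then used like an ordinary convolution filter.
    print([conv1d_valid(x, f) for f in filters])
```

With 10 stored weights, four length-4 filters sampled at stride 2 would otherwise need 16 independent weights, a 1.6× reduction. In this fixed scheme the stride is chosen by hand; the auto-sampling method described above instead optimizes the sampling stride and channel selection end-to-end.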
