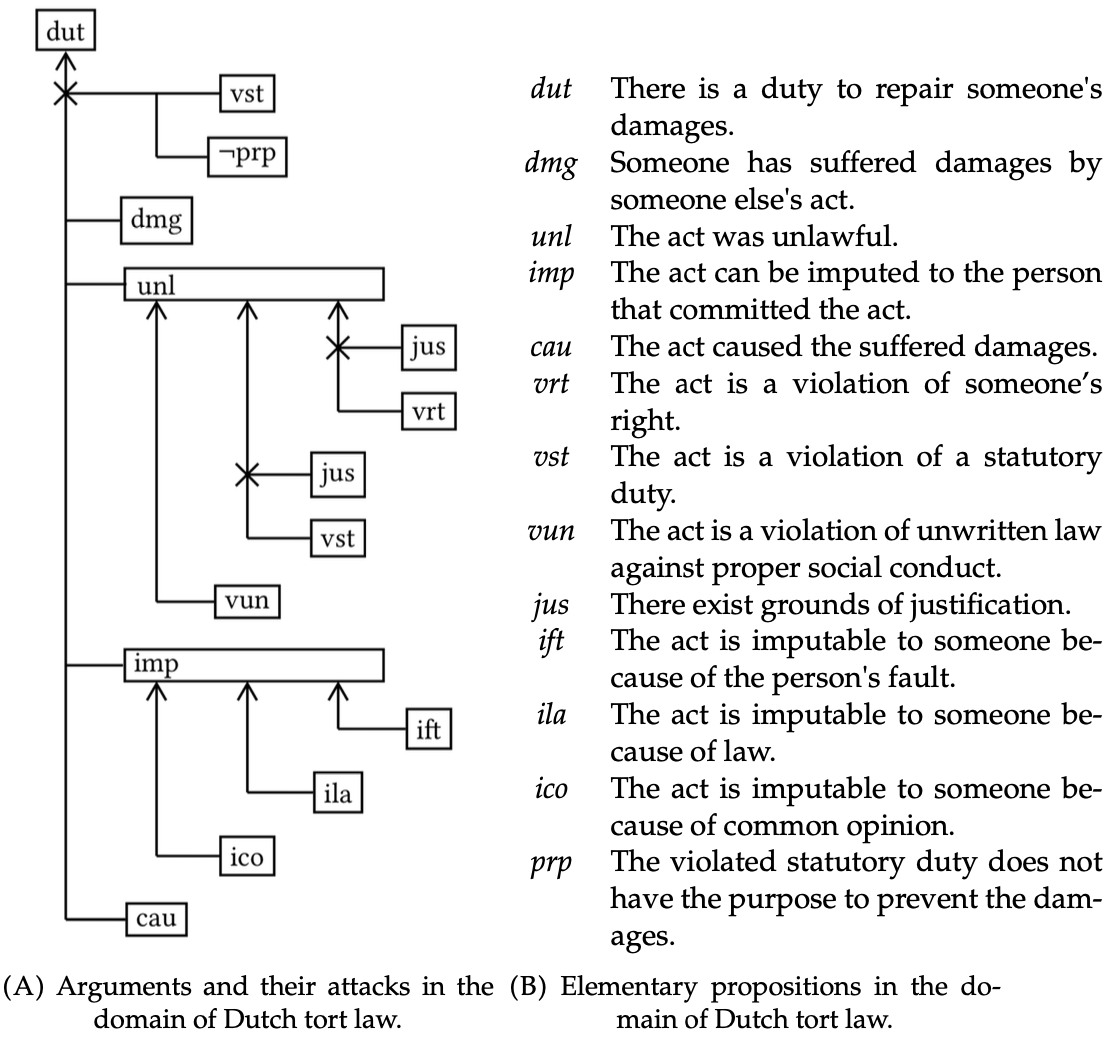

In AI and law, systems designed for decision support should be explainable when pursuing justice. To be fair and responsible, such systems should make correct decisions and make them using a sound and transparent rationale. In this paper, we introduce a knowledge-driven method for model-agnostic rationale evaluation using dedicated test cases, similar to unit testing in professional software development. We apply this new method in a set of machine learning experiments aimed at extracting known knowledge structures from artificial datasets that represent fictional and non-fictional legal settings. We show that our method allows us to analyze the rationale of black-box machine learning systems by assessing which rationale elements have or have not been learned. Furthermore, we show that the rationale can be adjusted using tailor-made training data based on the results of the rationale evaluation.
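The idea of testing a rationale like unit-testing code can be illustrated with a small sketch. Everything below is hypothetical and not taken from the paper: the toy two-condition rule, the element names, the hand-picked test cases, and the scoring scheme are assumptions chosen for illustration. The model is treated as a black box, that is, any callable from a case to a decision. Each rationale element gets its own test suite in which all other conditions are satisfied and only the element under test varies around its boundary. The model's agreement with the known rule on that suite indicates whether the element was learned. A second helper generates tailor-made training cases for elements that failed; retraining itself is left out.

```python
import random
from typing import Callable, Dict, List, Tuple

Case = Dict[str, int]
Model = Callable[[Case], bool]

# Hypothetical known rule (the rationale we expect a model to learn):
# a claim is granted iff age >= 60 AND years_contributed >= 4.
ELEMENTS: Dict[str, Callable[[Case], bool]] = {
    "age_condition": lambda c: c["age"] >= 60,
    "contribution_condition": lambda c: c["years_contributed"] >= 4,
}


def known_rule(case: Case) -> bool:
    return all(check(case) for check in ELEMENTS.values())


# Dedicated test cases per element: every other condition holds,
# so the correct decision depends only on the element under test.
TEST_SUITES: Dict[str, List[Case]] = {
    "age_condition": [
        {"age": 59, "years_contributed": 10},
        {"age": 60, "years_contributed": 10},
        {"age": 30, "years_contributed": 10},
        {"age": 80, "years_contributed": 10},
    ],
    "contribution_condition": [
        {"age": 70, "years_contributed": 3},
        {"age": 70, "years_contributed": 4},
        {"age": 70, "years_contributed": 0},
        {"age": 70, "years_contributed": 9},
    ],
}

# Hypothetical case generators that vary one element while keeping the others satisfied.
VARIATORS: Dict[str, Callable[[random.Random], Case]] = {
    "age_condition": lambda rng: {"age": rng.randint(0, 100),
                                  "years_contributed": rng.randint(4, 40)},
    "contribution_condition": lambda rng: {"age": rng.randint(60, 100),
                                           "years_contributed": rng.randint(0, 10)},
}


def evaluate_rationale(model: Model) -> Dict[str, float]:
    """Per element, the fraction of its test cases where the model agrees with the known rule."""
    return {
        element: sum(model(c) == known_rule(c) for c in cases) / len(cases)
        for element, cases in TEST_SUITES.items()
    }


def tailor_training_data(scores: Dict[str, float], n_per_element: int = 100,
                         seed: int = 0) -> List[Tuple[Case, bool]]:
    """Generate labelled cases only for elements that were not fully learned."""
    rng = random.Random(seed)
    data: List[Tuple[Case, bool]] = []
    for element, score in scores.items():
        if score == 1.0:
            continue
        for _ in range(n_per_element):
            case = VARIATORS[element](rng)
            data.append((case, known_rule(case)))
    return data


# A stand-in black box that has only picked up the age condition.
def shortcut_model(case: Case) -> bool:
    return case["age"] >= 60


if __name__ == "__main__":
    scores = evaluate_rationale(shortcut_model)
    print(scores)  # {'age_condition': 1.0, 'contribution_condition': 0.5}
    extra = tailor_training_data(scores, n_per_element=5)
    print(len(extra))  # 5 cases, all targeting contribution_condition
```

Here the shortcut model reaches full accuracy on the age tests but only chance-level agreement on the contribution tests. That exposes a rationale element that a plain accuracy score on ordinary data might hide. The extra cases would then be added to the training set before retraining.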
