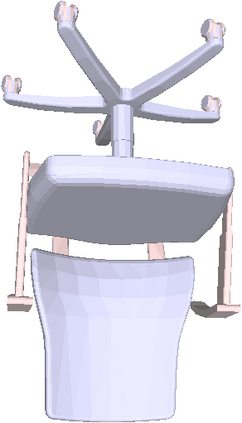

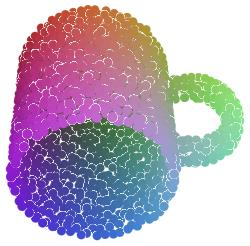

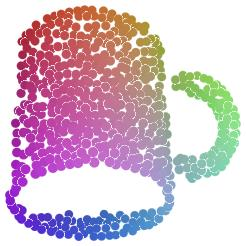

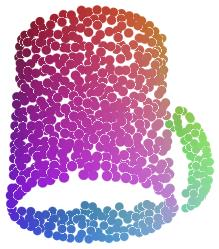

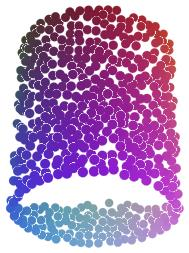

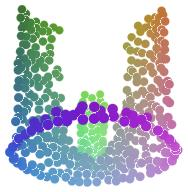

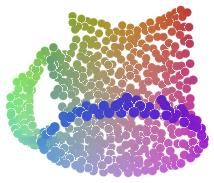

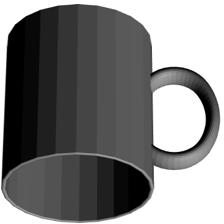

As two fundamental representation modalities of 3D objects, 2D multi-view images and 3D point clouds capture shape information from two different aspects: visual appearance and geometric structure. Whereas deep learning-based 2D multi-view image modeling achieves leading performance in various 3D shape analysis tasks, 3D point cloud-based geometric modeling still suffers from insufficient learning capacity. In this paper, we construct a unified cross-modal knowledge transfer framework that distills discriminative visual descriptors of 2D images into geometric descriptors of 3D point clouds. Technically, under a classic teacher-student learning paradigm, we propose multi-view vision-to-geometry distillation, which consists of a deep 2D image encoder as the teacher and a deep 3D point cloud encoder as the student. To achieve heterogeneous feature alignment, we further propose visibility-aware feature projection, through which per-point embeddings can be aggregated into multi-view geometric descriptors. Extensive experiments on 3D shape classification, part segmentation, and unsupervised learning validate the superiority of our method. We will make the code and data publicly available.
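To make the idea of aggregating per-point embeddings into multi-view geometric descriptors more concrete, the following is a minimal NumPy sketch and not the authors' implementation. The abstract does not specify how visibility is computed, how visible points are pooled, or which distillation loss is used, so everything here is an assumption for illustration: a precomputed boolean visibility mask, max-pooling over visible points, a mean-squared-error loss, and the hypothetical function names `visible_view_descriptors` and `distillation_loss`.

```python
import numpy as np


def visible_view_descriptors(point_feats, visibility):
    """Pool per-point embeddings over the points visible in each view.

    point_feats: (N, C) float array of per-point embeddings (student side).
    visibility:  (V, N) boolean array; visibility[v, i] is True when point i
                 is visible from view v (assumed to be precomputed).
    Returns a (V, C) array with one geometric descriptor per view.
    """
    # Broadcast to (V, N, C); hidden points become -inf so max ignores them.
    masked = np.where(visibility[:, :, None], point_feats[None, :, :], -np.inf)
    pooled = masked.max(axis=1)
    # A view that sees no points gets a zero descriptor instead of -inf.
    has_points = visibility.any(axis=1, keepdims=True)
    return np.where(has_points, pooled, 0.0)


def distillation_loss(student_desc, teacher_desc):
    """Mean squared error between student and teacher view descriptors.

    MSE is an assumed stand-in; the source does not name the loss.
    """
    return float(np.mean((student_desc - teacher_desc) ** 2))


# Toy data: 6 points with 4-dimensional embeddings, seen from 2 views.
rng = np.random.default_rng(0)
point_feats = rng.normal(size=(6, 4))
visibility = np.array(
    [
        [True, True, False, False, False, False],
        [False, False, True, True, True, False],
    ]
)
# Stand-in for the 2D image encoder's per-view descriptors (teacher side).
teacher_desc = rng.normal(size=(2, 4))

student_desc = visible_view_descriptors(point_feats, visibility)
loss = distillation_loss(student_desc, teacher_desc)
```

In a real training setup the loss would be backpropagated into the point cloud encoder only, with the image encoder held fixed as the teacher; that part depends on the chosen deep learning framework and is omitted here.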
