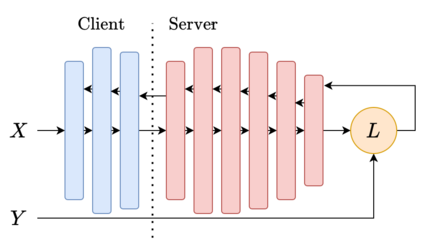

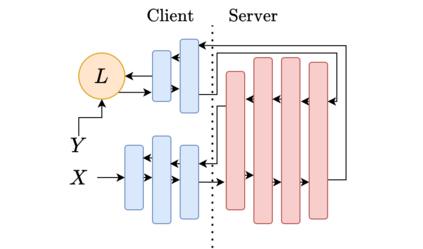

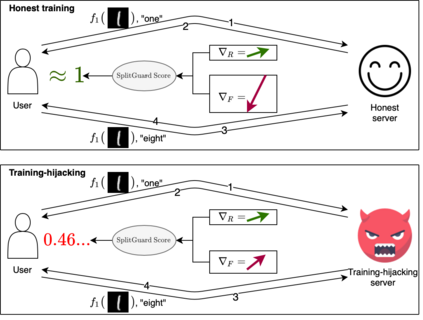

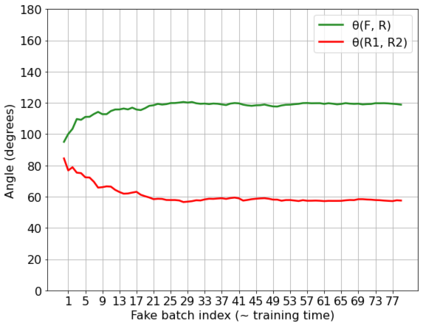

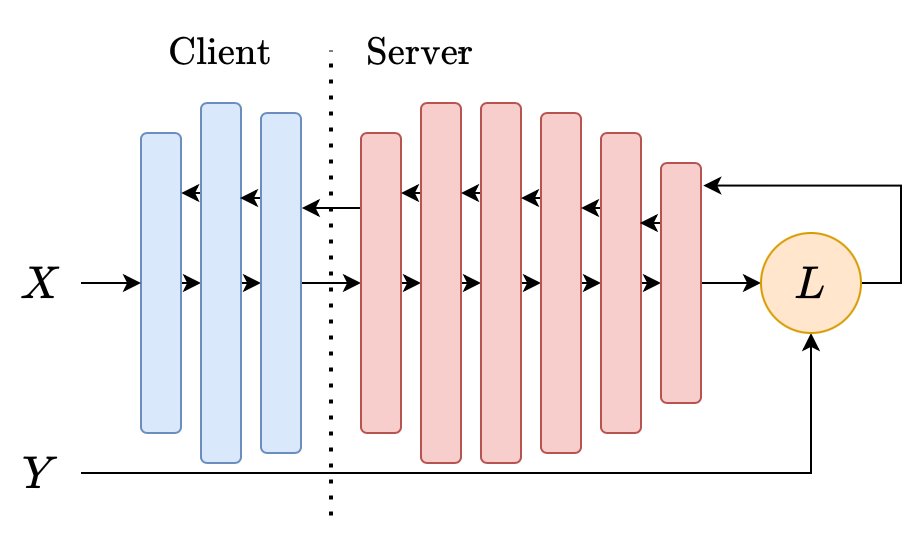

Distributed deep learning frameworks such as split learning have recently been proposed to enable a group of participants to collaboratively train a deep neural network without sharing their raw data. Split learning achieves this by dividing a neural network between a client and a server: the client computes the initial layers, and the server computes the rest. However, this design introduces a unique attack vector for a malicious server attempting to steal the client's private data: the server can direct the client model towards learning a task of its choice. With a concrete example already proposed, such training-hijacking attacks pose a significant risk to the data privacy of split learning clients. In this paper, we propose SplitGuard, a method by which a split learning client can detect whether it is being targeted by a training-hijacking attack. We experimentally evaluate its effectiveness and discuss in detail various points related to its use. We conclude that SplitGuard can effectively detect training-hijacking attacks while minimizing the amount of information recovered by the adversaries.
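
To make the division of labor concrete, the following is a minimal sketch of one possible split learning setup, written in plain Python. It is an illustration only, not the SplitGuard method and not the authors' implementation. The class names `Client` and `Server`, the tiny two-layer network, the toy regression target, and the choice to send the label alongside the activations are all assumptions made for this example. The point it illustrates is that the client's weight update depends entirely on the gradient returned by the server, which is the channel a training-hijacking server could exploit.

```python
import math
import random


class Client:
    """Holds the first layer (hypothetical). The raw input x stays on the client."""

    def __init__(self, n_in, n_hidden, rng):
        self.w = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)]
                  for _ in range(n_hidden)]

    def forward(self, x):
        # Cache the input and activations for the backward pass.
        self.x = x
        self.h = [math.tanh(sum(wi * xi for wi, xi in zip(row, x)))
                  for row in self.w]
        return self.h  # only these activations are sent to the server

    def backward(self, grad_h, lr):
        # The update is driven solely by the gradient the server sends back.
        for j, row in enumerate(self.w):
            g_pre = grad_h[j] * (1.0 - self.h[j] ** 2)  # derivative of tanh
            for i in range(len(row)):
                row[i] -= lr * g_pre * self.x[i]


class Server:
    """Holds the remaining layer (hypothetical) and computes the loss."""

    def __init__(self, n_hidden, rng):
        self.v = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]

    def step(self, h, y, lr):
        pred = sum(vj * hj for vj, hj in zip(self.v, h))
        err = pred - y
        # Gradient w.r.t. the client's activations, computed before updating v.
        grad_h = [err * vj for vj in self.v]
        self.v = [vj - lr * err * hj for vj, hj in zip(self.v, h)]
        return 0.5 * err ** 2, grad_h


def train(epochs=200, lr=0.1, seed=0):
    """Run honest split training on a toy task; return the mean loss per epoch."""
    rng = random.Random(seed)
    client, server = Client(2, 4, rng), Server(4, rng)
    inputs = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(8)]
    data = [(x, x[0] - x[1]) for x in inputs]  # toy target, assumption of this sketch
    losses = []
    for _ in range(epochs):
        total = 0.0
        for x, y in data:
            h = client.forward(x)                  # client -> server: activations
            loss, grad_h = server.step(h, y, lr)   # server -> client: gradient
            client.backward(grad_h, lr)
            total += loss
        losses.append(total / len(data))
    return losses


if __name__ == "__main__":
    history = train()
    print("first epoch loss:", history[0])
    print("last epoch loss:", history[-1])
```

In this sketch the server behaves honestly, so the returned gradient corresponds to the agreed task. Nothing in the exchange itself lets the client verify that, which is the gap a detection method would need to address.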
