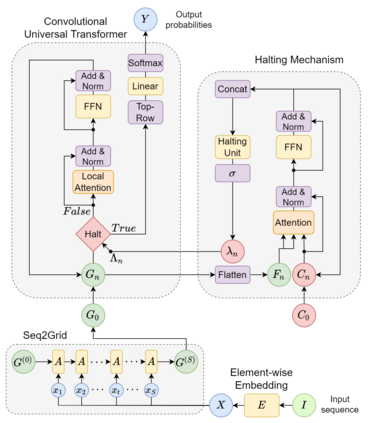

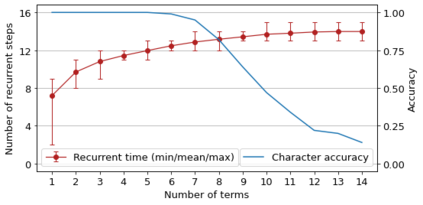

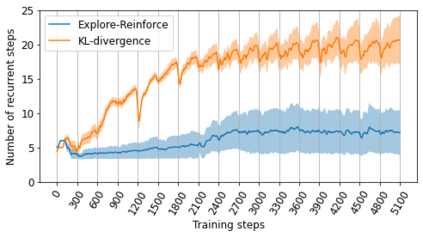

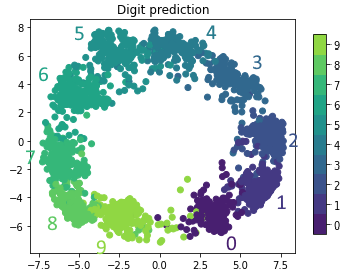

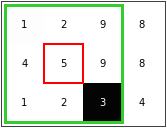

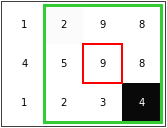

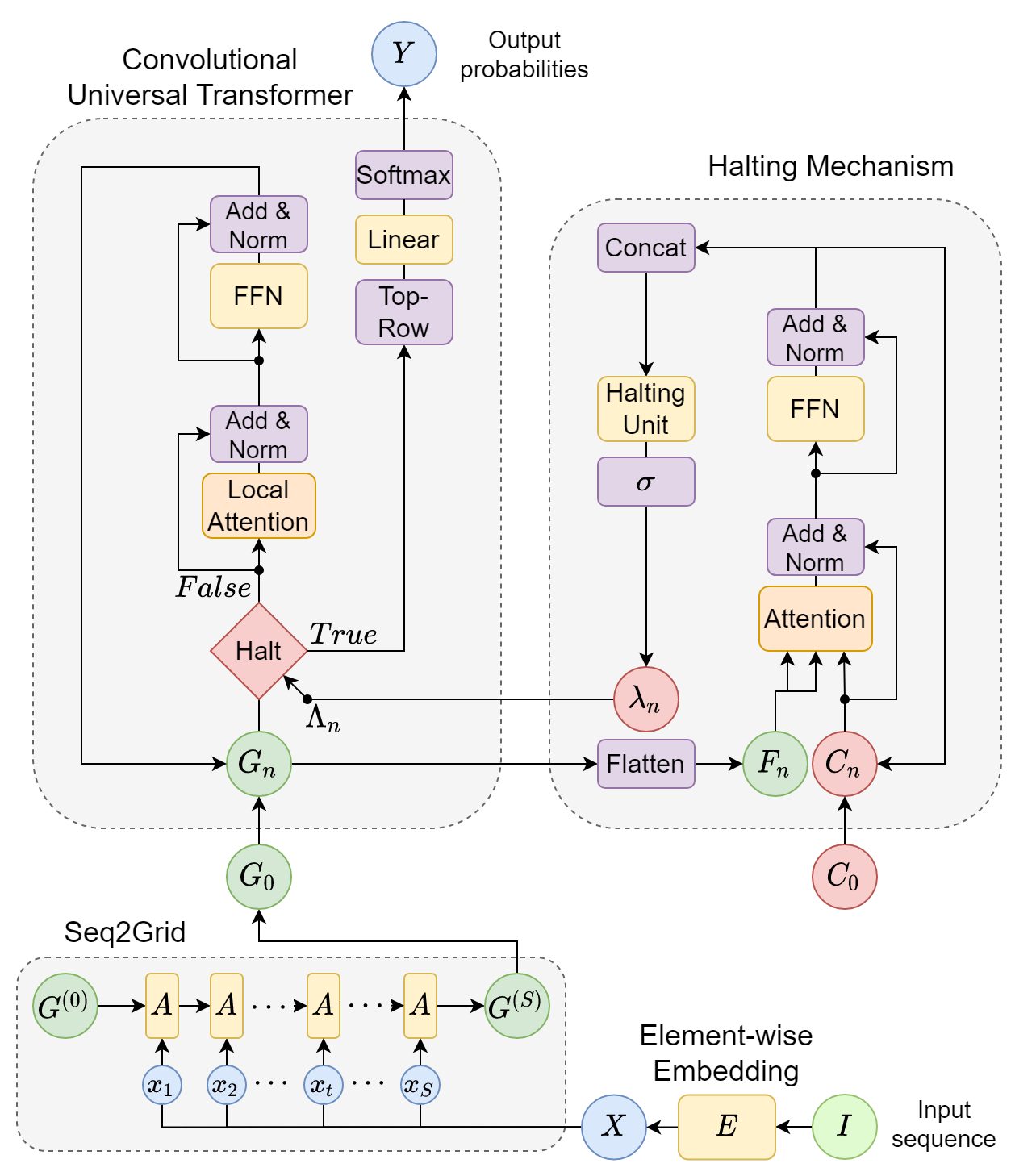

Mathematical reasoning is one of the most impressive achievements of human intellect, but it remains a formidable challenge for artificial intelligence systems. In this work we explore whether modern deep learning architectures can learn to solve a symbolic addition task by discovering effective arithmetic procedures. Although the problem might seem trivial at first glance, generalizing arithmetic knowledge to operations involving a larger number of terms, possibly composed of longer sequences of digits, has proven extremely challenging for neural networks. Here we show that universal transformers equipped with local attention and adaptive halting mechanisms can learn to exploit an external, grid-like memory to carry out multi-digit addition. The proposed model achieves remarkable accuracy even when tested on problems that require extrapolation beyond the training distribution; most notably, it does so by discovering human-like calculation strategies such as place value alignment.
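
To make "place value alignment" on a grid concrete, the sketch below shows the human procedure the abstract alludes to. Each operand is written right-aligned in a 2-D grid, one digit per cell, so that every column holds a single place value, and the sum is then computed column by column from the units place with carries. This is only an illustration of the arithmetic strategy, not the proposed model, which learns to use its grid memory through a universal transformer with local attention and adaptive halting. It assumes non-negative integer operands in base 10, and the helper names `to_grid` and `column_add` are hypothetical.

```python
from typing import List


def to_grid(operands: List[int]) -> List[List[int]]:
    """Hypothetical helper: right-align the decimal digits of each operand
    in a 2-D grid (one row per operand), left-padding with zeros so that
    each column corresponds to one place value (units, tens, ...).
    Assumes a non-empty list of non-negative integers."""
    digits = [str(n) for n in operands]
    width = max(len(d) for d in digits)
    return [[int(c) for c in d.rjust(width, "0")] for d in digits]


def column_add(grid: List[List[int]]) -> int:
    """Hypothetical helper: add the grid column by column, starting from
    the rightmost (units) column and propagating the carry leftward."""
    width = len(grid[0])
    carry = 0
    out_digits = []  # result digits, least significant first
    for col in range(width - 1, -1, -1):
        total = carry + sum(row[col] for row in grid)
        out_digits.append(total % 10)
        carry = total // 10
    # With many operands the final carry can exceed 9, so emit all its digits.
    while carry:
        out_digits.append(carry % 10)
        carry //= 10
    return int("".join(str(d) for d in reversed(out_digits)))


if __name__ == "__main__":
    grid = to_grid([457, 68, 9])
    print(grid)              # [[4, 5, 7], [0, 6, 8], [0, 0, 9]]
    print(column_add(grid))  # 534
```

Once the operands are aligned, every step only needs to look at one column plus the incoming carry. This is the kind of local, repeated operation that local attention over a grid memory and a variable number of processing steps make possible. Longer or more numerous operands simply widen or heighten the grid without changing the per-column rule.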
