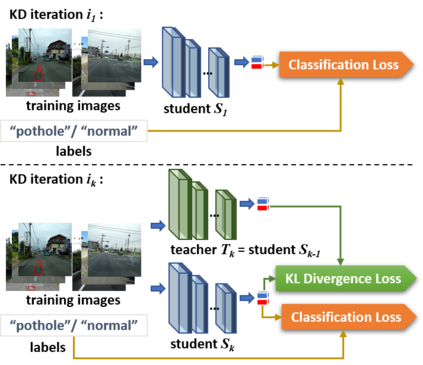

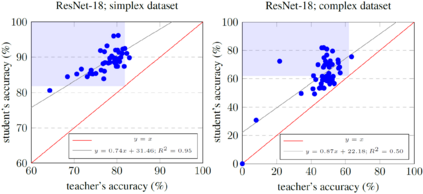

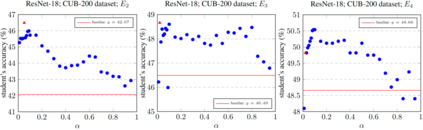

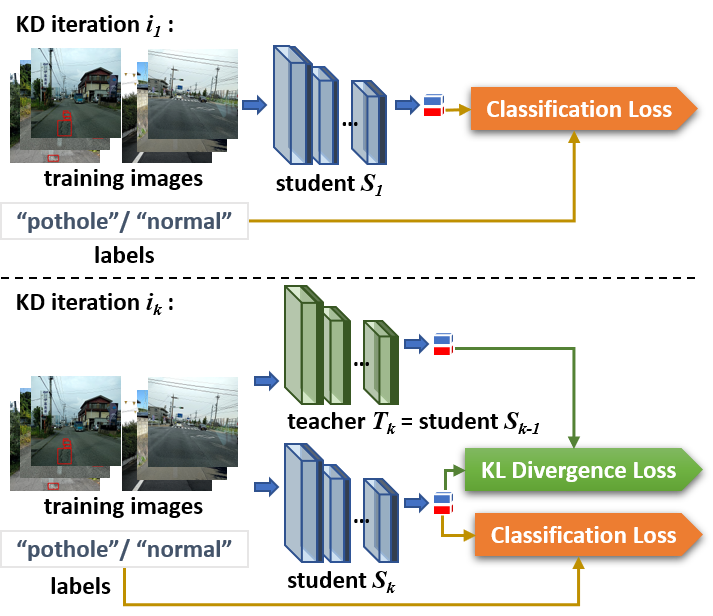

Pothole classification has become an important task for road inspection vehicles, helping drivers avoid potential accidents and repair bills. Given limited computational power and a fixed number of training epochs, we propose iterative self-knowledge distillation (ISKD) to train lightweight pothole classifiers. ISKD is designed to improve both the teacher and student models over time during knowledge distillation. It outperforms the state-of-the-art self-knowledge distillation method on three pothole classification datasets across four lightweight network architectures, which suggests that self-knowledge distillation should be performed iteratively rather than just once. The accuracy relation between the teacher and student models shows that the student model can still benefit from a moderately trained teacher model. Because the results also imply that better teacher models generally produce better student models, they justify the design of ISKD. Beyond pothole classification, we demonstrate the efficacy of ISKD on six additional datasets covering generic classification, fine-grained classification, and medical imaging applications, which suggests that ISKD can serve as a general-purpose performance booster that requires neither a given teacher model nor extra trainable parameters.
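To make the idea of iterating distillation under a fixed epoch budget concrete, the following is a minimal sketch and not the authors' implementation. It assumes a standard Hinton-style distillation loss (hard-label cross-entropy plus temperature-softened KL divergence) and an even split of the epoch budget across stages, with each stage's student reused as the next stage's teacher. The names `distillation_loss`, `split_epochs`, and `iskd_plan`, as well as the values of `alpha` and `temperature`, are illustrative assumptions that do not come from the abstract.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over a list of logits."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, label,
                      alpha=0.5, temperature=4.0):
    """Assumed loss: (1 - alpha) * CE(student, label)
    + alpha * T^2 * KL(teacher || student) on softened outputs."""
    # Cross-entropy of the student against the hard label.
    ce = -math.log(softmax(student_logits)[label])
    # KL divergence between temperature-softened distributions.
    q_teacher = softmax(teacher_logits, temperature)
    q_student = softmax(student_logits, temperature)
    kl = sum(t * math.log(t / s)
             for t, s in zip(q_teacher, q_student) if t > 0)
    return (1 - alpha) * ce + alpha * temperature ** 2 * kl


def split_epochs(total_epochs, num_stages):
    """Divide a fixed epoch budget as evenly as possible across stages."""
    base, extra = divmod(total_epochs, num_stages)
    return [base + (1 if i < extra else 0) for i in range(num_stages)]


def iskd_plan(total_epochs, num_stages):
    """Hypothetical schedule: stage 0 trains without a teacher (an
    assumption); every later stage uses the previous student as teacher."""
    plan = []
    for stage, epochs in enumerate(split_epochs(total_epochs, num_stages)):
        teacher = None if stage == 0 else stage - 1
        plan.append({"stage": stage, "teacher_stage": teacher, "epochs": epochs})
    return plan
```

Under these assumptions, `iskd_plan(200, 4)` describes four 50-epoch stages in which the teacher is refreshed three times, whereas a one-shot self-distillation schedule would correspond to `num_stages=2`. No trainable parameters are added, because the teacher is always a frozen copy of an earlier student.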
