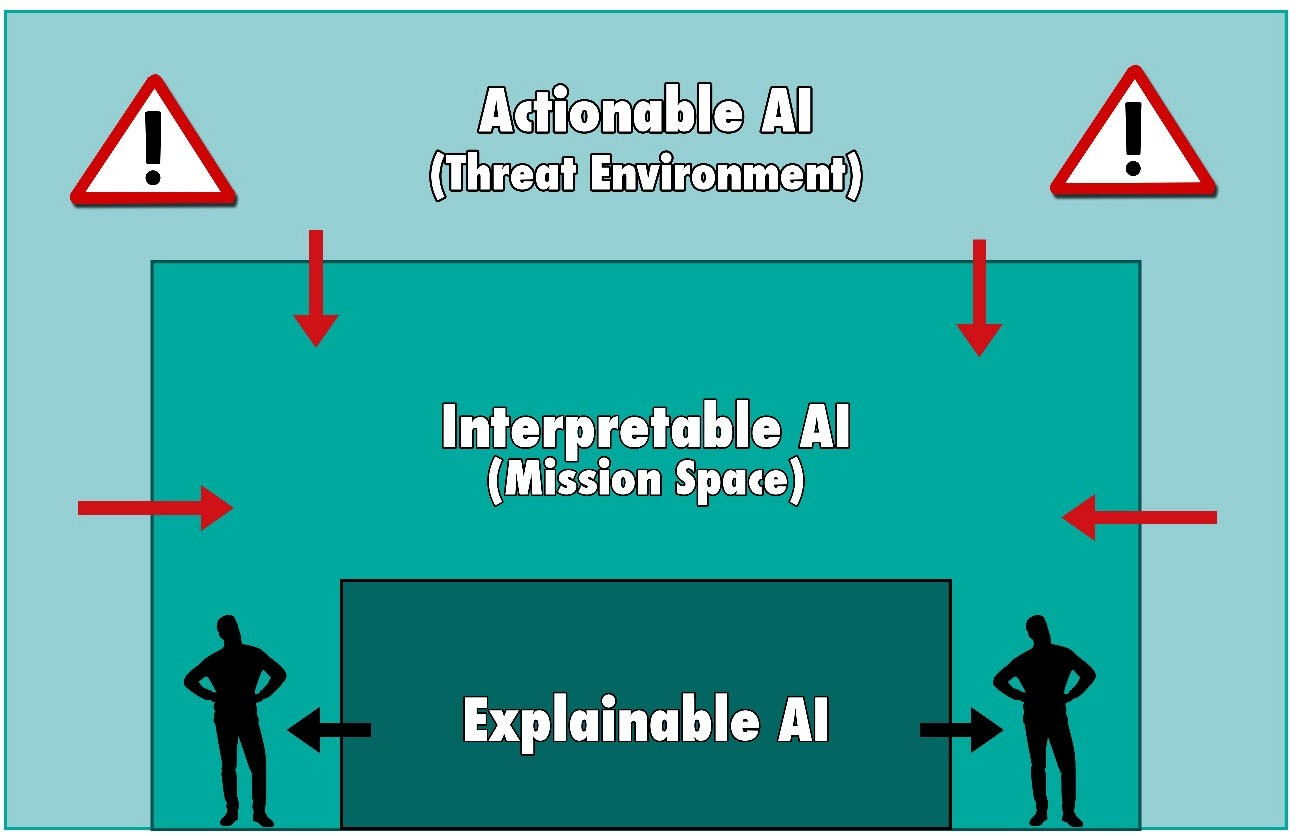

To benefit from AI advances, users and operators of AI systems must have reason to trust them. Trust arises from repeated interactions in which predictable and desirable behavior is reinforced over time. Giving users some understanding of how an AI system operates can support predictability, but forcing AI to explain itself risks constraining its capabilities to only those reconcilable with human cognition. We argue that AI systems should be designed with features that build trust by bringing decision-analytic perspectives and formal tools into AI. Instead of trying to achieve explainable AI, we should develop interpretable and actionable AI. Actionable and Interpretable AI (AI2) will incorporate explicit quantifications and visualizations of user confidence in AI recommendations. In doing so, it will allow users to examine and test AI system predictions, establishing a basis for trust in the systems' decision making and ensuring broad benefits from deploying and advancing their computational capabilities.

翻译:为了从AI的进步中获益,AI系统的用户和操作者必须有理由相信它。信任来自多种互动,这种互动随着时间的推移加强了可预测和可取的行为。为系统用户提供对AI操作的某种了解,可以支持可预测性,但迫使AI解释自己的风险是将AI的能力限制在只有与人类认知相协调的能力上。我们主张,AI系统的设计应具备通过将决策分析观点和正式工具纳入AI来建立信任的特点。我们不应试图实现可解释和可操作的AI,而应开发可解释和可操作的AI。可操作和可解释的AI(AI2)将包含对AI建议中用户信心的明确量化和可视化。这样做,将允许审查和测试AI系统预测,以建立对系统决策的信任基础,并确保通过部署和提升其计算能力获得广泛的好处。