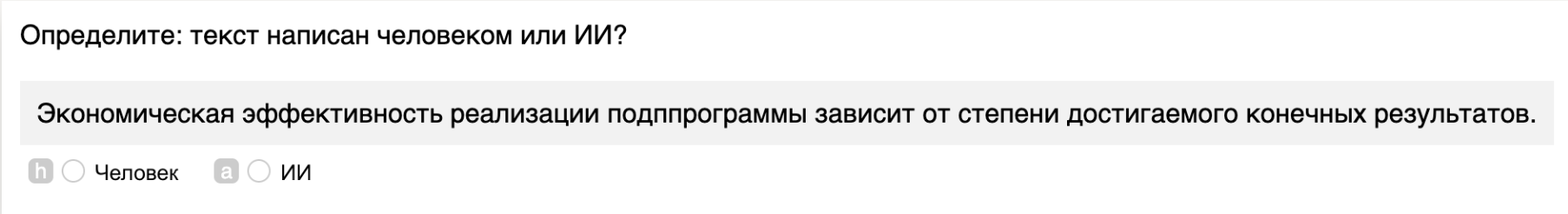

We present the shared task on artificial text detection in Russian, organized as part of the Dialogue Evaluation initiative in 2022. The shared task dataset includes texts from 14 text generators: one human writer and 13 text generation models fine-tuned for one or more of the following generation tasks: machine translation, paraphrase generation, text summarization, and text simplification. We also consider back-translation and zero-shot generation approaches. The human-written texts are collected from publicly available resources across multiple domains. The shared task consists of two sub-tasks: (i) to determine whether a given text is automatically generated or written by a human; (ii) to identify the author of a given text. The first sub-task is framed as a binary classification problem, and the second as a multi-class classification problem. We provide count-based and BERT-based baselines, along with a human evaluation on the first sub-task. A total of 30 and 8 systems were submitted to the binary and multi-class sub-tasks, respectively. Most teams outperform the baselines by a wide margin. We publicly release our codebase, human evaluation results, and other materials in our GitHub repository (https://github.com/dialogue-evaluation/RuATD).
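To make the framing of the two sub-tasks concrete, the following is a minimal sketch of one possible count-based classifier: a multinomial Naive Bayes model over character trigram counts, written in plain Python. It is an illustration only and is not claimed to be the baseline used in the shared task. The class name `CountNaiveBayes`, the helper `char_ngrams`, the labels `"H"` (human) and `"M"` (machine), and the toy training texts are all assumptions introduced here.

```python
import math
from collections import Counter, defaultdict


def char_ngrams(text, n=3):
    """Return the character n-grams of a lower-cased text."""
    text = text.lower()
    return [text[i:i + n] for i in range(max(len(text) - n + 1, 1))]


class CountNaiveBayes:
    """Multinomial Naive Bayes over character n-gram counts (add-one smoothing)."""

    def fit(self, texts, labels):
        self.class_counts = Counter(labels)
        self.feature_counts = defaultdict(Counter)
        for text, label in zip(texts, labels):
            self.feature_counts[label].update(char_ngrams(text))
        # Vocabulary: every n-gram seen in any training text.
        self.vocab = {g for counts in self.feature_counts.values() for g in counts}
        return self

    def predict(self, text):
        total_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, n_docs in self.class_counts.items():
            counts = self.feature_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            score = math.log(n_docs / total_docs)  # log prior
            for gram in char_ngrams(text):
                if gram in self.vocab:  # n-grams unseen in training are skipped
                    score += math.log((counts[gram] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Hypothetical toy data; the real dataset is in the RuATD repository.
TRAIN_TEXTS = [
    "привет, как дела",
    "сегодня хорошая погода",
    "текст текст текст текст",
    "модель модель сгенерировала текст",
]
TRAIN_LABELS = ["H", "H", "M", "M"]

if __name__ == "__main__":
    clf = CountNaiveBayes().fit(TRAIN_TEXTS, TRAIN_LABELS)
    print(clf.predict("текст текст"))
```

The same class covers the multi-class sub-task without changes: passing one label per text generator instead of the two labels above turns author identification into the same argmax over per-class scores.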
