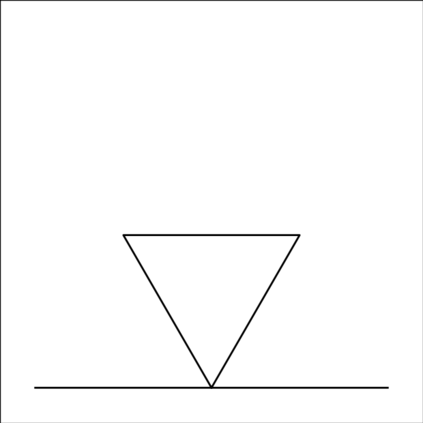

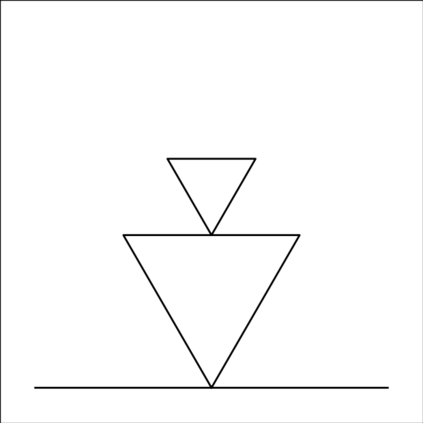

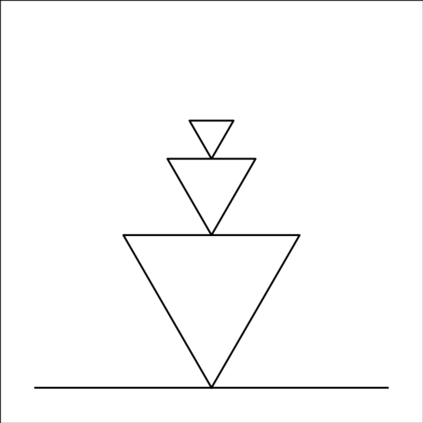

Machine learning has made major advances in categorizing objects in images, yet the best algorithms miss important aspects of how people learn and think about categories. People can learn richer concepts from fewer examples, including causal models that explain how members of a category are formed. Here, we explore the limits of this human ability to infer causal "programs" (latent generating processes with nontrivial algorithmic properties) from one, two, or three visual examples. People were asked to extrapolate the programs in several ways, for both classifying and generating new examples. As a theory of these inductive abilities, we present a Bayesian program learning model that searches the space of programs for the best explanation of the observations. Although variable, people's judgments are broadly consistent with the model and inconsistent with several alternatives, including a pre-trained deep neural network for object recognition, indicating that people can learn and reason with rich algorithmic abstractions from sparse input data.
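The abstract describes the model only at a high level: a Bayesian learner that searches a space of programs for the best explanation of a few examples, then uses that explanation to classify and generate new ones. The sketch below illustrates that general recipe in a hypothetical toy domain; it is not the authors' program grammar or inference procedure. As assumptions: each "program" is a single L-system-style rewrite rule `F -> body`, the prior penalizes longer rules (a description-length prior), the likelihood rewards exactly reproducing each observed example up to an assumed noise level `NOISE`, and exhaustive enumeration stands in for search. All names (`expand`, `posterior`, `predict`, `classify`) are illustrative.

```python
import itertools
import math

# Hypothetical toy domain (an assumption, not the paper's grammar):
# a "program" is one rewrite rule F -> body, applied in parallel to the
# axiom "F" (an L-system), where body is a string over SYMBOLS.
SYMBOLS = "F+-"
MAX_LEN = 4
NOISE = 1e-3  # assumed probability of an example the program fails to produce


def expand(body: str, depth: int) -> str:
    """Apply the rule F -> body `depth` times, starting from the axiom 'F'."""
    s = "F"
    for _ in range(depth):
        s = "".join(body if c == "F" else c for c in s)
    return s


def hypotheses():
    """Enumerate every rule body up to MAX_LEN that contains at least one F."""
    for n in range(1, MAX_LEN + 1):
        for t in itertools.product(SYMBOLS, repeat=n):
            body = "".join(t)
            if "F" in body:
                yield body


def log_prior(body: str) -> float:
    # Description-length prior (unnormalized): each symbol costs
    # log(|SYMBOLS|) nats, so shorter programs are more probable a priori.
    return -len(body) * math.log(len(SYMBOLS))


def log_likelihood(body: str, examples) -> float:
    # examples: list of (depth, string) pairs; a program either reproduces
    # an example exactly or pays the assumed noise penalty.
    return sum(0.0 if expand(body, d) == s else math.log(NOISE)
               for d, s in examples)


def posterior(examples):
    """Normalized posterior over rule bodies given the observed examples."""
    scores = {b: log_prior(b) + log_likelihood(b, examples) for b in hypotheses()}
    m = max(scores.values())
    z = sum(math.exp(v - m) for v in scores.values())
    return {b: math.exp(v - m) / z for b, v in scores.items()}


def predict(examples, depth):
    """Generation: run the MAP program to produce a new example at `depth`."""
    post = posterior(examples)
    best = max(post, key=post.get)
    return best, expand(best, depth)


def classify(examples, depth, candidate):
    """Classification: posterior mass of programs whose output at `depth` is `candidate`."""
    post = posterior(examples)
    return sum(p for b, p in post.items() if expand(b, depth) == candidate)
```

For example, given the two hypothetical observations `F+F` (depth 1) and `F+F+F+F` (depth 2), `predict(examples, 3)` returns the rule `F+F` and its depth-3 output `F+F+F+F+F+F+F+F`, while `classify` scores a candidate new example by summing posterior mass over every program that generates it. Exhaustive enumeration is only feasible because this toy space is tiny; a realistic program space would typically call for stochastic search such as MCMC.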

翻译:机器学习在图像对象分类方面取得了重大进步, 但最佳算法却忽略了人们如何学习和思考类别的重要方面。 人们可以从更少的例子中学习更丰富的概念, 包括解释某类成员如何组成的因果模型。 在这里, 我们探索人类推断因果“ 程序” -- 具有非三重算法特性的潜在生成过程 -- -- 从一、 二或三个视觉例子。 人们被要求以几种方式推断程序, 既分类又产生新的例子。 作为这些感应能力的理论, 我们展示了一种巴伊西亚程序学习模型, 探索程序空间, 以对观察进行最佳解释。 虽然人们的判断与模型大致一致, 并且与几种替代方法不相符, 包括一个经过预先训练的深神经网络, 以确认物体。 这表明人们可以从稀有的输入数据中学习丰富的算法抽象和理性。