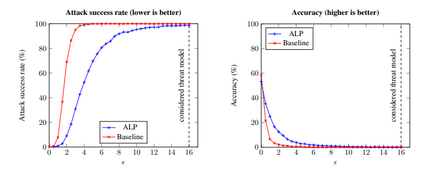

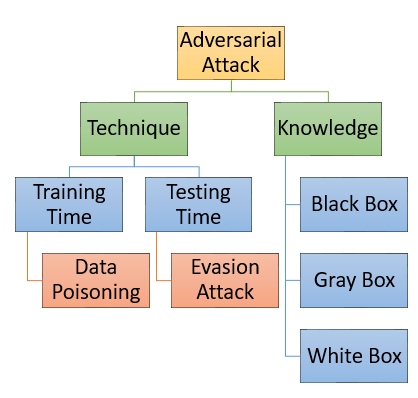

Over the past couple of years, a cycle has emerged in adversarial machine learning: researchers propose a defence model that is arguably robust to most existing attacks under restricted conditions (it is evaluated only on some bounded inputs or datasets), and shortly afterwards another group of researchers finds vulnerabilities in that defence model and breaks it by proposing a stronger attack model. Several common flaws have been noticed in past defence models that were broken within a very short time. That defence models are broken so easily is a point of concern, because many crucial decisions are made with the help of machine learning models. There is therefore a pressing need for a set of checkpoints that any researcher should keep in mind when evaluating the soundness of a technique before declaring it a decent defence. In this paper, we suggest a few checkpoints that should be taken into consideration while building and evaluating the soundness of defence models. All of these points are recommended after observing why some past defence models failed and how others held firm and proved their soundness against some very strong attacks.
