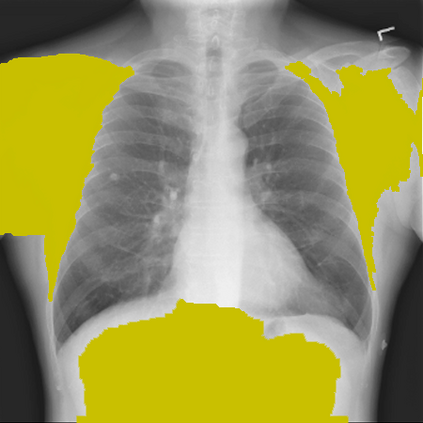

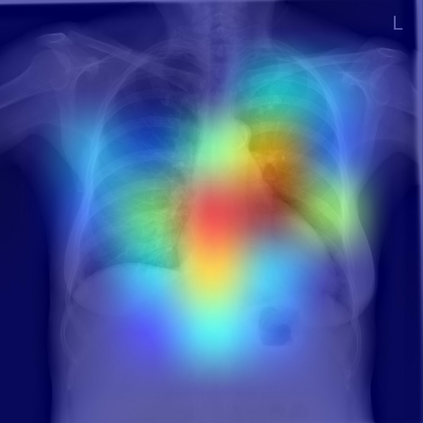

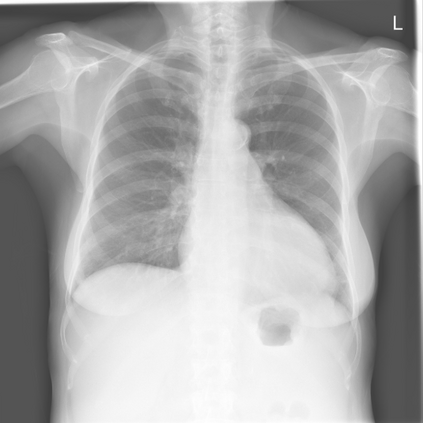

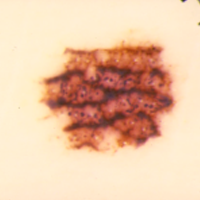

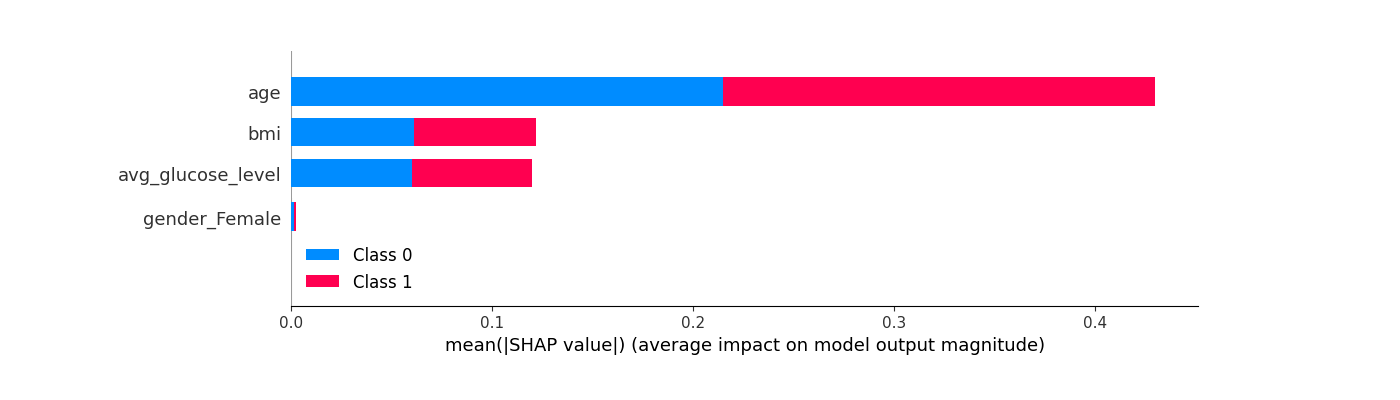

The remarkable success of deep learning has prompted interest in its application to medical imaging diagnosis. Although state-of-the-art deep learning models have achieved human-level accuracy in classifying different types of medical data, they are rarely adopted in clinical workflows, mainly because they lack interpretability. The black-box nature of these models has created a need for strategies that explain their decision process, giving rise to the field of eXplainable Artificial Intelligence (XAI). In this context, we provide a thorough survey of XAI applied to medical imaging diagnosis, covering visual, textual, example-based and concept-based explanation methods. We also review the existing medical imaging datasets and the existing metrics for evaluating the quality of explanations, and we include a performance comparison among a set of report generation-based methods. Finally, we discuss the major challenges in applying XAI to medical imaging and future research directions on the topic.
