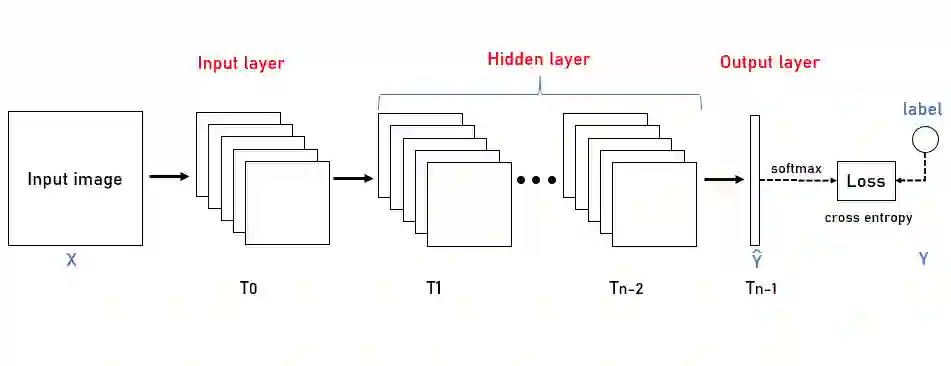

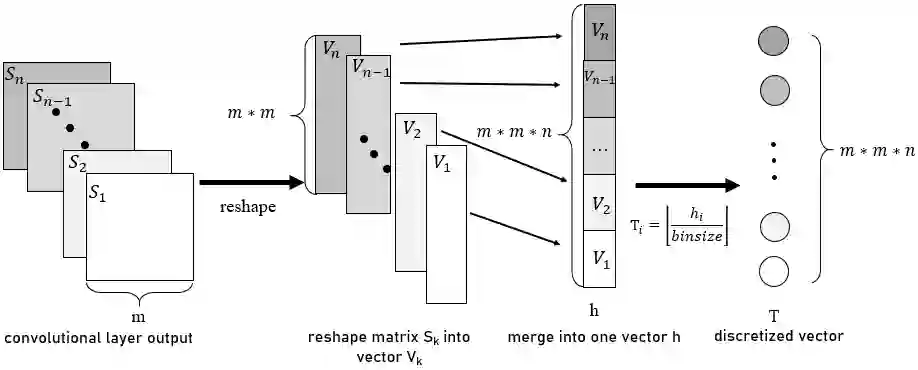

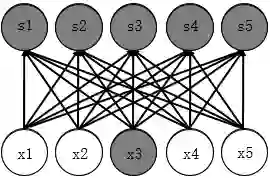

In recent years, many studies have attempted to open the black box of deep neural networks and have proposed various theories to understand them. Among these, Information Bottleneck (IB) theory claims that training proceeds in two distinct phases: a fitting phase and a compression phase. This claim has attracted considerable attention because of its success in explaining the inner behavior of feedforward neural networks. In this paper, we use IB theory to understand the dynamic behavior of convolutional neural networks (CNNs) and investigate how fundamental features, such as convolutional layer width, kernel size, network depth, pooling layers and multiple fully connected layers, affect the performance of CNNs. In particular, through a series of experiments on the MNIST and Fashion-MNIST benchmarks, we demonstrate that the compression phase is not observed in all of these cases. This shows that CNNs exhibit more complicated behavior than feedforward neural networks.
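The two phases are usually read off the "information plane", which tracks, over the course of training, the mutual information I(X;T) between the input X and a layer's representation T, and I(T;Y) between T and the label Y. During fitting both quantities grow; during compression I(X;T) shrinks. The abstract does not say how this paper estimates these quantities, so the sketch below assumes the simple binning estimator used in Shwartz-Ziv and Tishby's information-plane experiments. It further assumes that activations are discretized into equal-width bins, that every input sample is distinct (so T is a deterministic function of X and I(X;T) = H(T)), and that activations lie in [-1, 1] as for tanh units. The function names `entropy_bits` and `information_plane_point` and their parameters are hypothetical.

```python
from collections import Counter

import numpy as np


def entropy_bits(symbols):
    """Shannon entropy (in bits) of a list of hashable symbols."""
    counts = np.array(list(Counter(symbols).values()), dtype=float)
    p = counts / counts.sum()
    return float(-(p * np.log2(p)).sum())


def information_plane_point(activations, labels, n_bins=30, low=-1.0, high=1.0):
    """Binning estimate of (I(X;T), I(T;Y)) for one layer T.

    Assumptions (hypothetical, not taken from the paper):
    - activations has shape (n_samples, n_units) and lies roughly in [low, high];
    - every input sample is distinct, so I(X;T) = H(T) - H(T|X) = H(T).
    """
    # Equal-width bin edges; np.digitize maps each unit's value to a bin index.
    edges = np.linspace(low, high, n_bins + 1)
    binned = np.digitize(np.asarray(activations), edges)
    # Each sample's binned layer output becomes one discrete symbol.
    t = [tuple(row) for row in binned]

    h_t = entropy_bits(t)

    # H(T|Y) = sum over classes y of p(y) * H(T | Y = y).
    labels = np.asarray(labels)
    h_t_given_y = 0.0
    for y in np.unique(labels):
        idx = np.flatnonzero(labels == y)
        h_t_given_y += len(idx) / len(labels) * entropy_bits([t[i] for i in idx])

    i_xt = h_t                 # I(X;T) under the distinct-inputs assumption
    i_ty = h_t - h_t_given_y   # I(T;Y) = H(T) - H(T|Y)
    return i_xt, i_ty
```

Calling this on a layer's activations at successive epochs and plotting the resulting (I(X;T), I(T;Y)) pairs gives a training trajectory; a compression phase would show up as I(X;T) turning back toward smaller values. Binning estimates are sensitive to `n_bins`, so trajectories are only comparable at a fixed bin count.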

翻译:近些年来,许多研究试图打开深神经网络的黑盒,并提出各种理论来理解它。其中,信息博特内克(IB)理论声称,在培训过程中,有两个不同的阶段,包括适当阶段和压缩阶段。这个声明吸引了许多关注,因为它成功地解释了进食神经网络的内在行为。在本文中,我们运用IB理论来理解卷发神经网络(CNNs)的动态行为,并调查卷发层宽度、内核大小、网络深度、集合层和多功能连接层等基本特征如何影响CNN的运行。特别是,通过对MNIST和时装-MNIST的基准进行一系列实验分析,我们证明所有这些案例都没有观察到压缩阶段。这向我们展示CNNC有比进取神经网络更为复杂的行为。