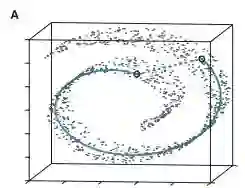

Manifold learning (ML) aims to find a low-dimensional embedding of high-dimensional data. The problem is challenging on real-world datasets, especially when the data are undersampled, and we find that previous methods perform poorly in this case. Generally, ML methods first transform the input data into a low-dimensional embedding space that preserves the data's geometric structure and then perform downstream tasks in that space. The poor local connectivity of undersampled data in the first step and inappropriate optimization objectives in the second step lead to two problems: structural distortion and underconstrained embedding. This paper proposes a novel ML framework named Deep Local-flatness Manifold Embedding (DLME) to solve these problems. DLME constructs semantic manifolds by data augmentation and overcomes the structural distortion problem with a smoothness constraint based on a local flatness assumption about the manifold. To overcome the underconstrained embedding problem, we design a loss and theoretically demonstrate that, under the local flatness assumption, it leads to a more suitable embedding. Experiments on three types of datasets (toy, biological, and image) and various downstream tasks (classification, clustering, and visualization) show that DLME outperforms state-of-the-art ML and contrastive learning methods.
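The structural-distortion argument rests on the poor local connectivity of undersampled data. The following is a minimal sketch, not taken from the paper, that illustrates this effect under stated assumptions: points are placed on a unit circle (a connected 1-D manifold), each point is linked to its k nearest neighbours, and connected components are counted with union-find. The helper names (`circle_points`, `knn_edges`, `count_components`), the choice k = 2, and the sampling pattern are hypothetical illustrations; DLME's augmentation, smoothness constraint, and loss are not implemented here.

```python
import math

def circle_points(indices, total):
    """Place points at the given indices of `total` equally spaced angles on the unit circle."""
    return [
        (math.cos(2 * math.pi * i / total), math.sin(2 * math.pi * i / total))
        for i in indices
    ]

def knn_edges(points, k):
    """Undirected edges linking each point to its k nearest neighbours (Euclidean)."""
    edges = set()
    for i, p in enumerate(points):
        # Sort every other point by its distance to p and keep the k closest.
        ranked = sorted((math.dist(p, q), j) for j, q in enumerate(points) if j != i)
        for _, j in ranked[:k]:
            edges.add((min(i, j), max(i, j)))
    return edges

def count_components(n, edges):
    """Count connected components of a graph on n nodes using union-find."""
    parent = list(range(n))

    def find(x):
        # Follow parent pointers to the root, halving the path as we go.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    for a, b in edges:
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[ra] = rb
    return len({find(i) for i in range(n)})

# Densely sampled circle: each point's 2 nearest neighbours are its adjacent points.
dense = circle_points(range(24), 24)
# Hypothetical undersampled circle: two small clumps on opposite sides, nothing in between.
sparse = circle_points([0, 1, 2, 12, 13, 14], 24)

print(count_components(len(dense), knn_edges(dense, k=2)))   # 1
print(count_components(len(sparse), knn_edges(sparse, k=2)))  # 2
```

The circle itself is connected, yet the neighbourhood graph built from the undersampled points splits into two pieces, so an embedding that only respects graph neighbourhoods has nothing tying the two clumps together. This is one concrete form of the local-connectivity failure the abstract attributes to undersampled data.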